screenshot:

log: /var/log/boot.gz

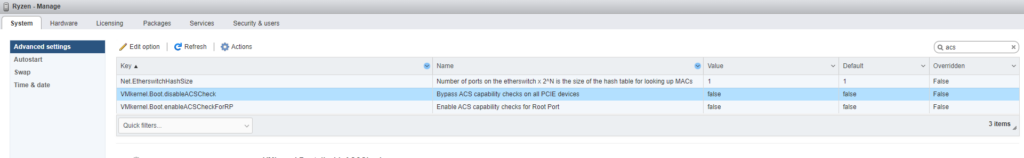

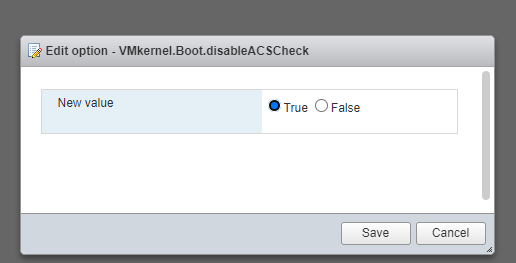

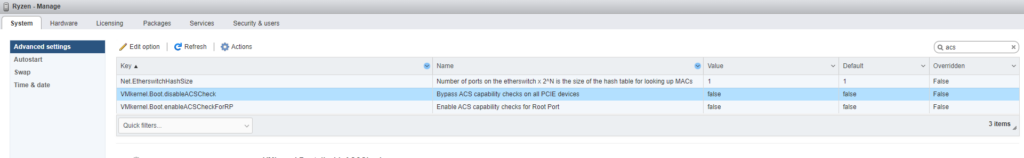

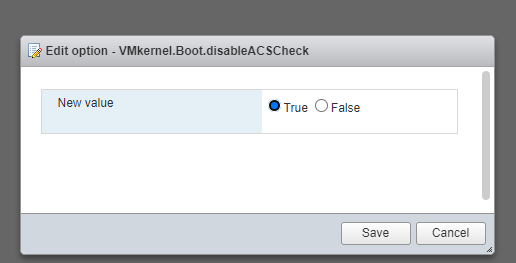

No PCI ACS support: device_id. The workaround was to disable ACS.

Hostd crashes with the below:

Hostd.log

2018-12-17T22:37:50.138Z info hostd[9130B80] [Originator@6876 sub=Hostsvc] Storage data synchronization policy set to invalidate_change

2018-12-17T22:37:50.140Z info hostd[9130B80] [Originator@6876 sub=Libs] lib/ssl: OpenSSL using FIPS_drbg for RAND

2018-12-17T22:37:50.140Z info hostd[9130B80] [Originator@6876 sub=Libs] lib/ssl: protocol list tls1.2

2018-12-17T22:37:50.140Z info hostd[9130B80] [Originator@6876 sub=Libs] lib/ssl: protocol list tls1.2 (openssl flags 0x17000000)

2018-12-17T22:37:50.140Z info hostd[9130B80] [Originator@6876 sub=Libs] lib/ssl: cipher list !aNULL:kECDH+AESGCM:ECDH+AESGCM:RSA+AESGCM:kECDH+AES:ECDH+AES:RSA+AES

2018-12-17T22:37:50.141Z info hostd[9130B80] [Originator@6876 sub=Libs] GetTypedFileSystems: fstype vfat

2018-12-17T22:37:50.141Z info hostd[9130B80] [Originator@6876 sub=Libs] GetTypedFileSystems: uuid 579bba34-1440dc54-3308-70106f411e18 <-----volume that went offline

2018-12-17T22:37:51.136Z info hostd[9AB8B70] [Originator@6876 sub=ThreadPool] Thread enlisted

VMkernel:

2018-12-17T22:19:15.397Z cpu18:65910)VMW_SATP_LOCAL: satp_local_updatePath:789: Failed to update path "vmhba32:C0:T0:L0" state. Status=Transient storage condition, suggest retry

2018-12-17T22:19:18.801Z cpu14:65607)WARNING: NMP: nmp_DeviceRequestFastDeviceProbe:237: NMP device "eui.00a0504658335330" state in doubt; requested fast path state update...

2018-12-17T22:19:18.801Z cpu14:65607)ScsiDeviceIO: 2968: Cmd(0x439d4981c540) 0x1a, CmdSN 0x6d2dc7 from world 0 to dev "eui.00a0504658335330" failed H:0x7 D:0x0 P:0x0 Invalid sense data: 0x0 0x0 0x0.

2018-12-17T22:19:21.394Z cpu6:5268773)ScsiPath: 5115: Command 0x0 (cmdSN 0x0, World 0) to path vmhba32:C0:T0:L2 timed out: expiry time occurs 1002ms in the past

2018-12-17T22:19:21.394Z cpu6:5268773)VMW_SATP_LOCAL: satp_local_updatePath:789: Failed to update path "vmhba32:C0:T0:L2" state. Status=Transient storage condition, suggest retry

2018-12-17T22:19:22.892Z cpu22:65615)ScsiDeviceIO: 2968: Cmd(0x439d4981c540) 0x1a, CmdSN 0x6d2dc7 from world 0 to dev "eui.00a0504658335330" failed H:0x5 D:0x0 P:0x0 Invalid sense data: 0x0 0x0 0x0.

2018-12-17T22:19:23.400Z cpu34:65627)NMP: nmp_ThrottleLogForDevice:3593: last error status from device eui.00a0504658335330 repeated 1 times

2018-12-17T22:19:23.400Z cpu34:65627)NMP: nmp_ThrottleLogForDevice:3647: Cmd 0x1a (0x439d4989a540, 0) to dev "eui.00a0504658335330" on path "vmhba32:C0:T0:L0" Failed: H:0x5 D:0x0 P:0x0 Invalid sense data: 0x0 0x0 0x0. Act:EVAL

2018-12-17T22:19:23.400Z cpu34:65627)WARNING: NMP: nmp_DeviceRequestFastDeviceProbe:237: NMP device "eui.00a0504658335330" state in doubt; requested fast path state update...

2018-12-17T22:19:23.400Z cpu34:65627)ScsiDeviceIO: 2968: Cmd(0x439d4989a540) 0x1a, CmdSN 0x6d2dc8 from world 0 to dev "eui.00a0504658335330" failed H:0x5 D:0x0 P:0x0 Invalid sense data: 0x0 0x0 0x0.

2018-12-17T22:19:26.798Z cpu14:65607)NMP: nmp_ThrottleLogForDevice:3647: Cmd 0x1a (0x439d4a8ed5c0, 0) to dev "eui.00a0504658335331" on path "vmhba32:C0:T0:L1" Failed: H:0x7 D:0x0 P:0x0 Invalid sense data: 0x0 0x0 0x0. Act:EVAL

2018-12-17T22:19:26.798Z cpu14:65607)WARNING: NMP: nmp_DeviceRequestFastDeviceProbe:237: NMP device "eui.00a0504658335331" state in doubt; requested fast path state update...

2018-12-17T22:19:26.798Z cpu14:65607)ScsiDeviceIO: 2968: Cmd(0x439d4a8ed5c0) 0x1a, CmdSN 0x6d2dd8 from world 0 to dev "eui.00a0504658335331" failed H:0x7 D:0x0 P:0x0 Invalid sense data: 0x31 0x22 0x20.

Scsi Decoder: Link

In my case, the volume appears to have gone offline because the host was aborting commands sent to the HBA.
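To make the H: values in the VMkernel lines easier to read, here is a minimal Python sketch that counts host status codes per device. The `HOST_STATUS` table is my recollection of VMware's published SCSI host status codes, so verify it against the KB before relying on it. The function name and regex are illustrative only.

```python
import re
from collections import Counter

# Host status (H:) names as I recall them from VMware's SCSI sense code
# documentation; treat this table as an assumption and verify before use.
HOST_STATUS = {
    0x0: "OK", 0x1: "NO_CONNECT", 0x2: "BUS_BUSY", 0x3: "TIMEOUT",
    0x4: "BAD_TARGET", 0x5: "ABORT", 0x6: "PARITY", 0x7: "ERROR",
    0x8: "RESET", 0x9: "BAD_INTR", 0xA: "PASSTHROUGH", 0xB: "SOFT_ERROR",
}

# Matches: ... dev "<id>" ... H:0x5 D:0x0 P:0x0 ...
STATUS_RE = re.compile(
    r'dev "(?P<dev>[^"]+)".*?'
    r'H:(?P<h>0x[0-9a-fA-F]+) D:(?P<d>0x[0-9a-fA-F]+) P:(?P<p>0x[0-9a-fA-F]+)'
)


def summarize_host_status(lines):
    """Count (device, host status name) pairs found in vmkernel log lines."""
    counts = Counter()
    for line in lines:
        match = STATUS_RE.search(line)
        if not match:
            continue  # line carries no H:/D:/P: triple
        code = int(match.group("h"), 16)
        counts[(match.group("dev"), HOST_STATUS.get(code, hex(code)))] += 1
    return counts


if __name__ == "__main__":
    import sys
    # Usage (hypothetical): python scsi_status.py < vmkernel.log
    for (dev, status), n in sorted(summarize_host_status(sys.stdin).items()):
        print(dev, status, n)
```

With the log above, H:0x5 should show up as ABORT, which is consistent with the host aborting commands.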

/etc/init.d/hostd status

hostd is not running.

However,

ps | grep hostd

2098894 2098894 hostdCgiServer

2105175 2105175 hostd

2105176 2105175 hostd-worker

2105177 2105175 hostd-worker

2105178 2105175 hostd-worker

2105179 2105175 hostd-worker

2105180 2105175 hostd-IO

2105181 2105175 hostd-IO

2105182 2105175 hostd-fair

2105183 2105175 hostd-worker

2105184 2105175 hostd-worker

2105185 2105175 hostd-worker

2105187 2105175 hostd-worker

2105191 2105175 hostd-worker

2105192 2105175 hostd-worker

2105193 2105175 hostd-worker

2105194 2105175 hostd-worker

2105251 2105175 hostd-poll

localcli storage core device world list

Device World ID Open Count World Name

------------------------------------------------------------------------------------------------------

mpx.vmhba32:C0:T0:L0 2099479 1 smartd

mpx.vmhba32:C0:T0:L0 2105105 1 vpxa

mpx.vmhba32:C0:T0:L0 2105175 1 hostd

naa.600508b1001c555e5048cfd74e058fdc 2097185 1 idle0

naa.600508b1001c555e5048cfd74e058fdc 2097403 1 OCFlush

naa.600508b1001c555e5048cfd74e058fdc 2098198 1 Res6AffinityMgrWorld

naa.600508b1001c555e5048cfd74e058fdc 2098325 1 Vol3JournalExtendMgrWorld

naa.600508b1001c555e5048cfd74e058fdc 2099479 1 smartd

naa.600508b1001c555e5048cfd74e058fdc 2105175 1 hostd

naa.6001405d7dc7524f3364522a27b7c508 2097185 1 idle0

naa.6001405d7dc7524f3364522a27b7c508 2099760 1 fdm

naa.6001405d7dc7524f3364522a27b7c508 2099766 1 worker

naa.6001405d7dc7524f3364522a27b7c508 2099771 1 worker

naa.6001405d7dc7524f3364522a27b7c508 2100189 1 J6AsyncReplayManager

naa.6001405d7dc7524f3364522a27b7c508 2100219 1 worker

naa.6001405d7dc7524f3364522a27b7c508 2105175 1 hostd

naa.6001405d7dc7524f3364522a27b7c508 2105286 1 hostd-worker

t10.NVMe____THNSN51T02DUK_NVMe_TOSHIBA_1024GB_______E3542500020D0800 2097185 1 idle0

t10.NVMe____THNSN51T02DUK_NVMe_TOSHIBA_1024GB_______E3542500020D0800 2097446 1 bcflushd

t10.NVMe____THNSN51T02DUK_NVMe_TOSHIBA_1024GB_______E3542500020D0800 2098539 1 J6AsyncReplayManager

t10.NVMe____THNSN51T02DUK_NVMe_TOSHIBA_1024GB_______E3542500020D0800 2105105 1 vpxa

t10.NVMe____THNSN51T02DUK_NVMe_TOSHIBA_1024GB_______E3542500020D0800 2105175 1 hostd

naa.600508b1001c5a5167700b7ae7160e91 2097185 1 idle0

naa.600508b1001c5a5167700b7ae7160e91 2097403 1 OCFlush

naa.600508b1001c5a5167700b7ae7160e91 2098198 1 Res6AffinityMgrWorld

naa.600508b1001c5a5167700b7ae7160e91 2098325 1 Vol3JournalExtendMgrWorld

naa.600508b1001c5a5167700b7ae7160e91 2099479 1 smartd

naa.600508b1001c5a5167700b7ae7160e91 2105175 1 hostd

naa.600508b1001ca2c68c28022b4447710f 2097185 1 idle0

naa.600508b1001ca2c68c28022b4447710f 2097403 1 OCFlush

naa.600508b1001ca2c68c28022b4447710f 2098198 1 Res6AffinityMgrWorld

naa.600508b1001ca2c68c28022b4447710f 2098325 1 Vol3JournalExtendMgrWorld

naa.600508b1001ca2c68c28022b4447710f 2099479 1 smartd

naa.600508b1001ca2c68c28022b4447710f 2105175 1 hostd

This shows that hostd is still running, apparently stuck in a zombie state, even though the init script reports it as not running.
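The cross-check above can be scripted. This is a rough Python sketch that takes the text of `localcli storage core device world list` and returns the devices a given world name still holds open. It assumes world names contain no spaces, as in the listing above. The function name is made up for illustration.

```python
def devices_held_by(world_list_text, world_name):
    """Map world ID -> list of devices opened by worlds named world_name."""
    held = {}
    for line in world_list_text.splitlines():
        # Device names never contain spaces here, so split from the right:
        # <device> <world id> <open count> <world name>
        parts = line.rsplit(None, 3)
        if len(parts) != 4 or not parts[1].isdigit() or not parts[2].isdigit():
            continue  # header, separator, or blank line
        device, world_id, _open_count, name = parts
        if name == world_name:
            held.setdefault(int(world_id), []).append(device)
    return held


if __name__ == "__main__":
    import sys
    # Usage (hypothetical): localcli storage core device world list | python held.py
    for wid, devices in devices_held_by(sys.stdin.read(), "hostd").items():
        print(wid, len(devices), "device(s) open")
```

If the world ID returned here matches the hostd PID from ps, the process is still alive and holding devices even though the init script says otherwise.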

To resolve this, we will need to

Worst case scenario: reboot the host.

Joining ESXi to a domain fails with the following message:

[root@esx:~] ./usr/lib/vmware/likewise/bin/domainjoin-cli join ntitta.in

Joining to AD Domain: ntitta.in

With Computer DNS Name: esx.

[email protected]'s password:

Error: LW_ERROR_INVALID_MESSAGE [code 0x00009c46]

The Inter Process message is invalid

The output of cat /etc/hosts is shown below:

cat /etc/hosts

# that require network functionality will fail.

127.0.0.1 localhost.localdomain localhost

::1 localhost.localdomain localhost

192.168.1.104 esx. esx <------------------notice the "esx."

In some cases, the hostname in the /etc/hosts file does not match that of the host (look for the "With Computer DNS Name: esx." message in the domainjoin-cli output).

To fix this, correct the /etc/hosts file (in my case, I had to append the DNS suffix to the hostname).

cat /etc/hosts:

# that require network functionality will fail.

127.0.0.1 localhost.localdomain localhost

::1 localhost.localdomain localhost

192.168.1.104 esx.ntitta.in esx
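A quick way to spot this kind of entry before attempting the join is to scan the hosts file for names that end in a dot or carry no DNS suffix. The Python sketch below is only an illustration of that check. The function name and the "suspicious" rule are my own assumptions, not anything Likewise itself does.

```python
def suspicious_host_entries(hosts_text):
    """Return (ip, name) pairs whose first hostname looks incomplete.

    A name is flagged if it ends with '.' (as in "esx.") or has no dot
    at all, meaning no DNS suffix was appended.
    """
    findings = []
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        fields = line.split()
        if len(fields) < 2:
            continue
        ip, canonical = fields[0], fields[1]
        if canonical.endswith(".") or "." not in canonical:
            findings.append((ip, canonical))
    return findings


if __name__ == "__main__":
    with open("/etc/hosts") as handle:
        for ip, name in suspicious_host_entries(handle.read()):
            print("check entry:", ip, name)
```

Against the first hosts file shown in this post it would flag the "esx." entry and, after the fix, nothing.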

Restart lwsmd:

/etc/init.d/lwsmd start

Re-attempt the domain join from the CLI.

Note: In some cases, after changing /etc/hosts, joining the host via PowerCLI fails with the error "vmwauth InvalidHostNameException: The current hostname is invalid: hostname cannot be resolved".

Hostd.log:

2019-01-07T19:20:16.288Z error hostd[DEC1B70] [Originator@6876 sub=ActiveDirectoryAuthentication opID=500fc81b-b5-602f user=vpxuser:IKIGO\nik] vmwauth InvalidHostNameException: The current hostname is invalid: hostname cannot be resolved

The management agents (or the host) need a restart to sort this out.

services.sh restart

Create a virtual machine with EFI boot, install Windows and VMware Tools, and power down the VM.

Open an SSH session to the host where the VM is registered.

Use vim-cmd to look for the VM:

vim-cmd vmsvc/getallvms | grep *name_of_VM*

Change to the datastore path:

cd /vmfs/volumes/NVME/nvidia/

Edit the .vmx file using the vi editor and add the lines below:

hypervisor.cpuid.v0 = "FALSE"

pciHole.dynStart = "2816"

Save the file and then reload the VMX (201 is the vmid from the screenshot):

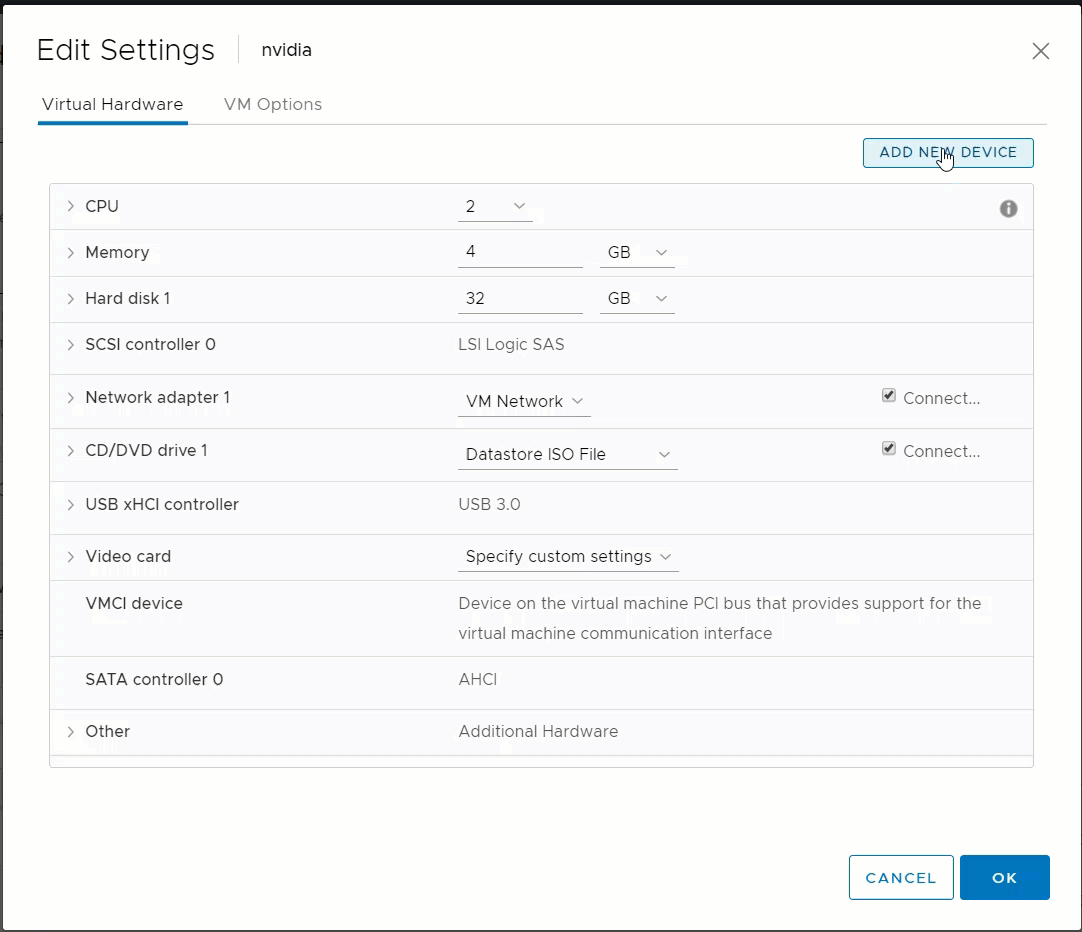

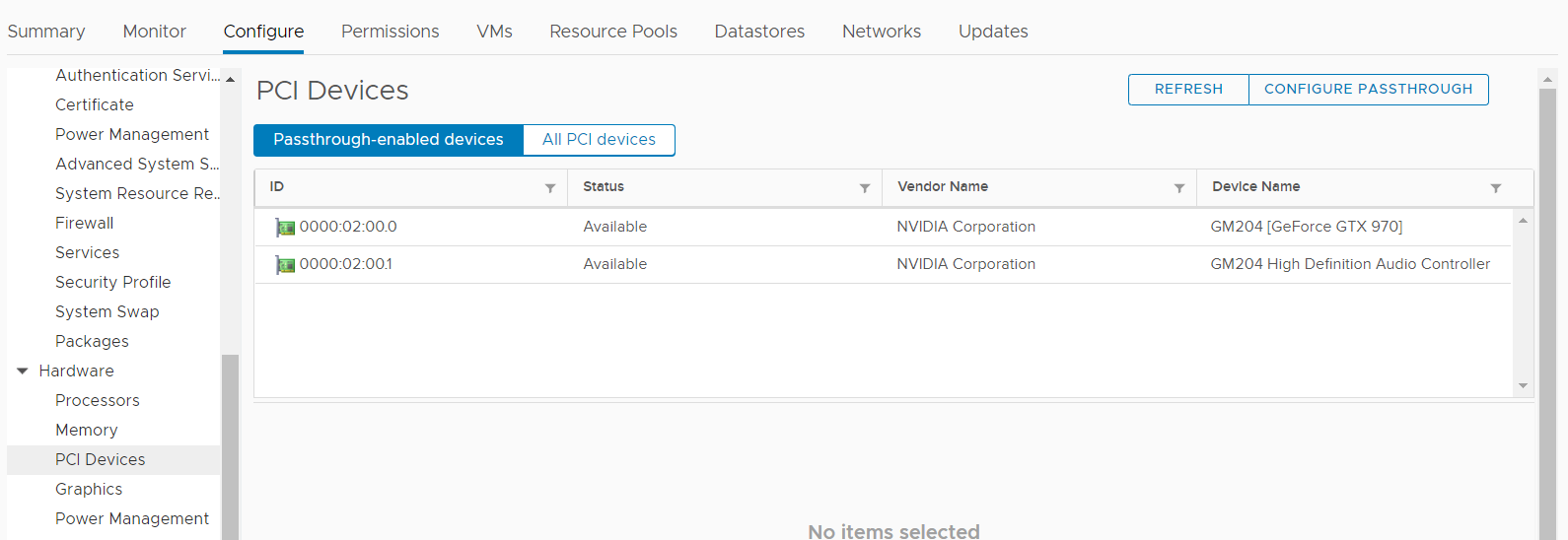

vim-cmd vmsvc/reload 201

Edit settings on the VM > Add new hardware > PCI device, and add the graphics card and its audio device (two PCI passthrough devices). Set the memory reservation to maximum.

Take a snapshot and then power on the VM
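If you would rather not edit the .vmx by hand, the two entries added earlier can be applied with a small script. The Python sketch below works on the file's text and is safe to run more than once. It assumes simple `key = "value"` lines and compares keys case-sensitively. The function name is hypothetical, and the VM should be powered off while the file is changed.

```python
import re

KEY_RE = re.compile(r'\s*([^=\s]+)\s*=')


def set_vmx_options(vmx_text, options):
    """Return vmx_text with each option set exactly once."""
    remaining = dict(options)
    out = []
    for line in vmx_text.splitlines():
        match = KEY_RE.match(line)
        key = match.group(1) if match else None
        if key in remaining:
            # Replace an existing entry instead of adding a duplicate.
            out.append(f'{key} = "{remaining.pop(key)}"')
        else:
            out.append(line)
    for key, value in remaining.items():
        out.append(f'{key} = "{value}"')  # append entries not yet present
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    import sys
    # Usage (hypothetical): python vmxset.py /vmfs/volumes/.../vm.vmx
    path = sys.argv[1]
    with open(path) as handle:
        text = handle.read()
    with open(path, "w") as handle:
        handle.write(set_vmx_options(text, {
            "hypervisor.cpuid.v0": "FALSE",
            "pciHole.dynStart": "2816",
        }))
```

The VMX still has to be reloaded with vim-cmd vmsvc/reload afterwards.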

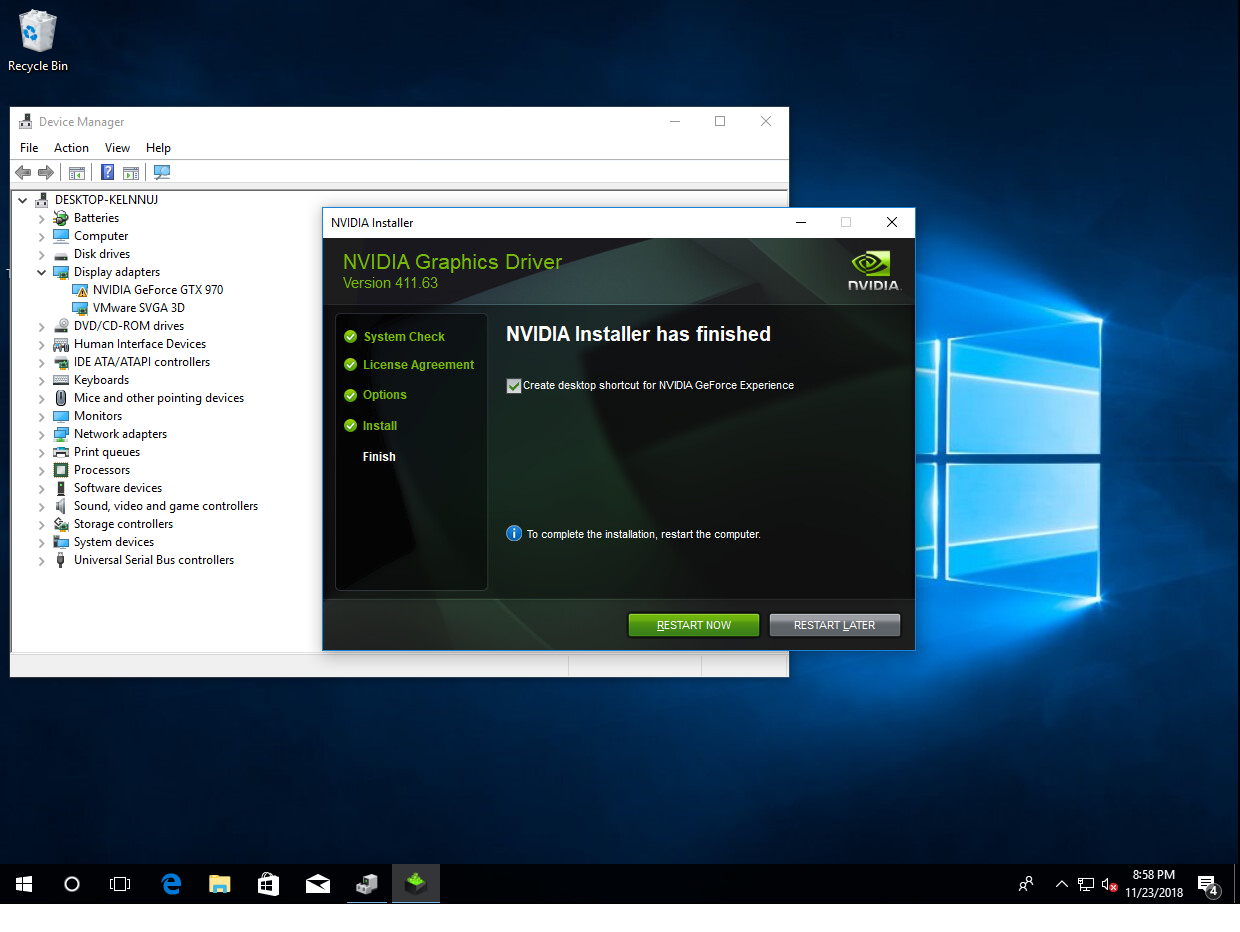

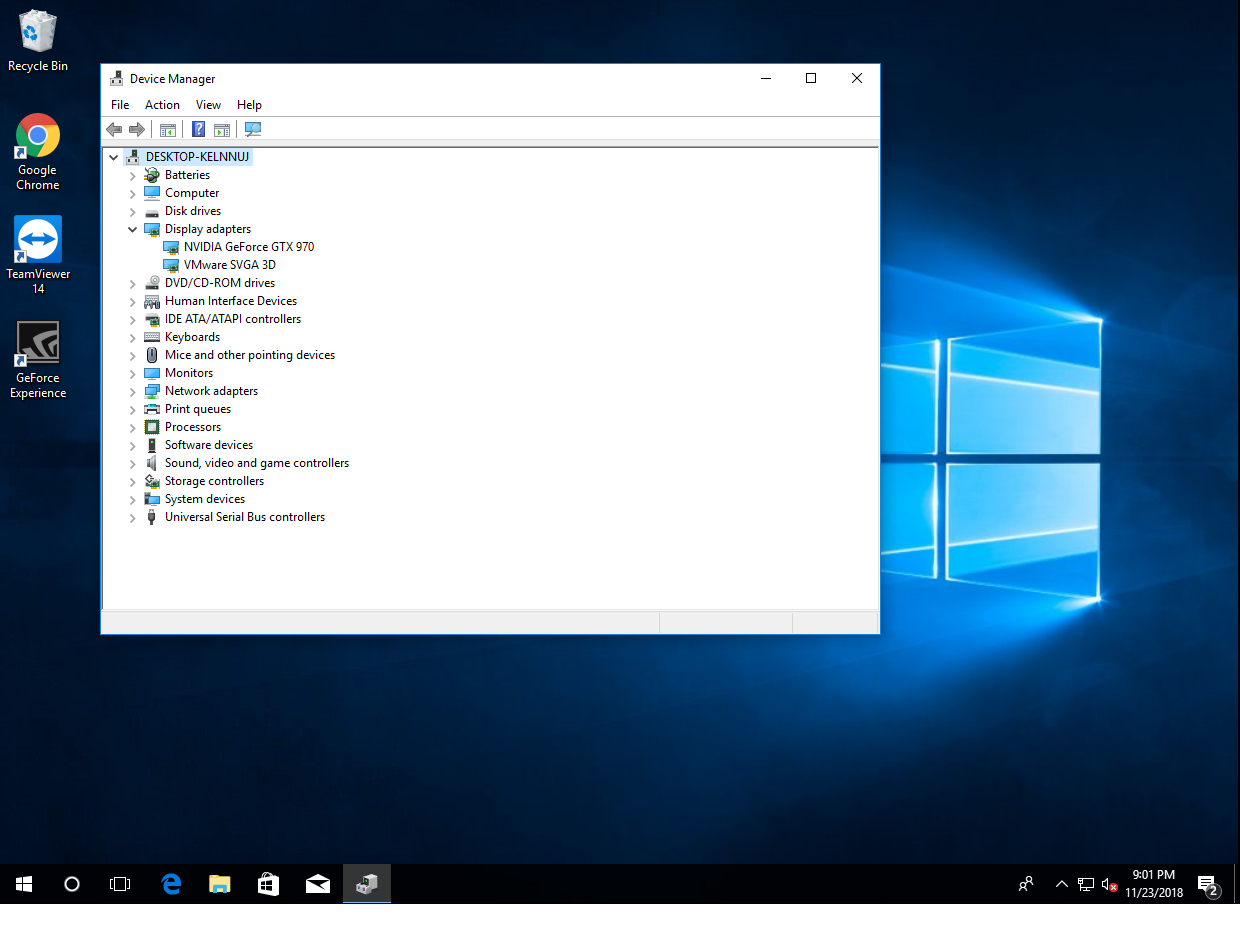

Confirm that the hardware is listed under the graphics adapter, then install the NVIDIA drivers.

Device manager

Note: The NVIDIA card and its audio device must already have been marked for passthrough under PCI devices on the host.

To start off, download the latest version of CentOS 7 from https://www.centos.org/download/

At the time of writing, this is: CentOS-7 (1804)

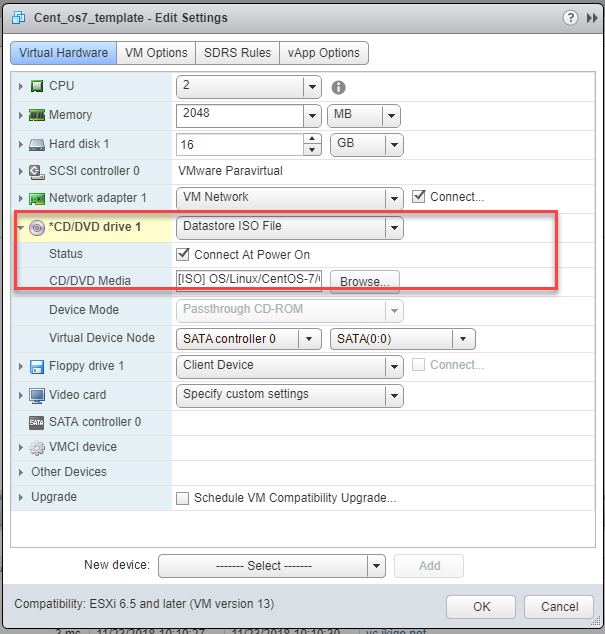

Let's start by creating a new virtual machine. I will select ESXi 6.5 compatibility for backward compatibility with other hosts.

Mount the ISO.

Power on the VM and begin installing the OS.

Note: During the install, we will enable the default NIC and set it to DHCP.

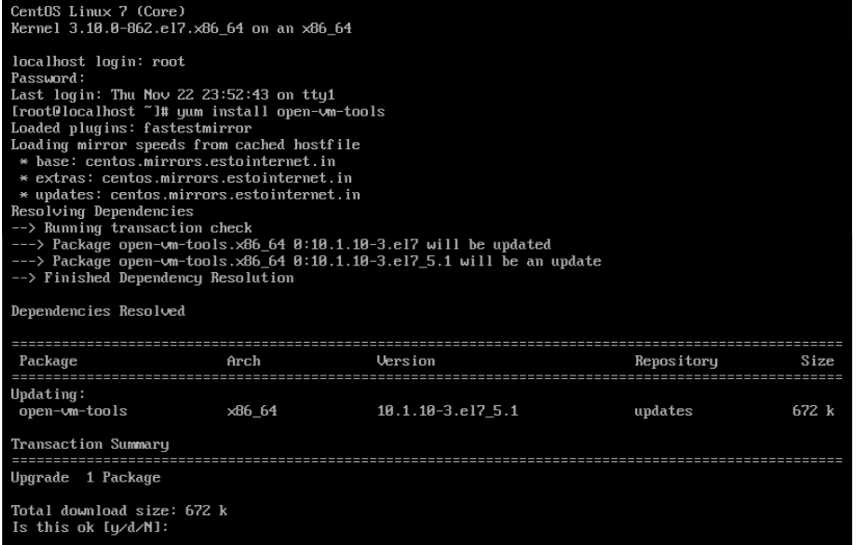

Note: Since the ISO used was from a recent release, open-vm-tools is installed automatically along with the OS. If you are using an older version of the CentOS installer ISO, you must install open-vm-tools with the command below (the VM needs to be connected to the internet).

yum install open-vm-tools

I would recommend updating the tools to the latest release.

Then install Perl (a prerequisite for guest customization):

yum install perl

Once done, power down the VM and convert it to a template.

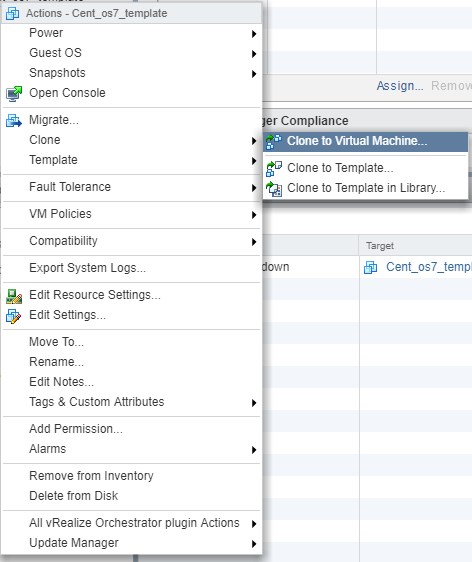

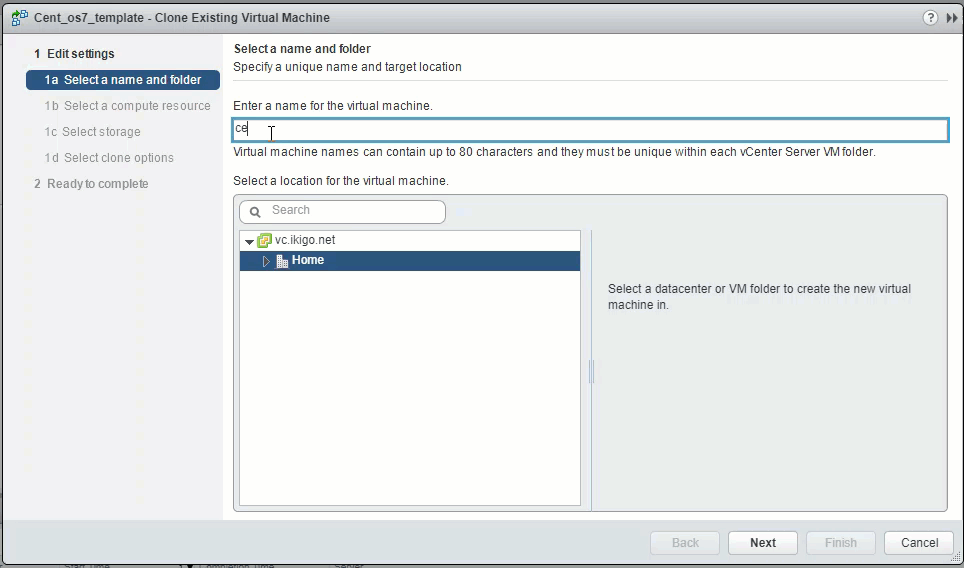

Test the template by deploying a VM with guest customization

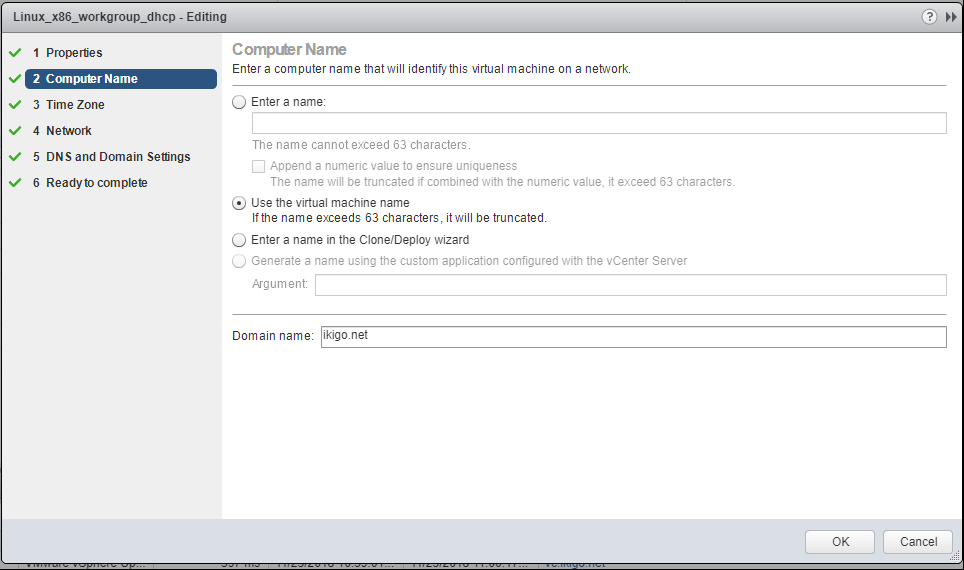

When the VM boots up, you should see the host name set to the name of the VM (the spec that I used for customization uses the vSphere inventory name as the virtual machine's host name).

Looking at the VM that was just deployed, we see the host name has changed as per the specification.

Troubleshooting:

Log file for guest customization:

/var/log/vmware-imc/toolsDeployPkg.log

Enable the complete memory dump feature by changing the following registry keys:

HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\CrashControl

CrashDumpEnabled REG_DWORD 0x1

HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\Session Manager\Memory Management

PagingFiles REG_MULTI_SZ c:\pagefile.sys 13312 13312

Enable the keyboard crash dump feature by adding the following registry keys:

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\i8042prt\Parameters

Value Name: CrashOnCtrlScroll

Data Type: REG_DWORD

Value: 1

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\kbdhid\Parameters

Value Name: CrashOnCtrlScroll

Data Type: REG_DWORD

Value: 1

Enable the NMI crash dump feature by adding the following key:

HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\CrashControl

Value Name: NMICrashDump

Data Type: REG_DWORD

Value: 1

Capture a memory dump in one of the following ways:

o Send an NMI to the guest OS. Link: How to send NMI to Guest OS on ESXi 6.x (2149185)

Or

o On the VM console, hold down the right Ctrl key and press Scroll Lock twice.

The machine crashes to a blue screen, saves a memory dump, and restarts automatically once dump generation reaches 100%. You should see a file of about 12 GB (the memory allocated to the VM) at C:\Windows\MEMORY.DMP.
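The DWORD values listed in this section can be collected into a .reg file instead of being typed in one by one. The Python sketch below generates such a file as text. It leaves out PagingFiles on purpose, since REG_MULTI_SZ needs hex encoding in .reg format and is easier to set by hand. The function and variable names are illustrative.

```python
# (key, value name, DWORD data) taken from the settings listed in this post.
DWORD_SETTINGS = [
    (r"HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\CrashControl",
     "CrashDumpEnabled", 1),
    (r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\i8042prt\Parameters",
     "CrashOnCtrlScroll", 1),
    (r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\kbdhid\Parameters",
     "CrashOnCtrlScroll", 1),
    (r"HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\CrashControl",
     "NMICrashDump", 1),
]


def build_reg_file(settings):
    """Render DWORD settings as the text of a .reg file."""
    grouped = {}
    for key, name, value in settings:
        grouped.setdefault(key, []).append((name, value))  # one section per key
    lines = ["Windows Registry Editor Version 5.00", ""]
    for key, values in grouped.items():
        lines.append(f"[{key}]")
        for name, value in values:
            lines.append(f'"{name}"=dword:{value:08x}')
        lines.append("")
    return "\r\n".join(lines)


if __name__ == "__main__":
    # crashdump.reg is a hypothetical output name; import it inside the guest.
    with open("crashdump.reg", "w", newline="") as handle:
        handle.write(build_reg_file(DWORD_SETTINGS))
```

A reboot of the guest is still needed before the crash dump settings take effect.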

In certain scenarios, ESXi's bootbank, altbootbank, or boot volume goes offline (broken symlinks). If the boot device is still available, it needs to be remounted via the CLI.

To remount the bootbank, run the command below.

localcli --plugin-dir=/usr/lib/vmware/esxcli/int/ boot system restore --bootbanks

To determine whether the boot device is available, work through the steps below:

Determining the boot volume:

[root@AMD-fx:~] ls -ltrh /

total 1161

lrwxrwxrwx 1 root root 49 Mar 24 05:44 store -> /vmfs/volumes/5c963b7e-a90346aa-0102-0007e9b5fb18

lrwxrwxrwx 1 root root 49 Mar 24 05:44 bootbank -> /vmfs/volumes/fbff1b71-b140971e-160d-5c5f543035b8 <------------

lrwxrwxrwx 1 root root 49 Mar 24 05:44 altbootbank -> /vmfs/volumes/29249a7e-c37cdaf0-dbe5-30b1bb5afdd9 <------------

lrwxrwxrwx 1 root root 49 Mar 24 05:44 scratch -> /vmfs/volumes/5c963b87-d1260d20-cc4a-0007e9b5fb18 <-------------

lrwxrwxrwx 1 root root 29 Mar 24 05:44 productLocker -> /locker/packages/vmtoolsRepo/

lrwxrwxrwx 1 root root 6 Mar 24 05:44 locker -> /store

If the symlinks to the UUIDs are not created, look at /var/log/boot.gz to determine why the device was not detected (most likely bad or missing drivers, or a passed-through USB device).
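The symlink check can be automated with a short script. The Python sketch below parses the text of `ls -l /` and reports which of the expected links point at a volume UUID and which are missing. It assumes the 8-8-4-12 UUID layout seen in the listing above. The function name is made up.

```python
import re

# "<name> -> /vmfs/volumes/<uuid>" with the UUID layout seen in the listing.
LINK_RE = re.compile(
    r"(?P<name>\S+) -> /vmfs/volumes/"
    r"(?P<uuid>[0-9a-f]{8}-[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{12})"
)


def boot_volume_uuids(ls_output, names=("bootbank", "altbootbank")):
    """Return ({link name: uuid}, [names with no UUID symlink])."""
    found = {}
    for line in ls_output.splitlines():
        match = LINK_RE.search(line)
        if match and match.group("name") in names:
            found[match.group("name")] = match.group("uuid")
    missing = [name for name in names if name not in found]
    return found, missing


if __name__ == "__main__":
    import sys
    # Usage (hypothetical): ls -l / | python bootlinks.py
    found, missing = boot_volume_uuids(sys.stdin.read())
    print("found:", found)
    print("missing:", missing)
```

Any name reported as missing is a candidate for the remount command shown earlier, provided the boot device itself is online.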

Determine the boot device (use the UUID of bootbank/altbootbank):

[root@AMD-fx:~] vmkfstools -P /vmfs/volumes/fbff1b71-b140971e-160d-5c5f543035b8

vfat-0.04 (Raw Major Version: 0) file system spanning 1 partitions.

File system label (if any):

Mode: private

Capacity 261853184 (63929 file blocks * 4096), 108040192 (26377 blocks) avail, max supported file size 0

Disk Block Size: 512/0/0

UUID: fbff1b71-b140971e-160d-5c5f543035b8

Partitions spanned (on "disks"):

t10.ATA_____HTS721010G9SA00_______________________________MPCZN7Y0GZ452L:5

Is Native Snapshot Capable: NO

Determining if the boot device is available:

[root@AMD-fx:~] esxcli storage core device list -d t10.ATA_____HTS721010G9SA00_______________________________MPCZN7Y0GZ452L

t10.ATA_____HTS721010G9SA00_______________________________MPCZN7Y0GZ452L

Display Name: Local ATA Disk (t10.ATA_____HTS721010G9SA00_______________________________MPCZN7Y0GZ452L)

Has Settable Display Name: true

Size: 95396

Device Type: Direct-Access

Multipath Plugin: NMP

Devfs Path: /vmfs/devices/disks/t10.ATA_____HTS721010G9SA00_______________________________MPCZN7Y0GZ452L

Vendor: ATA

Model: HTS721010G9SA00

Revision: C10H

SCSI Level: 5

Is Pseudo: false

Status: on

Is RDM Capable: false

Is Local: true

Is Removable: false

Is SSD: false

Is VVOL PE: false

Is Offline: false <-------------------------------------------------

Is Perennially Reserved: false

Queue Full Sample Size: 0

Queue Full Threshold: 0

Thin Provisioning Status: unknown

Attached Filters:

VAAI Status: unsupported

Other UIDs: vml.01000000002020202020204d50435a4e375930475a3435324c485453373231

Is Shared Clusterwide: false

Is SAS: false

Is USB: false

Is Boot Device: true

Device Max Queue Depth: 31

No of outstanding IOs with competing worlds: 31

Drive Type: unknown

RAID Level: unknown

Number of Physical Drives: unknown

Protection Enabled: false

PI Activated: false

PI Type: 0

PI Protection Mask: NO PROTECTION

Supported Guard Types: NO GUARD SUPPORT

DIX Enabled: false

DIX Guard Type: NO GUARD SUPPORT

Emulated DIX/DIF Enabled: false
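Rather than reading through the whole listing, the fields of interest can be pulled out with a script. The Python sketch below parses the `esxcli storage core device list -d <device>` output into a dictionary and checks "Is Offline" and "Status". The helper names are illustrative. It expects the raw command output, without the arrow annotation I added above.

```python
def parse_device_fields(esxcli_output):
    """Turn 'Key: value' lines of the device listing into a dict."""
    fields = {}
    for line in esxcli_output.splitlines():
        key, sep, value = line.strip().partition(": ")
        if sep:  # skips the device name line and keys with empty values
            fields[key] = value.strip()
    return fields


def boot_device_usable(fields):
    """True when the device reports as online and not marked offline."""
    return fields.get("Is Offline") == "false" and fields.get("Status") == "on"


if __name__ == "__main__":
    import sys
    # Usage (hypothetical): esxcli storage core device list -d <device> | python dev.py
    info = parse_device_fields(sys.stdin.read())
    print("boot device:", info.get("Is Boot Device"))
    print("usable:", boot_device_usable(info))
```

If this reports the device as usable while the symlinks are missing, the remount command is worth trying before a reboot.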

The other day, I had a customer whose management applications were all on a different DNS suffix from that of the domain.

I.e.: Domain: ntitta.in

Management hosts: on mgmt.local

On the customer's setup, the VCSA was deployed with the FQDN VCSA.MGMT.local. However, when the appliance was added to the domain ntitta.in, the VCSA renamed itself to VCSA.ntitta.in.

Apparently, the Likewise scripts on the VCSA are set to rename the appliance to match the domain suffix. This can cause all sorts of strange behaviour and PNID mismatches in normal operation.

To set this right, we want to invoke the domain join script so that it ignores the hostname.

/opt/likewise/bin/domainjoin-cli join --disable hostname domain_name domain_user

Example:

root@vcsa [ ~ ]# /opt/likewise/bin/domainjoin-cli join --disable hostname ntitta.in nik

Joining to AD Domain: ntitta.in

With Computer DNS Name: vcsa.mgmt.local

Note that the script acknowledges that it is going to join AD with the computer name vcsa.mgmt.local. This is precisely what we want.

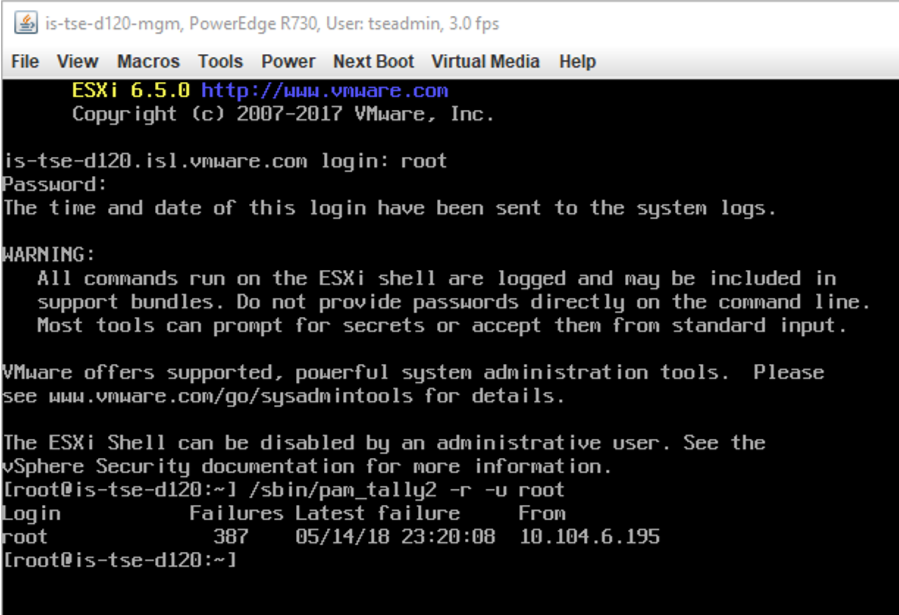

Generally, should the root account be locked out, SSH and UI/client access to the host fail. To work around this:

The root account should now be unlocked. Review the IP address listed there to stop the source of the failed logons (scripted or third-party monitoring).

Syntax:

ovftool -ds="datastore" -n="VM_Name" --net:"VM_old_Network"="VM Network" c:\path_to_ovf\file.ovf vi://[email protected]:password@vCenter_server_name/?ip=Esxi_Host_IP

Example:

ovftool -ds="datastore" -n="VMName" --net:"VM Network"="VM Network" C:\Users\ntitta\Downloads\myth.ovf vi://[email protected]:P@[email protected]/?ip=10.109.10.120

Note:

VM Network = the VM network of the OVF at the time of export
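Passwords or user names containing characters such as @ tend to trip up the vi:// locator. As far as I know, ovftool expects those characters to be percent-encoded. The Python sketch below builds the argument list for the syntax above and encodes the credentials. The function name and every value in the usage section are placeholders, not real credentials or hosts.

```python
from urllib.parse import quote


def build_ovftool_args(ovf_path, datastore, vm_name, source_net, target_net,
                       user, password, vcenter, esxi_ip):
    """Build an ovftool argument list with percent-encoded credentials."""
    locator = "vi://{}:{}@{}/?ip={}".format(
        quote(user, safe=""),      # e.g. '@' becomes '%40'
        quote(password, safe=""),
        vcenter,
        esxi_ip,
    )
    return [
        "ovftool",
        f"-ds={datastore}",
        f"-n={vm_name}",
        f"--net:{source_net}={target_net}",
        ovf_path,
        locator,
    ]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    args = build_ovftool_args(
        r"C:\path_to_ovf\file.ovf", "datastore", "VM_Name",
        "VM Network", "VM Network",
        "user@example.local", "P@ssword", "vcenter.example.local", "192.0.2.10",
    )
    print(args)  # pass to subprocess.run(args, check=True) to deploy
```

Passing the list straight to subprocess.run avoids shell quoting problems with the spaces in network names.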

If you are not sure of the original network, run the command below to query it:

C:\Program Files\VMware\VMware OVF Tool>ovftool C:\Users\ntitta\Downloads\myth.ovf

Output:

OVF version: 1.0

VirtualApp: false

Name: myth

Download Size: Unknown

Deployment Sizes:

Flat disks: 16.00 GB

Sparse disks: Unknown

Networks:

Name: VM Network

Description: The VM Network network

Virtual Machines:

Name: myth

Operating System: ubuntu64guest

Virtual Hardware:

Families: vmx-13

Number of CPUs: 2

Cores per socket: 1

Memory: 1024.00 MB

Disks:

Index: 0

Instance ID: 5

Capacity: 16.00 GB

Disk Types: SCSI-lsilogic

NICs:

Adapter Type: VmxNet3

Connection: VM Network
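To pick the source network name out of the probe output automatically, something like the Python sketch below could be used. It reads the text printed by running ovftool against the .ovf and returns the names listed under "Networks:". It assumes section headers are lines that end with a colon and carry no value, as in the output above. The function name is hypothetical.

```python
def ovf_network_names(probe_output):
    """Return the network names listed in the 'Networks:' section."""
    names = []
    in_networks = False
    for raw in probe_output.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.endswith(":"):
            # A bare "Something:" line starts a new section.
            in_networks = (line == "Networks:")
            continue
        if in_networks and line.startswith("Name:"):
            names.append(line[len("Name:"):].strip())
    return names


if __name__ == "__main__":
    import sys
    # Usage (hypothetical): ovftool C:\path\file.ovf | python nets.py
    print(ovf_network_names(sys.stdin.read()))
```

The names returned are what goes on the left-hand side of the --net mapping.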

Link to download OVFtool https://my.vmware.com/web/vmware/details?productId=614&downloadGroup=OVFTOOL420