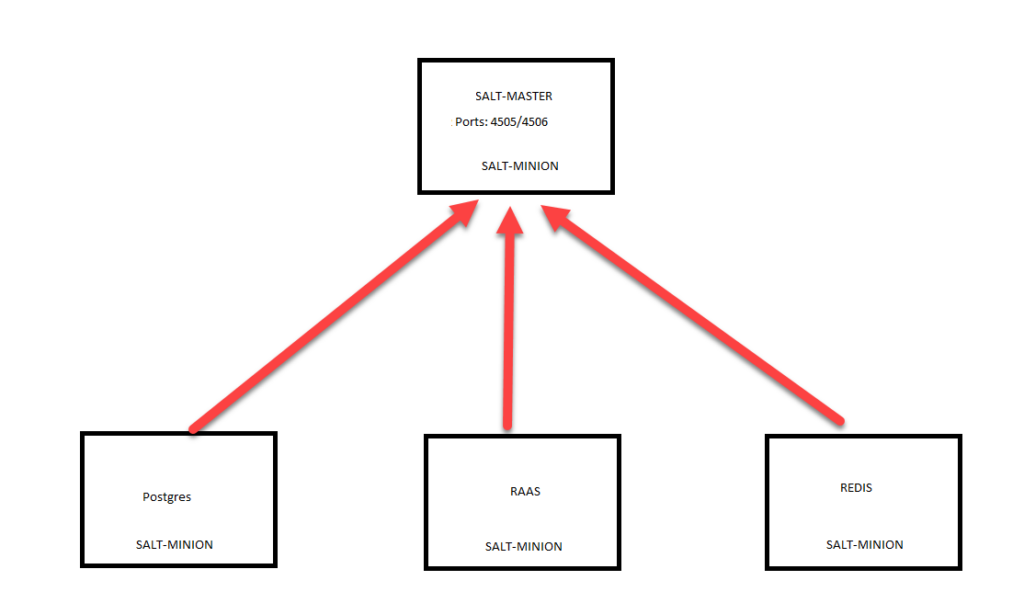

When troubleshooting a minion deployment failure, I recommend commenting out (hashing out) the Salt part of the blueprint and running it as a day-2 task instead. This saves significant deployment time and lets you focus on the minion deployment issue alone.

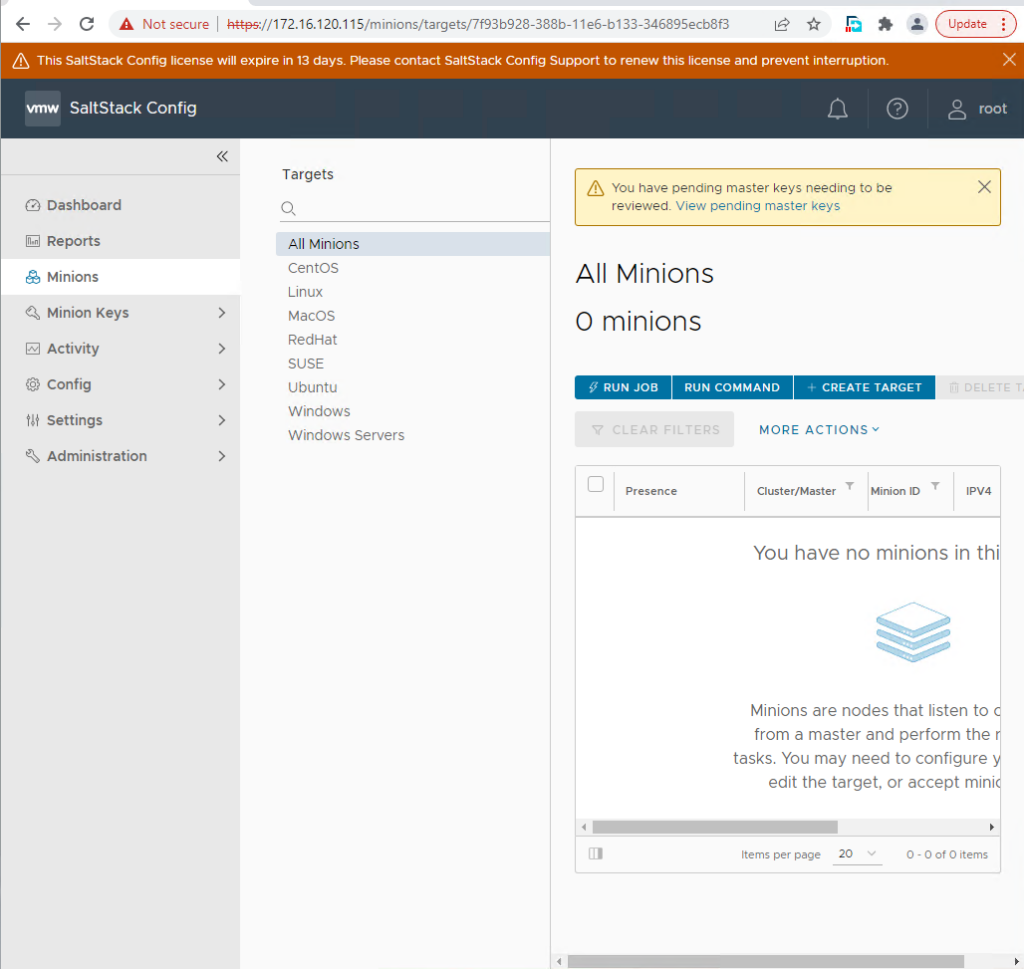

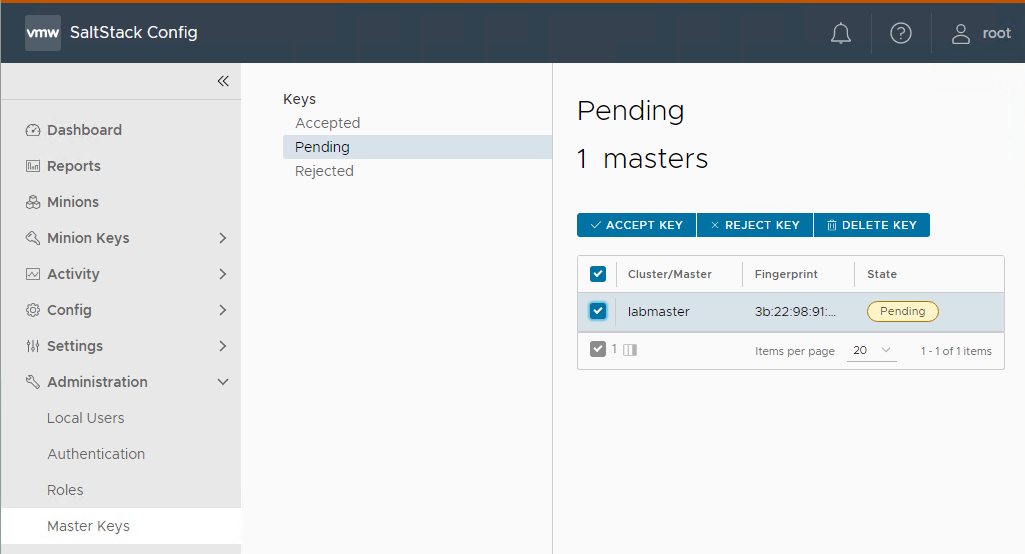

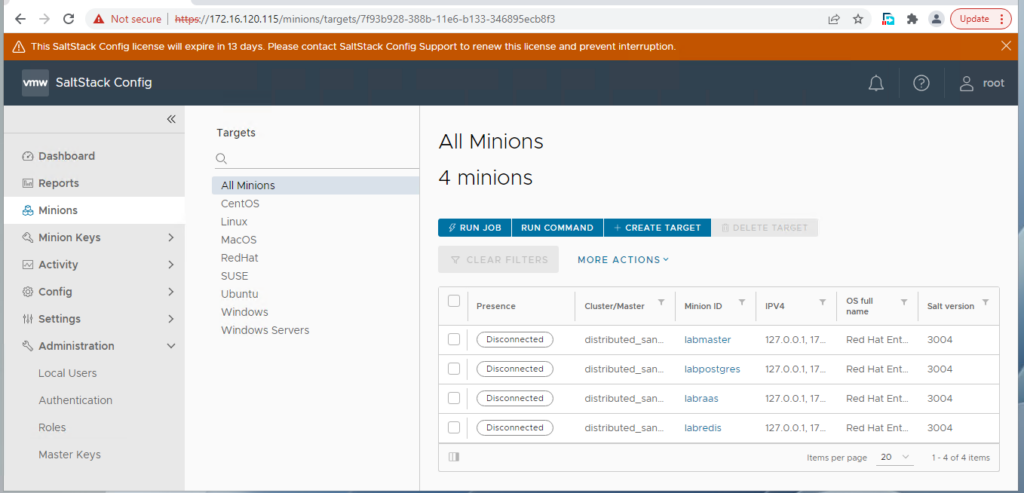

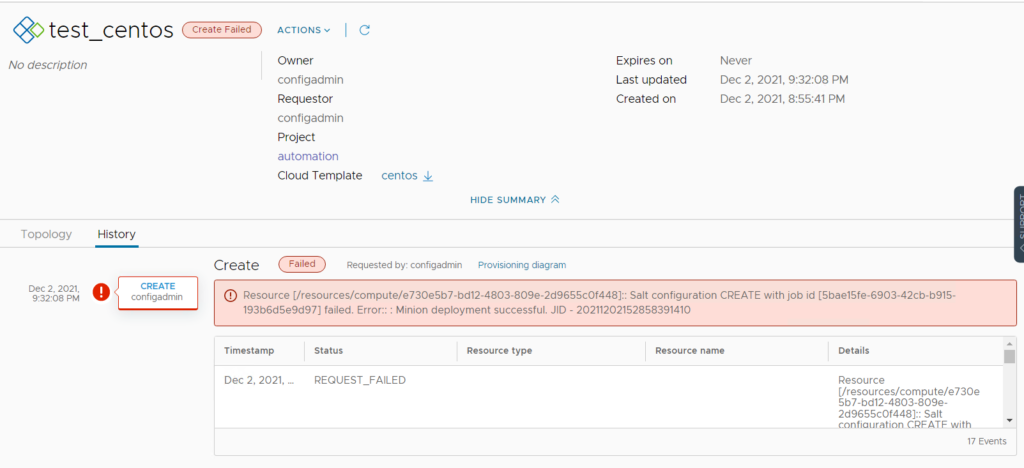

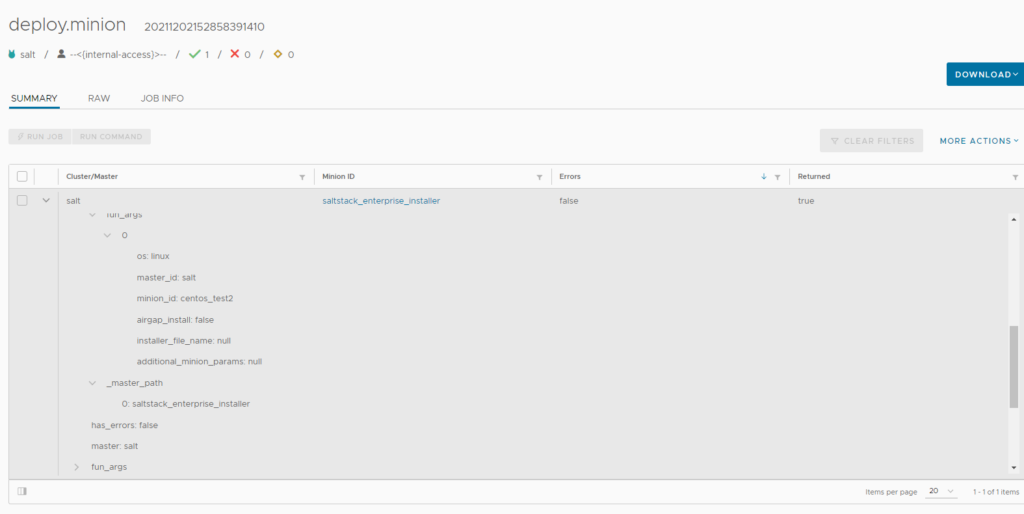

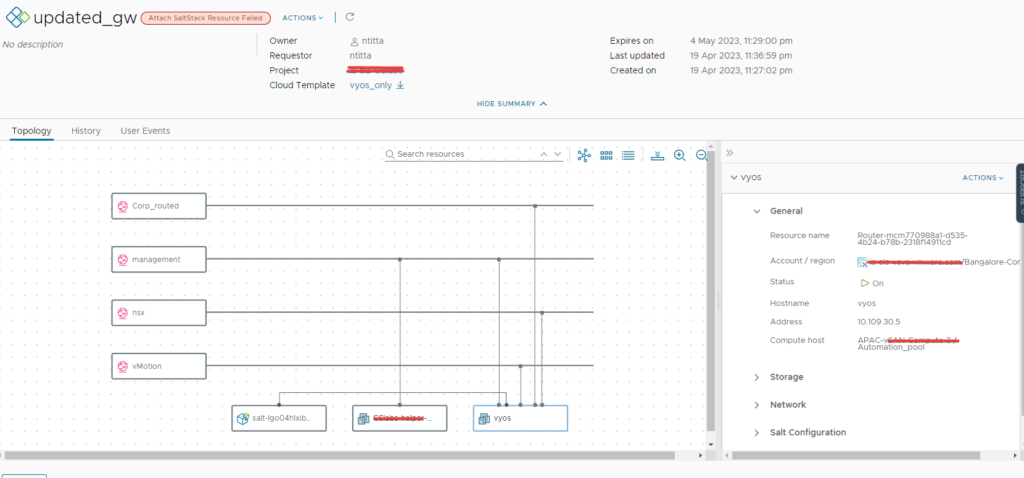

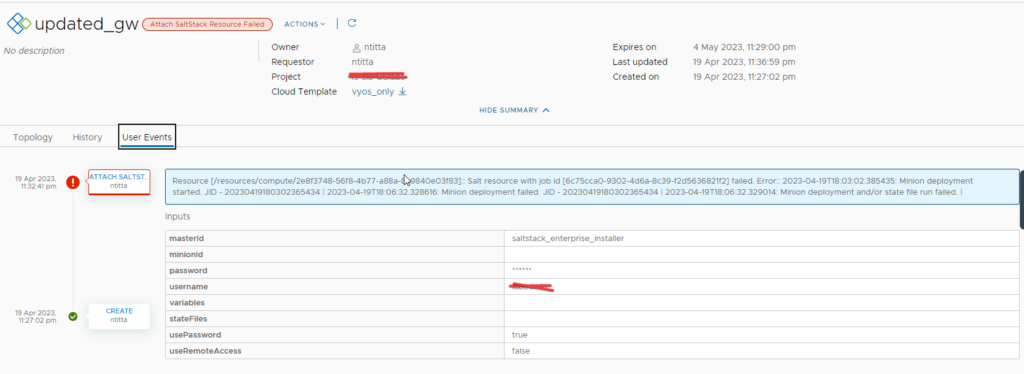

In my scenario, I finished the deployment and then ran the Salt configuration as a day-2 task, which failed:

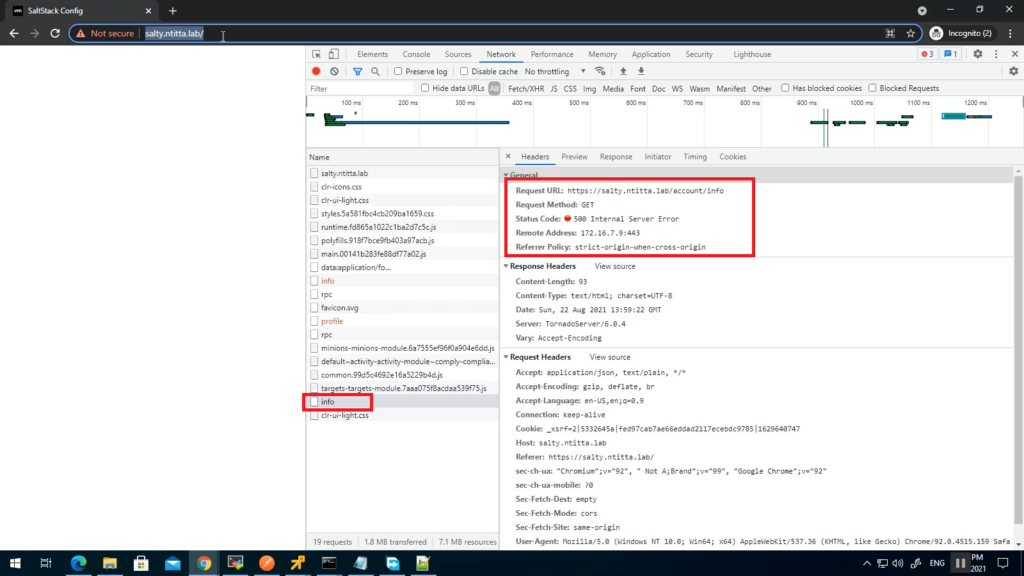

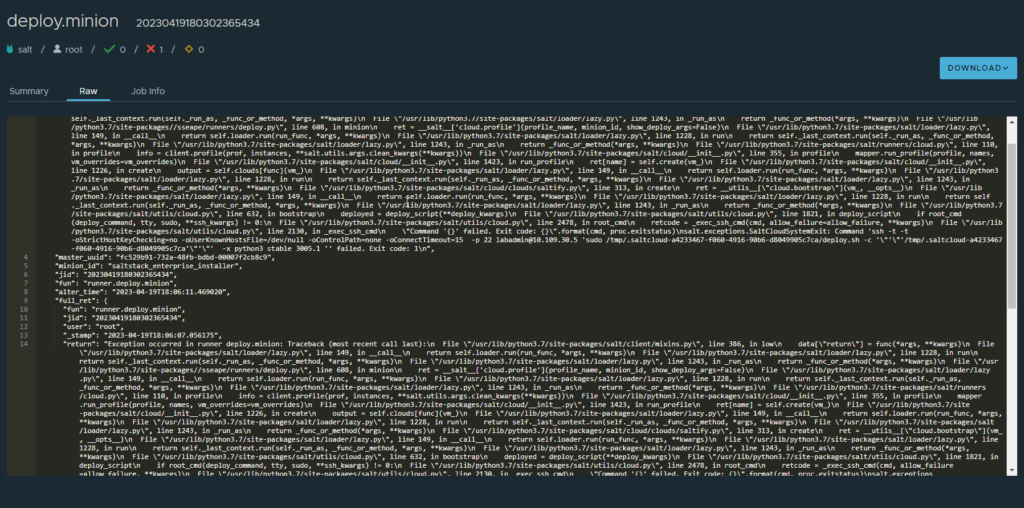

In the Aria Config (salt-config) web UI, navigate to Activity > Jobs > Completed, look for a deploy.minion job, click its JID (the long number on the right side of the job table), and then click Raw:

This tells us that the script being executed failed, hence the “Exit code: 1”.

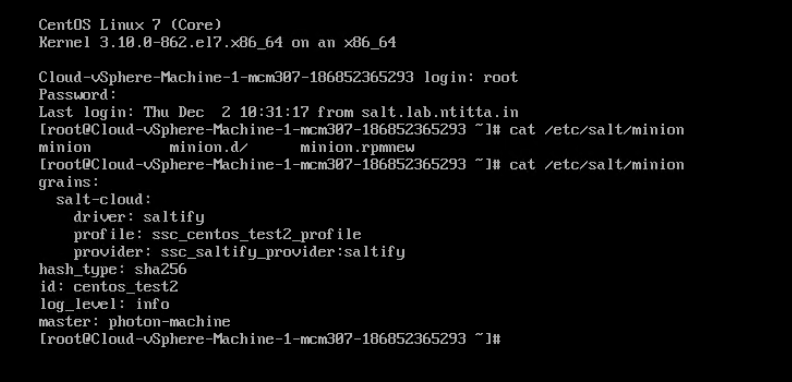

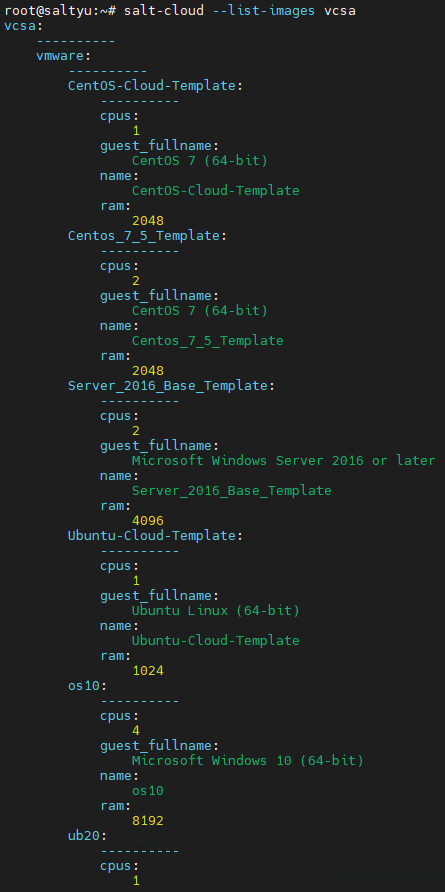

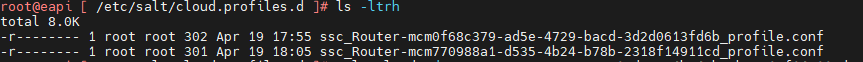

SSH to the Salt master and navigate to /etc/salt/cloud.profiles.d. You should see a .conf file with the same name as the vRA deployment. In my case, it was the second one in the screenshot below.

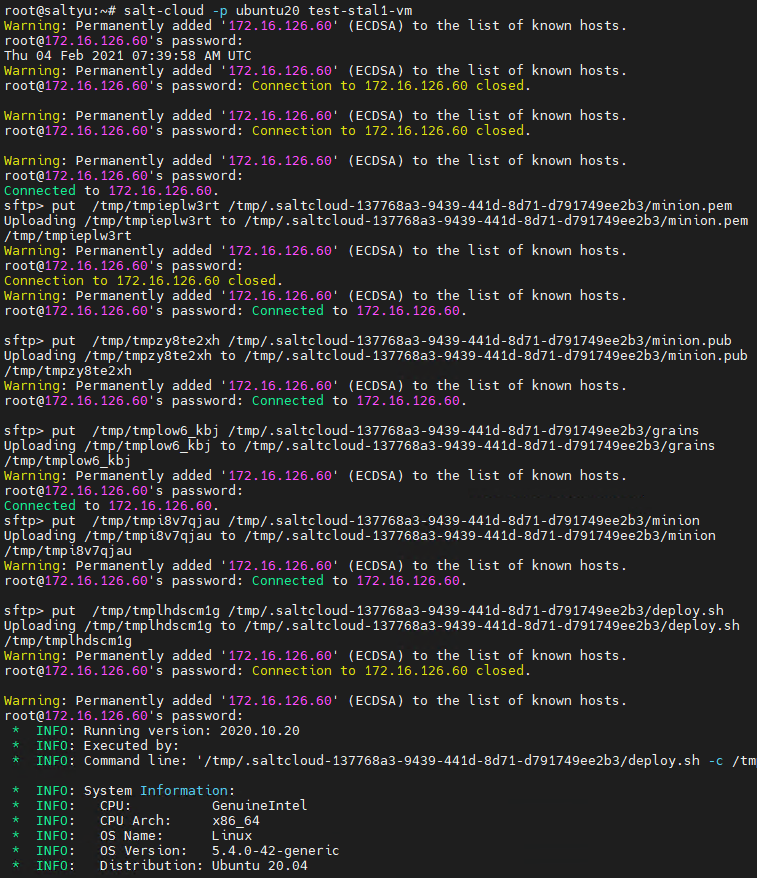
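If the directory holds many profiles, a small helper can list the names that salt-cloud will accept. This is only a sketch: `list_cloud_profiles` is a hypothetical function name, and it assumes (as in my example) that the profile name is simply the file name without the .conf extension.

```shell
# List candidate salt-cloud profile names found in a profiles directory.
# Assumption: each profile name equals its file name minus ".conf".
list_cloud_profiles() {
    profiles_dir="${1:-/etc/salt/cloud.profiles.d}"
    for conf in "$profiles_dir"/*.conf; do
        # Skip the unexpanded pattern when the directory has no .conf files.
        [ -e "$conf" ] || continue
        basename "$conf" .conf
    done
}
```

Calling `list_cloud_profiles` with no argument reads /etc/salt/cloud.profiles.d; piping the result through `grep` with part of the deployment name narrows it down quickly.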

At this stage, you can manually call salt-cloud with the debug flag so that you get real-time logging as the script attempts to connect to the remote host and bootstrap the minion.

The basic syntax is:

salt-cloud -p profile_name VM_name -l debug

In my case:

salt-cloud -p ssc_Router-mcm770988a1-d535-4b24-b78b-2318f14911cd_profile test -l debug

Note: do not include the .conf extension in the profile name. The VM_name can be anything; it does not matter in this scenario.
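To avoid typos in the long profile name, the command can be generated from the .conf path. The helper below is a rough sketch, not part of salt-cloud: `build_debug_command` is a hypothetical name, and it again assumes the profile name is the file name without .conf. It only prints the command, so you can review it before running it.

```shell
# Print the salt-cloud debug command for a given profile .conf file.
# The VM name is arbitrary for this test and defaults to "test".
build_debug_command() {
    conf_file="$1"
    vm_name="${2:-test}"
    # Strip the directory and the ".conf" extension to get the profile name.
    profile_name="$(basename "$conf_file" .conf)"
    printf 'salt-cloud -p %s %s -l debug\n' "$profile_name" "$vm_name"
}
```

When you run the printed command, appending `2>&1 | tee /tmp/salt-cloud-debug.log` (the log path is just an example) keeps a copy of the debug output for later review.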

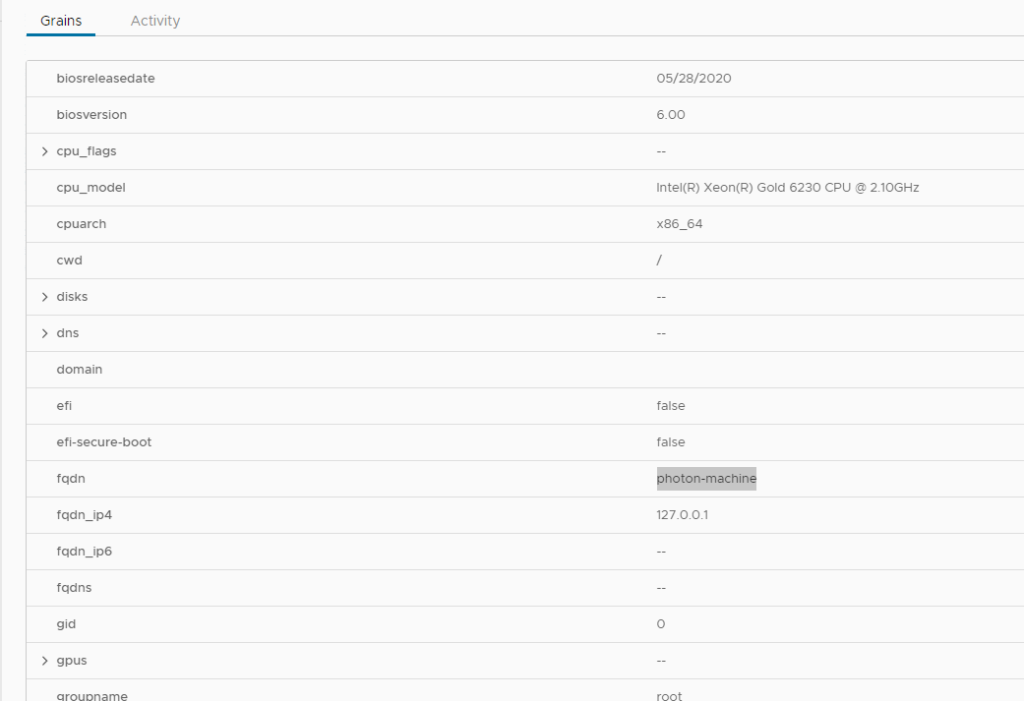

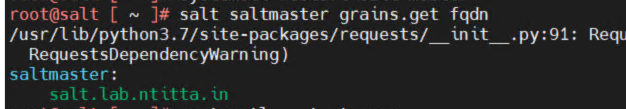

Typically, you want to look at the very end of the output for the errors. In my case, it was bad DNS.

[email protected]'s password: [DEBUG ] [email protected]'s password:

[sudo] password for labadmin: [DEBUG ] [sudo] password for labadmin:

* INFO: Running version: 2022.08.12

* INFO: Executed by: /bin/sh

* INFO: Command line: '/tmp/.saltcloud-3e1d4338-c7d1-4dbb-8596-de0d6bf587ec/deploy.sh -c /tmp/.saltcloud-3e1d4338-c7d1-4dbb-8596-de0d6bf587ec -x python3 stable 3005.1'

* WARN: Running the unstable version of bootstrap-salt.sh

* INFO: System Information:

* INFO: CPU: AuthenticAMD

* INFO: CPU Arch: x86_64

* INFO: OS Name: Linux

* INFO: OS Version: 5.15.0-69-generic

* INFO: Distribution: Ubuntu 22.04

* INFO: Installing minion

* INFO: Found function install_ubuntu_stable_deps

* INFO: Found function config_salt

* INFO: Found function preseed_master

* INFO: Found function install_ubuntu_stable

* INFO: Found function install_ubuntu_stable_post

* INFO: Found function install_ubuntu_res[DEBUG ] * INFO: Running version: 2022.08.12

* INFO: Executed by: /bin/sh

* INFO: Command line: '/tmp/.saltcloud-3e1d4338-c7d1-4dbb-8596-de0d6bf587ec/deploy.sh -c /tmp/.saltcloud-3e1d4338-c7d1-4dbb-8596-de0d6bf587ec -x python3 stable 3005.1'

* WARN: Running the unstable version of bootstrap-salt.sh

* INFO: System Information:

* INFO: CPU: AuthenticAMD

* INFO: CPU Arch: x86_64

* INFO: OS Name: Linux

* INFO: OS Version: 5.15.0-69-generic

* INFO: Distribution: Ubuntu 22.04

* INFO: Installing minion

* INFO: Found function install_ubuntu_stable_deps

* INFO: Found function config_salt

* INFO: Found function preseed_master

* INFO: Found function install_ubuntu_stable

* INFO: Found function install_ubuntu_stable_post

* INFO: Found function install_ubuntu_res

tart_daemons

* INFO: Found function daemons_running

* INFO: Found function install_ubuntu_check_services

* INFO: Running install_ubuntu_stable_deps()

Ign:1 http://in.archive.ubuntu.com/ubuntu jammy InRelease

Ign:2 https://packages.microsoft.com/ubuntu/22.04/prod jammy InRelease

Ign:3 https://repo.saltproject.io/py3/ubuntu/20.04/amd64/archive/3005.1 focal InRelease

Ign:4 http://in.archive.ubuntu.com/ubuntu jammy-updates InRelease

Ign:5 http://in.archive.ubuntu.com/ubuntu jammy-backports InRelease

Ign:6 http://in.archive.ubuntu.com/ubuntu jammy-security InRelease

[DEBUG ] tart_daemons

* INFO: Found function daemons_running

* INFO: Found function install_ubuntu_check_services

* INFO: Running install_ubuntu_stable_deps()

Ign:1 http://in.archive.ubuntu.com/ubuntu jammy InRelease

Ign:2 https://packages.microsoft.com/ubuntu/22.04/prod jammy InRelease

Ign:3 https://repo.saltproject.io/py3/ubuntu/20.04/amd64/archive/3005.1 focal InRelease

Ign:4 http://in.archive.ubuntu.com/ubuntu jammy-updates InRelease

Ign:5 http://in.archive.ubuntu.com/ubuntu jammy-backports InRelease

Ign:6 http://in.archive.ubuntu.com/ubuntu jammy-security InRelease

Ign:1 http://in.archive.ubuntu.com/ubuntu jammy InRelease

Ign:2 https://packages.microsoft.com/ubuntu/22.04/prod jammy InRelease

Ign:3 https://repo.saltproject.io/py3/ubuntu/20.04/amd64/archive/3005.1 focal InRelease

Ign:4 http://in.archive.ubuntu.com/ubuntu jammy-updates InRelease

Ign:5 http://in.archive.ubuntu.com/ubuntu jammy-backports InRelease

Ign:6 http://in.archive.ubuntu.com/ubuntu jammy-security InRelease

[DEBUG ] Ign:1 http://in.archive.ubuntu.com/ubuntu jammy InRelease

Ign:2 https://packages.microsoft.com/ubuntu/22.04/prod jammy InRelease

Ign:3 https://repo.saltproject.io/py3/ubuntu/20.04/amd64/archive/3005.1 focal InRelease

Ign:4 http://in.archive.ubuntu.com/ubuntu jammy-updates InRelease

Ign:5 http://in.archive.ubuntu.com/ubuntu jammy-backports InRelease

Ign:6 http://in.archive.ubuntu.com/ubuntu jammy-security InRelease

Ign:1 http://in.archive.ubuntu.com/ubuntu jammy InRelease

Ign:2 https://packages.microsoft.com/ubuntu/22.04/prod jammy InRelease

Ign:3 https://repo.saltproject.io/py3/ubuntu/20.04/amd64/archive/3005.1 focal InRelease

Ign:4 http://in.archive.ubuntu.com/ubuntu jammy-updates InRelease

Ign:5 http://in.archive.ubuntu.com/ubuntu jammy-backports InRelease

Ign:6 http://in.archive.ubuntu.com/ubuntu jammy-security InRelease

[DEBUG ] Ign:1 http://in.archive.ubuntu.com/ubuntu jammy InRelease

Ign:2 https://packages.microsoft.com/ubuntu/22.04/prod jammy InRelease

Ign:3 https://repo.saltproject.io/py3/ubuntu/20.04/amd64/archive/3005.1 focal InRelease

Ign:4 http://in.archive.ubuntu.com/ubuntu jammy-updates InRelease

Ign:5 http://in.archive.ubuntu.com/ubuntu jammy-backports InRelease

Ign:6 http://in.archive.ubuntu.com/ubuntu jammy-security InRelease

Err:1 http://in.archive.ubuntu.com/ubuntu jammy InRelease

Temporary failure resolving 'in.archive.ubuntu.com'

Err:3 https://repo.saltproject.io/py3/ubuntu/20.04/amd64/archive/3005.1 focal InRelease

Temporary failure resolving 'repo.saltproject.io'

Err:2 https://packages.microsoft.com/ubuntu/22.04/prod jammy InRelease

Temporary failure resolving 'packages.microsoft.com'

Err:4 http://in.archive.ubuntu.com/ubuntu jammy-updates InRelease

Temporary failure resolving 'in.archive.ubuntu.com'

Err:5 http://in.archive.ubuntu.com/ubuntu jammy-backports InRelease

Temporary failure resolving 'in.archive.ubuntu.com'

Err:6 http://in.archive.ubuntu.com/ubuntu jammy-security InRelease

Temporary failure resolving 'in.archive.ubuntu.com'

Reading package lists...[DEBUG ] Err:1 http://in.archive.ubuntu.com/ubuntu jammy InRelease

Temporary failure resolving 'in.archive.ubuntu.com'

Err:3 https://repo.saltproject.io/py3/ubuntu/20.04/amd64/archive/3005.1 focal InRelease

Temporary failure resolving 'repo.saltproject.io'

Err:2 https://packages.microsoft.com/ubuntu/22.04/prod jammy InRelease

Temporary failure resolving 'packages.microsoft.com'

Err:4 http://in.archive.ubuntu.com/ubuntu jammy-updates InRelease

Temporary failure resolving 'in.archive.ubuntu.com'

Err:5 http://in.archive.ubuntu.com/ubuntu jammy-backports InRelease

Temporary failure resolving 'in.archive.ubuntu.com'

Err:6 http://in.archive.ubuntu.com/ubuntu jammy-security InRelease

Temporary failure resolving 'in.archive.ubuntu.com'

Reading package lists...

Connection to 10.109.30.5 closed.

[DEBUG ] Connection to 10.109.30.5 closed.

* WARN: Non-LTS Ubuntu detected, but stable packages requested. Trying packages for previous LTS release. You may experience problems.

Reading package lists...

Building dependency tree...

Reading state information...

wget is already the newest version (1.21.2-2ubuntu1).

ca-certificates is already the newest version (20211016ubuntu0.22.04.1).

gnupg is already the newest version (2.2.27-3ubuntu2.1).

apt-transport-https is already the newest version (2.4.8).

The following packages were automatically installed and are no longer required:

eatmydata libeatmydata1 python3-json-pointer python3-jsonpatch

Use 'sudo apt autoremove' to remove them.

0 upgraded, 0 newly installed, 0 to remove and 62 not upgraded.

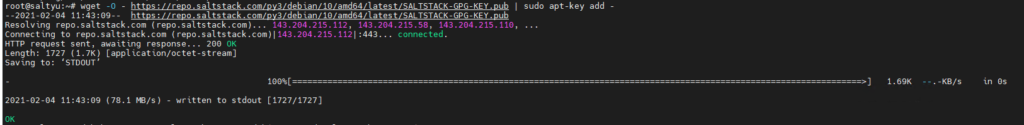

* ERROR: https://repo.saltproject.io/py3/ubuntu/20.04/amd64/archive/3005.1/salt-archive-keyring.gpg failed to download to /tmp/salt-gpg-UclYVAky.pub

* ERROR: Failed to run install_ubuntu_stable_deps()!!!

[DEBUG ] * WARN: Non-LTS Ubuntu detected, but stable packages requested. Trying packages for previous LTS release. You may experience problems.

Reading package lists...

Building dependency tree...

Reading state information...

wget is already the newest version (1.21.2-2ubuntu1).

ca-certificates is already the newest version (20211016ubuntu0.22.04.1).

gnupg is already the newest version (2.2.27-3ubuntu2.1).

apt-transport-https is already the newest version (2.4.8).

The following packages were automatically installed and are no longer required:

eatmydata libeatmydata1 python3-json-pointer python3-jsonpatch

Use 'sudo apt autoremove' to remove them.

0 upgraded, 0 newly installed, 0 to remove and 62 not upgraded.

* ERROR: https://repo.saltproject.io/py3/ubuntu/20.04/amd64/archive/3005.1/salt-archive-keyring.gpg failed to download to /tmp/salt-gpg-UclYVAky.pub

* ERROR: Failed to run install_ubuntu_stable_deps()!!!
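Because the debug output repeats most lines, it can help to boil a saved log down to the bootstrap errors and the host names that failed to resolve. The following is a minimal sketch, assuming the output was saved to a file; `summarize_bootstrap_errors` is a hypothetical helper name, and the patterns are taken from the messages shown in the output above.

```shell
# Summarise a saved salt-cloud debug log: bootstrap errors and unresolved hosts.
# Assumption: the debug output was captured to a file beforehand.
summarize_bootstrap_errors() {
    log_file="$1"
    echo "Bootstrap errors:"
    # Normalise the prefix so the raw line and its [DEBUG ] echo collapse into one.
    grep -F '* ERROR:' "$log_file" | sed 's/^.*\* ERROR: */ERROR: /' | sort -u
    echo "Unresolved hosts:"
    # Extract the quoted host name from each apt resolver failure.
    grep -F 'Temporary failure resolving' "$log_file" \
        | sed "s/.*resolving '\([^']*\)'.*/\1/" | sort -u
}
```

A non-empty "Unresolved hosts" list points at DNS on the target VM, which was the root cause in my case.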

The same approach works for troubleshooting Windows minion deployments too!