Topology

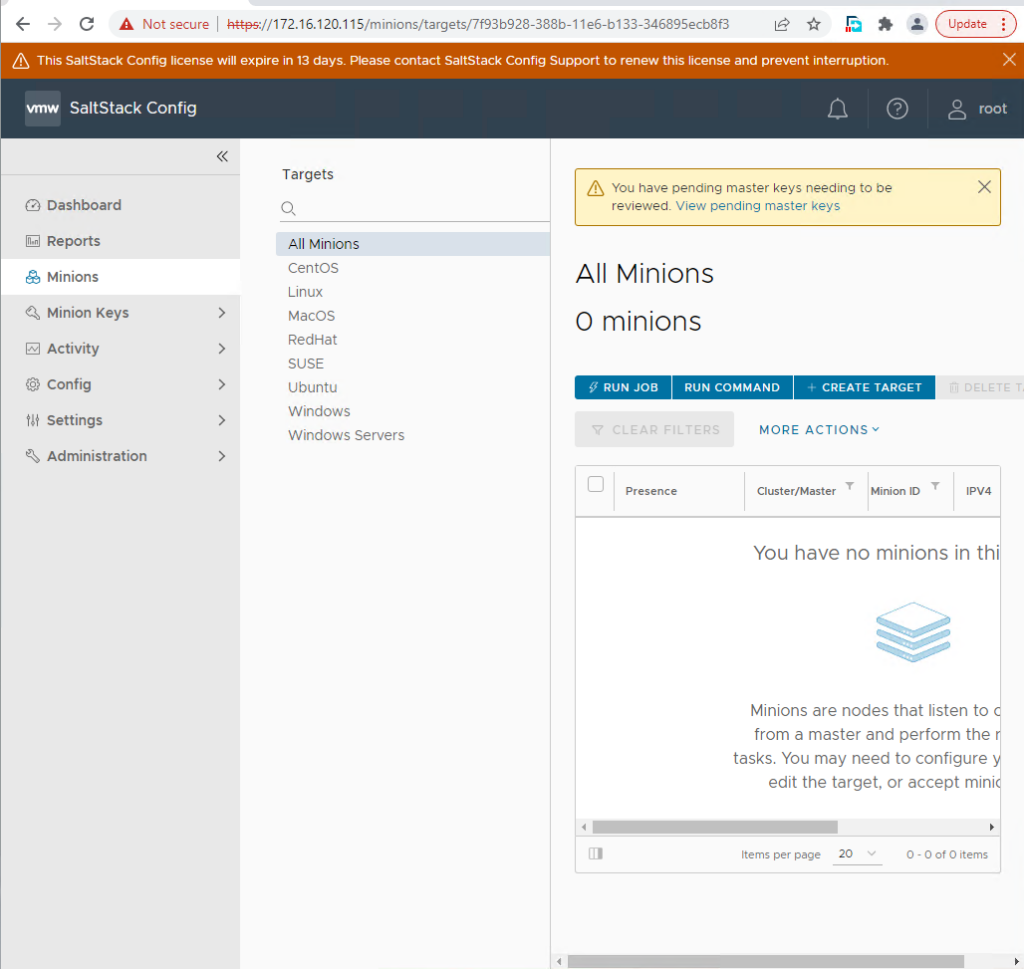

Prerequisites:

You must have a working salt-master, with minions installed on the Redis, Postgres, and RAAS instances. Refer to SaltConfig Multi-Node scripted Deployment Part-1.
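Before going further, it can be worth confirming that the master has accepted the keys of every node. The function below is a minimal sketch that reads the plain-text output of salt-key -L on stdin and prints any expected minion ID that is not listed under "Accepted Keys:". The function name is hypothetical, and it assumes salt-key's default layout: section headers ending in "Keys:", each followed by one minion ID per line.

```shell
# Print every expected minion ID missing from the "Accepted Keys:" section
# of salt-key output read on stdin. Prints nothing if all are accepted.
# Usage: salt-key -L | missing_minions labmaster labpostgres labraas labredis
missing_minions() {
    # Collect only the IDs listed under "Accepted Keys:".
    accepted=$(awk '
        /Keys:$/ { section = $0; next }   # section headers end in "Keys:"
        section == "Accepted Keys:" && NF { print $1 }
    ')
    for id in "$@"; do
        # -x: match the whole line, -F: treat the ID as a fixed string
        if ! printf '%s\n' "$accepted" | grep -qxF "$id"; then
            printf '%s\n' "$id"
        fi
    done
}
```

Empty output means all four keys are accepted; salt '*' test.ping is a quick follow-up to confirm the minions actually respond.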

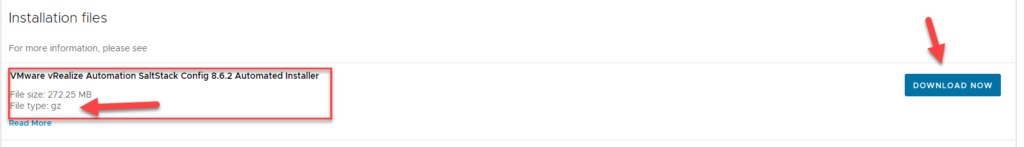

Download the SaltConfig automated installer (.tar.gz) from https://customerconnect.vmware.com/downloads/details?downloadGroup=VRA-SSC-862&productId=1206&rPId=80829

Extract the archive and copy the files to the salt-master. In my case, I placed them in the /root directory.

The automated/scripted installer needs additional packages. You will need to install the components below on all the machines:

- openssl (typically installed at this point)

- epel-release

- python36-cryptography

- python36-pyOpenSSL

Install epel-release

Note: on CentOS you can install most of the above using yum install packagename; on Red Hat, however, you will need to install the epel-release RPM manually:

sudo yum install https://repo.ius.io/ius-release-el7.rpm https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm -y
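The CentOS/Red Hat distinction in the note above can be scripted. This is a minimal sketch, assuming EL7 hosts whose /etc/os-release sets ID to centos or rhel; the function name is hypothetical. It only prints the matching EPEL install command rather than running it, so it is safe to try on any node.

```shell
# Print the EPEL install command suited to this host's distribution.
# Assumes EL7: CentOS ships epel-release in its own repos, RHEL does not.
# Usage: epel_install_cmd                  # reads /etc/os-release
#        epel_install_cmd /path/to/os-release
epel_install_cmd() {
    release_file=${1:-/etc/os-release}
    # os-release is KEY=value shell syntax; source it in a subshell so its
    # variables do not leak into the caller, and print only ID.
    distro=$( . "$release_file" && printf '%s' "$ID" )
    case $distro in
        centos) echo "yum install -y epel-release" ;;
        rhel)   echo "yum install -y https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm" ;;
        *)      echo "unsupported distro: $distro" >&2; return 1 ;;
    esac
}
```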

Since the packages need to be installed on all nodes, I will use Salt to run the command on all of them:

salt '*' cmd.run "sudo yum install https://repo.ius.io/ius-release-el7.rpm https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm -y"

Sample output:

[root@labmaster ~]# salt '*' cmd.run "sudo yum install https://repo.ius.io/ius-release-el7.rpm https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm -y"

labpostgres:

Loaded plugins: product-id, search-disabled-repos, subscription-manager

Examining /var/tmp/yum-root-VBGG1c/ius-release-el7.rpm: ius-release-2-1.el7.ius.noarch

Marking /var/tmp/yum-root-VBGG1c/ius-release-el7.rpm to be installed

Examining /var/tmp/yum-root-VBGG1c/epel-release-latest-7.noarch.rpm: epel-release-7-14.noarch

Marking /var/tmp/yum-root-VBGG1c/epel-release-latest-7.noarch.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package epel-release.noarch 0:7-14 will be installed

---> Package ius-release.noarch 0:2-1.el7.ius will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

epel-release noarch 7-14 /epel-release-latest-7.noarch 25 k

ius-release noarch 2-1.el7.ius /ius-release-el7 4.5 k

Transaction Summary

================================================================================

Install 2 Packages

Total size: 30 k

Installed size: 30 k

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : epel-release-7-14.noarch 1/2

Installing : ius-release-2-1.el7.ius.noarch 2/2

Verifying : epel-release-7-14.noarch 1/2

Verifying : ius-release-2-1.el7.ius.noarch 2/2

Installed:

epel-release.noarch 0:7-14 ius-release.noarch 0:2-1.el7.ius

Complete!

labmaster:

Loaded plugins: product-id, search-disabled-repos, subscription-manager

Examining /var/tmp/yum-root-ALBF1m/ius-release-el7.rpm: ius-release-2-1.el7.ius.noarch

Marking /var/tmp/yum-root-ALBF1m/ius-release-el7.rpm to be installed

Examining /var/tmp/yum-root-ALBF1m/epel-release-latest-7.noarch.rpm: epel-release-7-14.noarch

Marking /var/tmp/yum-root-ALBF1m/epel-release-latest-7.noarch.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package epel-release.noarch 0:7-14 will be installed

---> Package ius-release.noarch 0:2-1.el7.ius will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

epel-release noarch 7-14 /epel-release-latest-7.noarch 25 k

ius-release noarch 2-1.el7.ius /ius-release-el7 4.5 k

Transaction Summary

================================================================================

Install 2 Packages

Total size: 30 k

Installed size: 30 k

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : epel-release-7-14.noarch 1/2

Installing : ius-release-2-1.el7.ius.noarch 2/2

Verifying : epel-release-7-14.noarch 1/2

Verifying : ius-release-2-1.el7.ius.noarch 2/2

Installed:

epel-release.noarch 0:7-14 ius-release.noarch 0:2-1.el7.ius

Complete!

labredis:

Loaded plugins: product-id, search-disabled-repos, subscription-manager

Examining /var/tmp/yum-root-QKzOF1/ius-release-el7.rpm: ius-release-2-1.el7.ius.noarch

Marking /var/tmp/yum-root-QKzOF1/ius-release-el7.rpm to be installed

Examining /var/tmp/yum-root-QKzOF1/epel-release-latest-7.noarch.rpm: epel-release-7-14.noarch

Marking /var/tmp/yum-root-QKzOF1/epel-release-latest-7.noarch.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package epel-release.noarch 0:7-14 will be installed

---> Package ius-release.noarch 0:2-1.el7.ius will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

epel-release noarch 7-14 /epel-release-latest-7.noarch 25 k

ius-release noarch 2-1.el7.ius /ius-release-el7 4.5 k

Transaction Summary

================================================================================

Install 2 Packages

Total size: 30 k

Installed size: 30 k

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : epel-release-7-14.noarch 1/2

Installing : ius-release-2-1.el7.ius.noarch 2/2

Verifying : epel-release-7-14.noarch 1/2

Verifying : ius-release-2-1.el7.ius.noarch 2/2

Installed:

epel-release.noarch 0:7-14 ius-release.noarch 0:2-1.el7.ius

Complete!

labraas:

Loaded plugins: product-id, search-disabled-repos, subscription-manager

Examining /var/tmp/yum-root-F4FNTG/ius-release-el7.rpm: ius-release-2-1.el7.ius.noarch

Marking /var/tmp/yum-root-F4FNTG/ius-release-el7.rpm to be installed

Examining /var/tmp/yum-root-F4FNTG/epel-release-latest-7.noarch.rpm: epel-release-7-14.noarch

Marking /var/tmp/yum-root-F4FNTG/epel-release-latest-7.noarch.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package epel-release.noarch 0:7-14 will be installed

---> Package ius-release.noarch 0:2-1.el7.ius will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

epel-release noarch 7-14 /epel-release-latest-7.noarch 25 k

ius-release noarch 2-1.el7.ius /ius-release-el7 4.5 k

Transaction Summary

================================================================================

Install 2 Packages

Total size: 30 k

Installed size: 30 k

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : epel-release-7-14.noarch 1/2

Installing : ius-release-2-1.el7.ius.noarch 2/2

Verifying : epel-release-7-14.noarch 1/2

Verifying : ius-release-2-1.el7.ius.noarch 2/2

Installed:

epel-release.noarch 0:7-14 ius-release.noarch 0:2-1.el7.ius

Complete!

[root@labmaster ~]#

Note: in the above, I am targeting '*', which means the job runs on all accepted minions. In my case, I have just the 4 minions. If you have other minions that will not be part of the installation, replace '*' with specific minion names. For example, to check epel-release on each minion individually:

salt 'labmaster' cmd.run "rpm -qa | grep epel-release"

salt 'labredis' cmd.run "rpm -qa | grep epel-release"

salt 'labpostgres' cmd.run "rpm -qa | grep epel-release"

salt 'labraas' cmd.run "rpm -qa | grep epel-release"

Installing the other packages:

Install python36-cryptography

salt '*' pkg.install python36-cryptography

Output:

[root@labmaster ~]# salt '*' pkg.install python36-cryptography

labpostgres:

----------

gpg-pubkey.(none):

----------

new:

2fa658e0-45700c69,352c64e5-52ae6884,de57bfbe-53a9be98,fd431d51-4ae0493b

old:

2fa658e0-45700c69,de57bfbe-53a9be98,fd431d51-4ae0493b

python36-asn1crypto:

----------

new:

0.24.0-7.el7

old:

python36-cffi:

----------

new:

1.9.1-3.el7

old:

python36-cryptography:

----------

new:

2.3-2.el7

old:

python36-ply:

----------

new:

3.9-2.el7

old:

python36-pycparser:

----------

new:

2.14-2.el7

old:

labredis:

----------

gpg-pubkey.(none):

----------

new:

2fa658e0-45700c69,352c64e5-52ae6884,de57bfbe-53a9be98,fd431d51-4ae0493b

old:

2fa658e0-45700c69,de57bfbe-53a9be98,fd431d51-4ae0493b

python36-asn1crypto:

----------

new:

0.24.0-7.el7

old:

python36-cffi:

----------

new:

1.9.1-3.el7

old:

python36-cryptography:

----------

new:

2.3-2.el7

old:

python36-ply:

----------

new:

3.9-2.el7

old:

python36-pycparser:

----------

new:

2.14-2.el7

old:

labmaster:

----------

gpg-pubkey.(none):

----------

new:

2fa658e0-45700c69,352c64e5-52ae6884,de57bfbe-53a9be98,fd431d51-4ae0493b

old:

2fa658e0-45700c69,de57bfbe-53a9be98,fd431d51-4ae0493b

python36-asn1crypto:

----------

new:

0.24.0-7.el7

old:

python36-cffi:

----------

new:

1.9.1-3.el7

old:

python36-cryptography:

----------

new:

2.3-2.el7

old:

python36-ply:

----------

new:

3.9-2.el7

old:

python36-pycparser:

----------

new:

2.14-2.el7

old:

labraas:

----------

gpg-pubkey.(none):

----------

new:

2fa658e0-45700c69,352c64e5-52ae6884,de57bfbe-53a9be98,fd431d51-4ae0493b

old:

2fa658e0-45700c69,de57bfbe-53a9be98,fd431d51-4ae0493b

python36-asn1crypto:

----------

new:

0.24.0-7.el7

old:

python36-cffi:

----------

new:

1.9.1-3.el7

old:

python36-cryptography:

----------

new:

2.3-2.el7

old:

python36-ply:

----------

new:

3.9-2.el7

old:

python36-pycparser:

----------

new:

2.14-2.el7

old:

Install python36-pyOpenSSL

salt '*' pkg.install python36-pyOpenSSL

Sample output:

[root@labmaster ~]# salt '*' pkg.install python36-pyOpenSSL

labmaster:

----------

python36-pyOpenSSL:

----------

new:

17.3.0-2.el7

old:

labpostgres:

----------

python36-pyOpenSSL:

----------

new:

17.3.0-2.el7

old:

labraas:

----------

python36-pyOpenSSL:

----------

new:

17.3.0-2.el7

old:

labredis:

----------

python36-pyOpenSSL:

----------

new:

17.3.0-2.el7

old:

Install rsync

This package is not mandatory; we will use it to copy files between the nodes, specifically the keys.

salt '*' pkg.install rsync

Sample output:

[root@labmaster ~]# salt '*' pkg.install rsync

labmaster:

----------

rsync:

----------

new:

3.1.2-10.el7

old:

labpostgres:

----------

rsync:

----------

new:

3.1.2-10.el7

old:

labraas:

----------

rsync:

----------

new:

3.1.2-10.el7

old:

labredis:

----------

rsync:

----------

new:

3.1.2-10.el7

old:
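The three pkg.install runs above can also be folded into a single Salt job and, following the earlier note on targeting, limited to the SSE minions instead of '*'. This sketch uses Salt's -L (list) targeting and the pkgs argument of pkg.install; the function name and the SALT variable (which defaults to salt and lets you substitute a stand-in for a dry run) are hypothetical.

```shell
# Install several packages on an explicit list of minions in one Salt job.
# SALT is a hypothetical override (defaults to "salt"), e.g. for dry runs.
# Usage: install_on_minions "<space-separated minion ids>" <pkg> [<pkg> ...]
install_on_minions() {
    # -L (list) targeting expects comma-separated minion IDs.
    targets=$(printf '%s' "$1" | tr -s ' ' ',')
    shift
    # Build the pkgs argument as a JSON-style list: ["pkg1","pkg2",...]
    pkgs=""
    for p in "$@"; do
        pkgs="${pkgs:+$pkgs,}\"$p\""
    done
    "${SALT:-salt}" -L "$targets" pkg.install "pkgs=[$pkgs]"
}
```

For this lab that would be install_on_minions "labmaster labpostgres labraas labredis" python36-cryptography python36-pyOpenSSL rsync.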

Place the installer files in the correct directories.

The automated/scripted installer was previously copied (scp) into the /root directory.

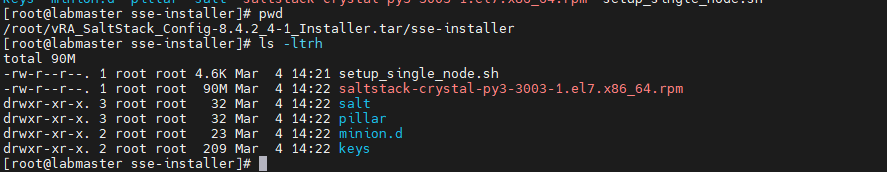

Navigate to the extracted archive and cd into the sse-install directory (its layout is shown in the screenshot).

Copy the pillar and state files from the SSE installer directory into the default pillar_roots directory (/srv/pillar) and the default file_roots directory (/srv/salt). These folders do not exist by default, so we create them:

sudo mkdir /srv/salt

sudo cp -r salt/sse /srv/salt/

sudo mkdir /srv/pillar

sudo cp -r pillar/sse /srv/pillar/

sudo cp -r pillar/top.sls /srv/pillar/

sudo cp -r salt/top.sls /srv/salt/

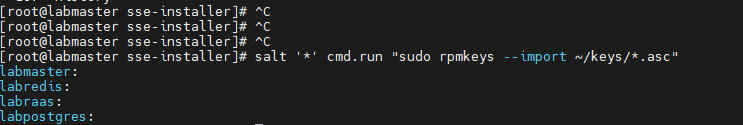

Add the SSE keys to all VMs:

We will use rsync to copy the keys from the SSE installer directory to all the machines:

rsync -avzh keys/ [email protected]:~/keys

rsync -avzh keys/ [email protected]:~/keys

rsync -avzh keys/ [email protected]:~/keys

rsync -avzh keys/ [email protected]:~/keys

Install the keys:

salt '*' cmd.run "sudo rpmkeys --import ~/keys/*.asc"
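The rsync commands above differ only in the target node, so a loop works too. This is a minimal sketch, assuming root SSH access from the salt-master and that it runs from the sse-install directory; node addresses are passed as arguments (the lab's addresses are not reproduced here). The function name and the RSYNC override (defaults to rsync) are hypothetical.

```shell
# Copy the SSE keys directory to each node given as an argument.
# Assumes root SSH access and that this runs from the sse-install directory.
# RSYNC is a hypothetical override (defaults to "rsync") for dry runs.
# Usage: push_keys <node-address> [<node-address> ...]
push_keys() {
    status=0
    for node in "$@"; do
        # The trailing slash on keys/ copies the directory's contents into ~/keys.
        if ! "${RSYNC:-rsync}" -avzh keys/ "root@${node}:~/keys"; then
            echo "rsync to ${node} failed" >&2
            status=1
        fi
    done
    return "$status"
}
```

After the import, salt '*' cmd.run 'rpm -q gpg-pubkey' lists the public keys now known to rpm on each node.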

Edit the pillar top.sls:

vi /srv/pillar/top.sls

Replace the highlighted list with the minion names of all the instances that will be used for the SSE deployment.

Edited:

Note: you can get the minion names using:

salt-key -L
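Rather than typing the list by hand, the accepted minion IDs can be turned into the sse_servers block directly. This sketch assumes salt-key's default plain-text layout (section headers ending in "Keys:"); the function name is hypothetical. Review the result before pasting it in, and drop any accepted minion that is not part of the SSE deployment.

```shell
# Turn salt-key output (read on stdin) into the sse_servers block of the
# pillar top.sls. Only IDs under "Accepted Keys:" are included.
# Usage: salt-key -L | render_sse_servers
render_sse_servers() {
    awk '
        BEGIN { print "{% load_yaml as sse_servers %}" }
        /Keys:$/ { section = $0; next }   # section headers end in "Keys:"
        section == "Accepted Keys:" && NF { print "  - " $1 }
        END { print "{% endload %}" }
    '
}
```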

My updated top file now looks like this:

{# Pillar Top File #}

{# Define SSE Servers #}
{% load_yaml as sse_servers %}
  - labmaster
  - labpostgres
  - labraas
  - labredis
{% endload %}

base:

  {# Assign Pillar Data to SSE Servers #}
  {% for server in sse_servers %}
  '{{ server }}':
    - sse
  {% endfor %}

Now, edit sse_settings.yaml:

vi /srv/pillar/sse/sse_settings.yaml

I have highlighted the important fields that must be updated in the config; the other fields are optional and can be changed as you see fit.

This is what my updated sample config looks like:

# Section 1: Define servers in the SSE deployment by minion id
servers:

  # PostgreSQL Server (Single value)
  pg_server: labpostgres

  # Redis Server (Single value)
  redis_server: labredis

  # SaltStack Enterprise Servers (List one or more)
  eapi_servers:
    - labraas

  # Salt Masters (List one or more)
  salt_masters:
    - labmaster

# Section 2: Define PostgreSQL settings
pg:

  # Set the PostgreSQL endpoint and port
  # (defines how SaltStack Enterprise services will connect to PostgreSQL)
  pg_endpoint: 172.16.120.111
  pg_port: 5432

  # Set the PostgreSQL Username and Password for SSE
  pg_username: sseuser
  pg_password: secure123

  # Specify if PostgreSQL Host Based Authentication by IP and/or FQDN
  # (allows SaltStack Enterprise services to connect to PostgreSQL)
  pg_hba_by_ip: True
  pg_hba_by_fqdn: False

  pg_cert_cn: pgsql.lab.ntitta.in
  pg_cert_name: pgsql.lab.ntitta.in

# Section 3: Define Redis settings
redis:

  # Set the Redis endpoint and port
  # (defines how SaltStack Enterprise services will connect to Redis)
  redis_endpoint: 172.16.120.105
  redis_port: 6379

  # Set the Redis Username and Password for SSE
  redis_username: sseredis
  redis_password: secure1234

# Section 4: eAPI Server settings
eapi:

  # Set the credentials for the SaltStack Enterprise service
  # - The default for the username is "root"
  #   and the default for the password is "salt"
  # - You will want to change this after a successful deployment
  eapi_username: root
  eapi_password: salt

  # Set the endpoint for the SaltStack Enterprise service
  eapi_endpoint: 172.16.120.115

  # Set if SaltStack Enterprise will use SSL encrypted communication (HTTPS)
  eapi_ssl_enabled: True

  # Set if SaltStack Enterprise will use SSL validation (verified certificate)
  eapi_ssl_validation: False

  # Set if SaltStack Enterprise (PostgreSQL, eAPI Servers, and Salt Masters)
  # will all be deployed on a single "standalone" host
  eapi_standalone: False

  # Set if SaltStack Enterprise will regard multiple masters as "active" or "failover"
  # - No impact to a single master configuration
  # - "active" (set below as False) means that all minions connect to each master (recommended)
  # - "failover" (set below as True) means that each minion connects to one master at a time
  eapi_failover_master: False

  # Set the encryption key for SaltStack Enterprise
  # (this should be a unique value for each installation)
  # To generate one, run: "openssl rand -hex 32"
  #
  # Note: Specify "auto" to have the installer generate a random key at installation time
  # ("auto" is only suitable for installations with a single SaltStack Enterprise server)
  eapi_key: auto

  eapi_server_cert_cn: raas.lab.ntitta.in
  eapi_server_cert_name: raas.lab.ntitta.in

# Section 5: Identifiers
ids:

  # Appends a customer-specific UUID to the namespace of the raas database
  # (this should be a unique value for each installation)
  # To generate one, run: "cat /proc/sys/kernel/random/uuid"
  customer_id: 43cab1f4-de60-4ab1-85b5-1d883c5c5d09

  # Set the Cluster ID for the master (or set of masters) that will manage
  # the SaltStack Enterprise infrastructure
  # (additional sets of masters may be easily managed with a separate installer)
  cluster_id: distributed_sandbox_env
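The comments in sse_settings.yaml name the commands for generating customer_id and eapi_key. This helper simply wraps those two commands and prints ready-to-paste lines; the two-space indentation (to sit under the ids: and eapi: keys) and the function name are assumptions. With a single RAAS server, as in this lab, the file's own note says eapi_key: auto is also acceptable.

```shell
# Generate fresh identifiers for sse_settings.yaml using the commands the
# file's comments suggest, printed as indented YAML lines.
gen_sse_ids() {
    customer_id=$(cat /proc/sys/kernel/random/uuid)   # random UUID (Linux)
    eapi_key=$(openssl rand -hex 32)                  # 32 random bytes as hex
    printf '  customer_id: %s\n' "$customer_id"
    printf '  eapi_key: %s\n' "$eapi_key"
}
```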

Refresh grains and pillar data:

salt '*' saltutil.refresh_grains

salt '*' saltutil.refresh_pillar

Confirm that the pillar returns the items:

salt '*' pillar.items

sample output:

labraas:

----------

sse_cluster_id:

distributed_sandbox_env

sse_customer_id:

43cab1f4-de60-4ab1-85b5-1d883c5c5d09

sse_eapi_endpoint:

172.16.120.115

sse_eapi_failover_master:

False

sse_eapi_key:

auto

sse_eapi_num_processes:

12

sse_eapi_password:

salt

sse_eapi_server_cert_cn:

raas.lab.ntitta.in

sse_eapi_server_cert_name:

raas.lab.ntitta.in

sse_eapi_server_fqdn_list:

- labraas.ntitta.lab

sse_eapi_server_ipv4_list:

- 172.16.120.115

sse_eapi_servers:

- labraas

sse_eapi_ssl_enabled:

True

sse_eapi_ssl_validation:

False

sse_eapi_standalone:

False

sse_eapi_username:

root

sse_pg_cert_cn:

pgsql.lab.ntitta.in

sse_pg_cert_name:

pgsql.lab.ntitta.in

sse_pg_endpoint:

172.16.120.111

sse_pg_fqdn:

labpostgres.ntitta.lab

sse_pg_hba_by_fqdn:

False

sse_pg_hba_by_ip:

True

sse_pg_ip:

172.16.120.111

sse_pg_password:

secure123

sse_pg_port:

5432

sse_pg_server:

labpostgres

sse_pg_username:

sseuser

sse_redis_endpoint:

172.16.120.105

sse_redis_password:

secure1234

sse_redis_port:

6379

sse_redis_server:

labredis

sse_redis_username:

sseredis

sse_salt_master_fqdn_list:

- labmaster.ntitta.lab

sse_salt_master_ipv4_list:

- 172.16.120.113

sse_salt_masters:

- labmaster

labmaster:

----------

sse_cluster_id:

distributed_sandbox_env

sse_customer_id:

43cab1f4-de60-4ab1-85b5-1d883c5c5d09

sse_eapi_endpoint:

172.16.120.115

sse_eapi_failover_master:

False

sse_eapi_key:

auto

sse_eapi_num_processes:

12

sse_eapi_password:

salt

sse_eapi_server_cert_cn:

raas.lab.ntitta.in

sse_eapi_server_cert_name:

raas.lab.ntitta.in

sse_eapi_server_fqdn_list:

- labraas.ntitta.lab

sse_eapi_server_ipv4_list:

- 172.16.120.115

sse_eapi_servers:

- labraas

sse_eapi_ssl_enabled:

True

sse_eapi_ssl_validation:

False

sse_eapi_standalone:

False

sse_eapi_username:

root

sse_pg_cert_cn:

pgsql.lab.ntitta.in

sse_pg_cert_name:

pgsql.lab.ntitta.in

sse_pg_endpoint:

172.16.120.111

sse_pg_fqdn:

labpostgres.ntitta.lab

sse_pg_hba_by_fqdn:

False

sse_pg_hba_by_ip:

True

sse_pg_ip:

172.16.120.111

sse_pg_password:

secure123

sse_pg_port:

5432

sse_pg_server:

labpostgres

sse_pg_username:

sseuser

sse_redis_endpoint:

172.16.120.105

sse_redis_password:

secure1234

sse_redis_port:

6379

sse_redis_server:

labredis

sse_redis_username:

sseredis

sse_salt_master_fqdn_list:

- labmaster.ntitta.lab

sse_salt_master_ipv4_list:

- 172.16.120.113

sse_salt_masters:

- labmaster

labredis:

----------

sse_cluster_id:

distributed_sandbox_env

sse_customer_id:

43cab1f4-de60-4ab1-85b5-1d883c5c5d09

sse_eapi_endpoint:

172.16.120.115

sse_eapi_failover_master:

False

sse_eapi_key:

auto

sse_eapi_num_processes:

12

sse_eapi_password:

salt

sse_eapi_server_cert_cn:

raas.lab.ntitta.in

sse_eapi_server_cert_name:

raas.lab.ntitta.in

sse_eapi_server_fqdn_list:

- labraas.ntitta.lab

sse_eapi_server_ipv4_list:

- 172.16.120.115

sse_eapi_servers:

- labraas

sse_eapi_ssl_enabled:

True

sse_eapi_ssl_validation:

False

sse_eapi_standalone:

False

sse_eapi_username:

root

sse_pg_cert_cn:

pgsql.lab.ntitta.in

sse_pg_cert_name:

pgsql.lab.ntitta.in

sse_pg_endpoint:

172.16.120.111

sse_pg_fqdn:

labpostgres.ntitta.lab

sse_pg_hba_by_fqdn:

False

sse_pg_hba_by_ip:

True

sse_pg_ip:

172.16.120.111

sse_pg_password:

secure123

sse_pg_port:

5432

sse_pg_server:

labpostgres

sse_pg_username:

sseuser

sse_redis_endpoint:

172.16.120.105

sse_redis_password:

secure1234

sse_redis_port:

6379

sse_redis_server:

labredis

sse_redis_username:

sseredis

sse_salt_master_fqdn_list:

- labmaster.ntitta.lab

sse_salt_master_ipv4_list:

- 172.16.120.113

sse_salt_masters:

- labmaster

labpostgres:

----------

sse_cluster_id:

distributed_sandbox_env

sse_customer_id:

43cab1f4-de60-4ab1-85b5-1d883c5c5d09

sse_eapi_endpoint:

172.16.120.115

sse_eapi_failover_master:

False

sse_eapi_key:

auto

sse_eapi_num_processes:

12

sse_eapi_password:

salt

sse_eapi_server_cert_cn:

raas.lab.ntitta.in

sse_eapi_server_cert_name:

raas.lab.ntitta.in

sse_eapi_server_fqdn_list:

- labraas.ntitta.lab

sse_eapi_server_ipv4_list:

- 172.16.120.115

sse_eapi_servers:

- labraas

sse_eapi_ssl_enabled:

True

sse_eapi_ssl_validation:

False

sse_eapi_standalone:

False

sse_eapi_username:

root

sse_pg_cert_cn:

pgsql.lab.ntitta.in

sse_pg_cert_name:

pgsql.lab.ntitta.in

sse_pg_endpoint:

172.16.120.111

sse_pg_fqdn:

labpostgres.ntitta.lab

sse_pg_hba_by_fqdn:

False

sse_pg_hba_by_ip:

True

sse_pg_ip:

172.16.120.111

sse_pg_password:

secure123

sse_pg_port:

5432

sse_pg_server:

labpostgres

sse_pg_username:

sseuser

sse_redis_endpoint:

172.16.120.105

sse_redis_password:

secure1234

sse_redis_port:

6379

sse_redis_server:

labredis

sse_redis_username:

sseredis

sse_salt_master_fqdn_list:

- labmaster.ntitta.lab

sse_salt_master_ipv4_list:

- 172.16.120.113

sse_salt_masters:

- labmaster
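Scanning pillar.items for four minions is tedious. A narrower check is pillar.get for a single key with Salt's txt outputter, which prints one "minion: value" line per minion. The sketch below flags every minion whose value differs from the expected one and returns non-zero if any do; the function name is hypothetical, and it assumes single-line values.

```shell
# Read "minion: value" lines (salt ... --out=txt) on stdin and print the ID
# of every minion whose value is not the expected one.
# Usage: salt '*' pillar.get sse_pg_server --out=txt | check_pillar_value labpostgres
check_pillar_value() {
    awk -v want="$1" '
        {
            id = $1; sub(/:$/, "", id)                 # "labraas:" -> "labraas"
            value = $0; sub(/^[^:]*:[ ]*/, "", value)  # text after "id: "
            if (value != want) { print id; bad = 1 }
        }
        END { exit bad }
    '
}
```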

Install Postgres:

salt labpostgres state.highstate

Output:

[root@labmaster sse]# sudo salt labpostgres state.highstate

labpostgres:

----------

ID: install_postgresql-server

Function: pkg.installed

Result: True

Comment: 4 targeted packages were installed/updated.

Started: 19:57:29.956557

Duration: 27769.35 ms

Changes:

----------

postgresql12:

----------

new:

12.7-1PGDG.rhel7

old:

postgresql12-contrib:

----------

new:

12.7-1PGDG.rhel7

old:

postgresql12-libs:

----------

new:

12.7-1PGDG.rhel7

old:

postgresql12-server:

----------

new:

12.7-1PGDG.rhel7

old:

----------

ID: initialize_postgres-database

Function: cmd.run

Name: /usr/pgsql-12/bin/postgresql-12-setup initdb

Result: True

Comment: Command "/usr/pgsql-12/bin/postgresql-12-setup initdb" run

Started: 19:57:57.729506

Duration: 2057.166 ms

Changes:

----------

pid:

33869

retcode:

0

stderr:

stdout:

Initializing database ... OK

----------

ID: create_pki_postgres_path

Function: file.directory

Name: /etc/pki/postgres/certs

Result: True

Comment:

Started: 19:57:59.792636

Duration: 7.834 ms

Changes:

----------

/etc/pki/postgres/certs:

----------

directory:

new

----------

ID: create_ssl_certificate

Function: module.run

Name: tls.create_self_signed_cert

Result: True

Comment: Module function tls.create_self_signed_cert executed

Started: 19:57:59.802082

Duration: 163.484 ms

Changes:

----------

ret:

Created Private Key: "/etc/pki/postgres/certs/pgsq.key." Created Certificate: "/etc/pki/postgres/certs/pgsq.crt."

----------

ID: set_certificate_permissions

Function: file.managed

Name: /etc/pki/postgres/certs/pgsq.crt

Result: True

Comment:

Started: 19:57:59.965923

Duration: 4.142 ms

Changes:

----------

group:

postgres

mode:

0400

user:

postgres

----------

ID: set_key_permissions

Function: file.managed

Name: /etc/pki/postgres/certs/pgsq.key

Result: True

Comment:

Started: 19:57:59.970470

Duration: 3.563 ms

Changes:

----------

group:

postgres

mode:

0400

user:

postgres

----------

ID: configure_postgres

Function: file.managed

Name: /var/lib/pgsql/12/data/postgresql.conf

Result: True

Comment: File /var/lib/pgsql/12/data/postgresql.conf updated

Started: 19:57:59.974388

Duration: 142.264 ms

Changes:

----------

diff:

---

+++

@@ -16,9 +16,9 @@

#

....

....

...

#------------------------------------------------------------------------------

----------

ID: configure_pg_hba

Function: file.managed

Name: /var/lib/pgsql/12/data/pg_hba.conf

Result: True

Comment: File /var/lib/pgsql/12/data/pg_hba.conf updated

...

...

...

+

----------

ID: start_postgres

Function: service.running

Name: postgresql-12

Result: True

Comment: Service postgresql-12 has been enabled, and is running

Started: 19:58:00.225639

Duration: 380.763 ms

Changes:

----------

postgresql-12:

True

----------

ID: create_db_user

Function: postgres_user.present

Name: sseuser

Result: True

Comment: The user sseuser has been created

Started: 19:58:00.620381

Duration: 746.545 ms

Changes:

----------

sseuser:

Present

Summary for labpostgres

-------------

Succeeded: 10 (changed=10)

Failed: 0

-------------

Total states run: 10

Total run time: 31.360 s
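Highstate output is long, so the Summary block is the part to check. This sketch totals the "Failed:" counts from every minion's summary and returns non-zero if any state failed, or 2 if no summary was found at all (for example, when the job ends with an authentication error). It assumes Salt's default highstate output format; the function name is hypothetical.

```shell
# Read Salt highstate output on stdin, sum the "Failed:" counts from every
# "Summary for <minion>" block, and return 0 only if nothing failed.
# Returns 2 if no summary was found at all.
# Usage: salt labpostgres state.highstate | tee pg-highstate.log | highstate_ok
highstate_ok() {
    awk '
        /^ *Failed:/ { seen = 1; failed += $2 }
        END {
            if (!seen) { print "no highstate summary found" > "/dev/stderr"; exit 2 }
            print "failed states: " failed
            exit (failed > 0)
        }
    '
}
```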

If this fails for some reason, you can revert/remove Postgres with the command below, then fix the underlying errors before retrying:

salt labpostgres state.apply sse.eapi_database.revert

Example:

[root@labmaster sse]# salt labpostgres state.apply sse.eapi_database.revert

labpostgres:

----------

ID: revert_all

Function: pkg.removed

Result: True

Comment: All targeted packages were removed.

Started: 16:30:26.736578

Duration: 10127.277 ms

Changes:

----------

postgresql12:

----------

new:

old:

12.7-1PGDG.rhel7

postgresql12-contrib:

----------

new:

old:

12.7-1PGDG.rhel7

postgresql12-libs:

----------

new:

old:

12.7-1PGDG.rhel7

postgresql12-server:

----------

new:

old:

12.7-1PGDG.rhel7

----------

ID: revert_all

Function: file.absent

Name: /var/lib/pgsql/

Result: True

Comment: Removed directory /var/lib/pgsql/

Started: 16:30:36.870967

Duration: 79.941 ms

Changes:

----------

removed:

/var/lib/pgsql/

----------

ID: revert_all

Function: file.absent

Name: /etc/pki/postgres/

Result: True

Comment: Removed directory /etc/pki/postgres/

Started: 16:30:36.951337

Duration: 3.34 ms

Changes:

----------

removed:

/etc/pki/postgres/

----------

ID: revert_all

Function: user.absent

Name: postgres

Result: True

Comment: Removed user postgres

Started: 16:30:36.956696

Duration: 172.372 ms

Changes:

----------

postgres:

removed

postgres group:

removed

Summary for labpostgres

------------

Succeeded: 4 (changed=4)

Failed: 0

------------

Total states run: 4

Total run time: 10.383 s

Install Redis:

salt labredis state.highstate

Sample output:

[root@labmaster sse]# salt labredis state.highstate

labredis:

----------

ID: install_redis

Function: pkg.installed

Result: True

Comment: The following packages were installed/updated: jemalloc, redis5

Started: 20:07:12.059084

Duration: 25450.196 ms

Changes:

----------

jemalloc:

----------

new:

3.6.0-1.el7

old:

redis5:

----------

new:

5.0.9-1.el7.ius

old:

----------

ID: configure_redis

Function: file.managed

Name: /etc/redis.conf

Result: True

Comment: File /etc/redis.conf updated

Started: 20:07:37.516851

Duration: 164.011 ms

Changes:

----------

diff:

---

+++

@@ -1,5 +1,5 @@

...

...

-bind 127.0.0.1

+bind 0.0.0.0

.....

.....

@@ -1361,12 +1311,8 @@

# active-defrag-threshold-upper 100

# Minimal effort for defrag in CPU percentage

-# active-defrag-cycle-min 5

+# active-defrag-cycle-min 25

# Maximal effort for defrag in CPU percentage

# active-defrag-cycle-max 75

-# Maximum number of set/hash/zset/list fields that will be processed from

-# the main dictionary scan

-# active-defrag-max-scan-fields 1000

-

mode:

0664

user:

root

----------

ID: start_redis

Function: service.running

Name: redis

Result: True

Comment: Service redis has been enabled, and is running

Started: 20:07:37.703605

Duration: 251.205 ms

Changes:

----------

redis:

True

Summary for labredis

------------

Succeeded: 3 (changed=3)

Failed: 0

------------

Total states run: 3

Total run time: 25.865 s

Install RAAS

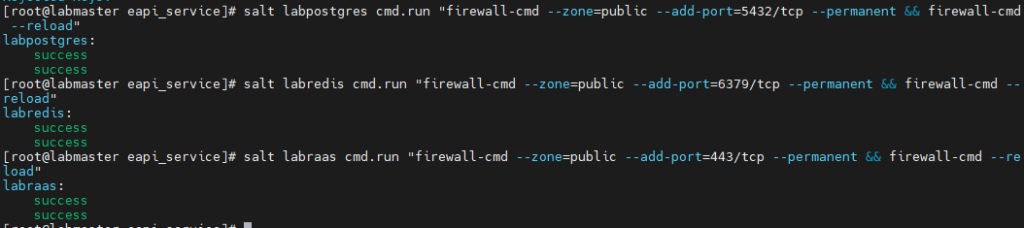

Before proceeding with the RAAS setup, ensure that Postgres and Redis are reachable. In my case, the Linux firewall (firewalld) is still enabled on these machines, so I use the commands below to add firewall exceptions on the respective nodes, including port 443 on the RAAS node for HTTPS. Again, I am using Salt to run the commands on the remote nodes:

salt labpostgres cmd.run "firewall-cmd --zone=public --add-port=5432/tcp --permanent && firewall-cmd --reload"

salt labredis cmd.run "firewall-cmd --zone=public --add-port=6379/tcp --permanent && firewall-cmd --reload"

salt labraas cmd.run "firewall-cmd --zone=public --add-port=443/tcp --permanent && firewall-cmd --reload"
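After opening the ports, you can confirm from the RAAS node that Postgres and Redis accept TCP connections. This is a minimal sketch that relies on bash's /dev/tcp redirection and coreutils timeout; it only shows that a port is reachable, not that the credentials work. The function names are hypothetical.

```shell
# Return 0 if a TCP connection to host:port succeeds within the timeout
# (default 3 seconds). Uses bash's /dev/tcp and coreutils' timeout.
port_open() {
    timeout "${3:-3}" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Check each host:port argument, print its state, and return 1 if any is closed.
# Usage: check_ports 172.16.120.111:5432 172.16.120.105:6379
check_ports() {
    rc=0
    for pair in "$@"; do
        host=${pair%:*}
        port=${pair##*:}
        if port_open "$host" "$port"; then
            echo "open   $pair"
        else
            echo "CLOSED $pair"
            rc=1
        fi
    done
    return "$rc"
}
```

On each node, firewall-cmd --list-ports shows which ports are currently open in the active zone.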

Now, proceed with the RAAS install:

salt labraas state.highstate

Sample output:

[root@labmaster sse]# salt labraas state.highstate

labraas:

----------

ID: install_xmlsec

Function: pkg.installed

Result: True

Comment: 2 targeted packages were installed/updated.

The following packages were already installed: openssl, openssl-libs, xmlsec1, xmlsec1-openssl, libxslt, libtool-ltdl

Started: 20:36:16.715011

Duration: 39176.806 ms

Changes:

----------

singleton-manager-i18n:

----------

new:

0.6.0-5.el7.x86_64_1

old:

ssc-translation-bundle:

----------

new:

8.6.2-2.ph3.noarch_1

old:

----------

ID: install_raas

Function: pkg.installed

Result: True

Comment: The following packages were installed/updated: raas

Started: 20:36:55.942737

Duration: 35689.868 ms

Changes:

----------

raas:

----------

new:

8.6.2.11-1.el7

old:

----------

ID: install_raas

Function: cmd.run

Name: systemctl daemon-reload

Result: True

Comment: Command "systemctl daemon-reload" run

Started: 20:37:31.638377

Duration: 138.354 ms

Changes:

----------

pid:

31230

retcode:

0

stderr:

stdout:

----------

ID: create_pki_raas_path_eapi

Function: file.directory

Name: /etc/pki/raas/certs

Result: True

Comment: The directory /etc/pki/raas/certs is in the correct state

Started: 20:37:31.785757

Duration: 11.788 ms

Changes:

----------

ID: create_ssl_certificate_eapi

Function: module.run

Name: tls.create_self_signed_cert

Result: True

Comment: Module function tls.create_self_signed_cert executed

Started: 20:37:31.800719

Duration: 208.431 ms

Changes:

----------

ret:

Created Private Key: "/etc/pki/raas/certs/raas.lab.ntitta.in.key." Created Certificate: "/etc/pki/raas/certs/raas.lab.ntitta.in.crt."

----------

ID: set_certificate_permissions_eapi

Function: file.managed

Name: /etc/pki/raas/certs/raas.lab.ntitta.in.crt

Result: True

Comment:

Started: 20:37:32.009536

Duration: 5.967 ms

Changes:

----------

group:

raas

mode:

0400

user:

raas

----------

ID: set_key_permissions_eapi

Function: file.managed

Name: /etc/pki/raas/certs/raas.lab.ntitta.in.key

Result: True

Comment:

Started: 20:37:32.015921

Duration: 6.888 ms

Changes:

----------

group:

raas

mode:

0400

user:

raas

----------

ID: raas_owns_raas

Function: file.directory

Name: /etc/raas/

Result: True

Comment: The directory /etc/raas is in the correct state

Started: 20:37:32.023200

Duration: 4.485 ms

Changes:

----------

ID: configure_raas

Function: file.managed

Name: /etc/raas/raas

Result: True

Comment: File /etc/raas/raas updated

Started: 20:37:32.028374

Duration: 132.226 ms

Changes:

----------

diff:

---

+++

@@ -1,49 +1,47 @@

...

...

+

----------

ID: save_credentials

Function: cmd.run

Name: /usr/bin/raas save_creds 'postgres={"username":"sseuser","password":"secure123"}' 'redis={"password":"secure1234"}'

Result: True

Comment: All files in creates exist

Started: 20:37:32.163432

Duration: 2737.346 ms

Changes:

----------

ID: set_secconf_permissions

Function: file.managed

Name: /etc/raas/raas.secconf

Result: True

Comment: File /etc/raas/raas.secconf exists with proper permissions. No changes made.

Started: 20:37:34.902143

Duration: 5.949 ms

Changes:

----------

ID: ensure_raas_pki_directory

Function: file.directory

Name: /etc/raas/pki

Result: True

Comment: The directory /etc/raas/pki is in the correct state

Started: 20:37:34.908558

Duration: 4.571 ms

Changes:

----------

ID: change_owner_to_raas

Function: file.directory

Name: /etc/raas/pki

Result: True

Comment: The directory /etc/raas/pki is in the correct state

Started: 20:37:34.913566

Duration: 5.179 ms

Changes:

----------

ID: /usr/sbin/ldconfig

Function: cmd.run

Result: True

Comment: Command "/usr/sbin/ldconfig" run

Started: 20:37:34.919069

Duration: 32.018 ms

Changes:

----------

pid:

31331

retcode:

0

stderr:

stdout:

----------

ID: start_raas

Function: service.running

Name: raas

Result: True

Comment: check_cmd determined the state succeeded

Started: 20:37:34.952926

Duration: 16712.726 ms

Changes:

----------

raas:

True

----------

ID: restart_raas_and_confirm_connectivity

Function: cmd.run

Name: salt-call service.restart raas

Result: True

Comment: check_cmd determined the state succeeded

Started: 20:37:51.666446

Duration: 472.205 ms

Changes:

----------

ID: get_initial_objects_file

Function: file.managed

Name: /tmp/sample-resource-types.raas

Result: True

Comment: File /tmp/sample-resource-types.raas updated

Started: 20:37:52.139370

Duration: 180.432 ms

Changes:

----------

group:

raas

mode:

0640

user:

raas

----------

ID: import_initial_objects

Function: cmd.run

Name: /usr/bin/raas dump --insecure --server https://localhost --auth root:salt --mode import < /tmp/sample-resource-types.raas

Result: True

Comment: Command "/usr/bin/raas dump --insecure --server https://localhost --auth root:salt --mode import < /tmp/sample-resource-types.raas" run

Started: 20:37:52.320146

Duration: 24566.332 ms

Changes:

----------

pid:

31465

retcode:

0

stderr:

stdout:

----------

ID: raas_service_restart

Function: cmd.run

Name: systemctl restart raas

Result: True

Comment: Command "systemctl restart raas" run

Started: 20:38:16.887666

Duration: 2257.183 ms

Changes:

----------

pid:

31514

retcode:

0

stderr:

stdout:

Summary for labraas

-------------

Succeeded: 19 (changed=12)

Failed: 0

-------------

Total states run: 19

Total run time: 122.349 s

Install Eapi Agent:

salt labmaster state.highstate

Output:

[root@labmaster sse]# salt labmaster state.highstate

Authentication error occurred.

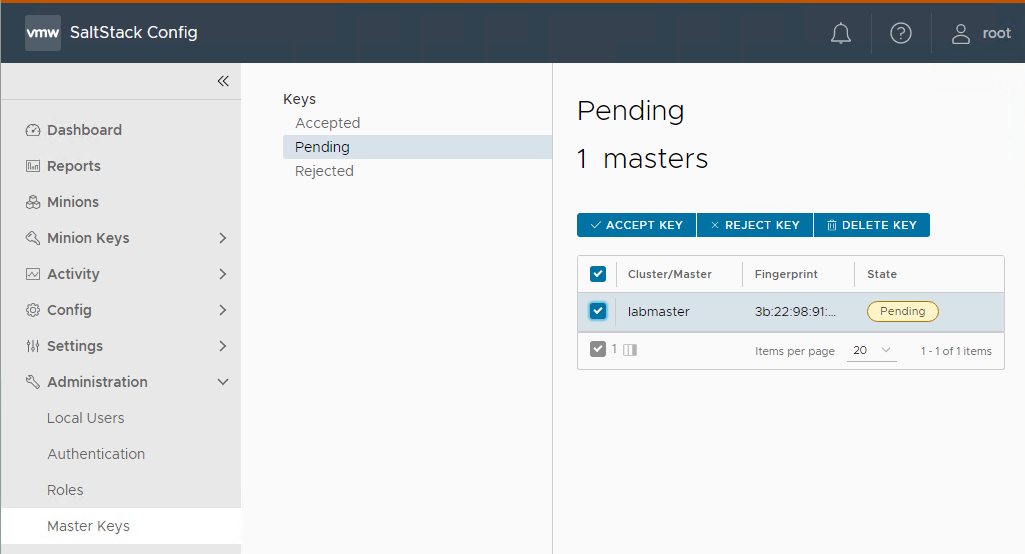

The authentication error above is expected. Now, log in to RAAS via a web browser:

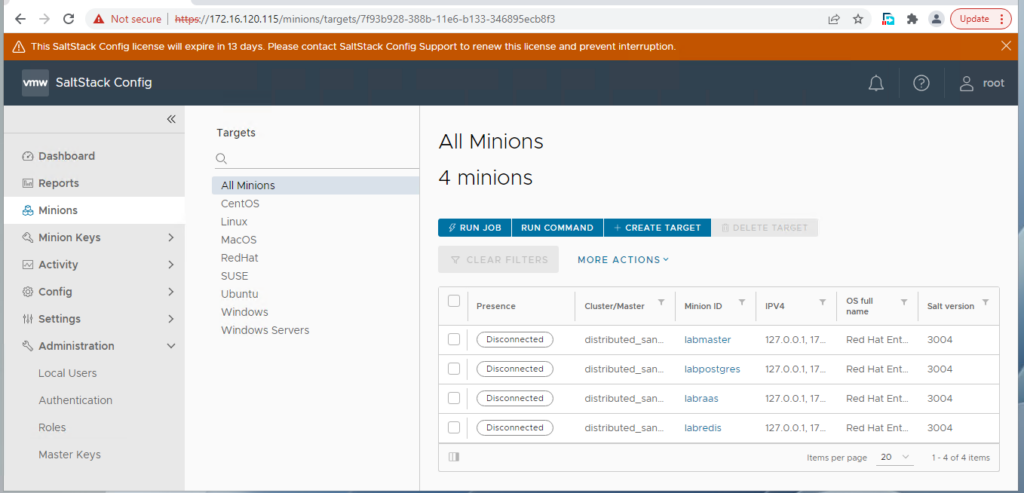

Accept the minion master keys, and all minions are now visible:

You now have SaltConfig / SaltStack Enterprise installed successfully.

Troubleshooting:

If the Postgres or RAAS highstate fails with the errors below, download a newer version of the SaltConfig installer tar files from VMware (the init.sls state files in version 8.5 and older have issues):

----------

ID: create_ssl_certificate

Function: module.run

Name: tls.create_self_signed_cert

Result: False

Comment: Module function tls.create_self_signed_cert threw an exception. Exception: [Errno 2] No such file or directory: '/etc/pki/postgres/certs/sdb://osenv/PG_CERT_CN.key'

Started: 17:11:56.347565

Duration: 297.925 ms

Changes:

----------

ID: create_ssl_certificate_eapi

Function: module.run

Name: tls.create_self_signed_cert

Result: False

Comment: Module function tls.create_self_signed_cert threw an exception. Exception: [Errno 2] No such file or directory: '/etc/pki/raas/certs/sdb://osenv/SSE_CERT_CN.key'

Started: 20:26:32.061862

Duration: 42.028 ms

Changes:

----------

Alternatively, you can work around the issue by hardcoding the full paths for the pg cert and the raas cert in the init.sls files.
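To find what needs hardcoding, you can search the copied state and pillar files for the literal sdb://osenv/ prefix that appears in the failing paths above. This sketch assumes the default /srv/salt/sse and /srv/pillar/sse locations used earlier in this guide; the function name is hypothetical.

```shell
# Print every line under the given directories that still contains the
# unresolved "sdb://osenv/" prefix; returns 0 if any match was found.
# Defaults to the SSE state and pillar directories created earlier.
list_sdb_refs() {
    [ "$#" -gt 0 ] || set -- /srv/salt/sse /srv/pillar/sse
    grep -rn 'sdb://osenv/' "$@"
}
```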

Another error you may see:

----------

ID: create_ssl_certificate

Function: module.run

Name: tls.create_self_signed_cert

Result: False

Comment: Module function tls.create_self_signed_cert is not available

Started: 16:11:55.436579

Duration: 932.506 ms

Changes:

Cause: the prerequisites are not installed. python36-pyOpenSSL and python36-cryptography must be installed on every node that tls.create_self_signed_cert is run against.
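To confirm this cause on a node, you can ask rpm which of the two packages are missing; rpm -q exits non-zero for a package that is not installed. The function name and the RPM override (defaults to rpm, so the logic can be tried without an RPM database) are hypothetical.

```shell
# Print each named package that rpm reports as not installed.
# RPM is a hypothetical override (defaults to "rpm").
# Usage: missing_pkgs python36-pyOpenSSL python36-cryptography
missing_pkgs() {
    for pkg in "$@"; do
        # rpm -q returns non-zero when the package is not installed.
        "${RPM:-rpm}" -q "$pkg" >/dev/null 2>&1 || printf '%s\n' "$pkg"
    done
}
```

Across all nodes at once, salt '*' cmd.run 'rpm -q python36-pyOpenSSL python36-cryptography' reports "package ... is not installed" for anything missing.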
