screenshot:

log: /var/log/boot.gz

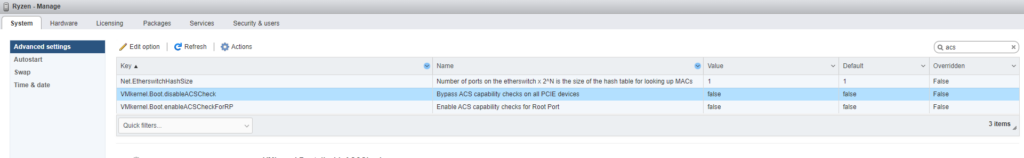

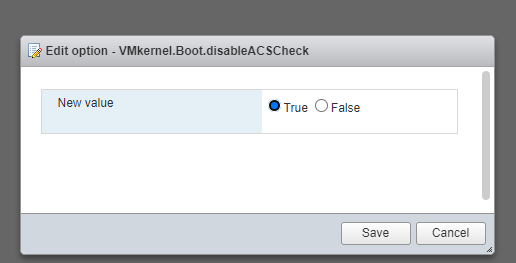

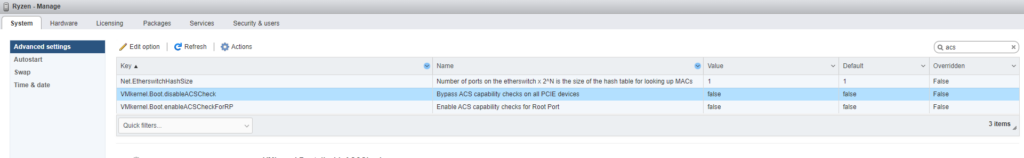

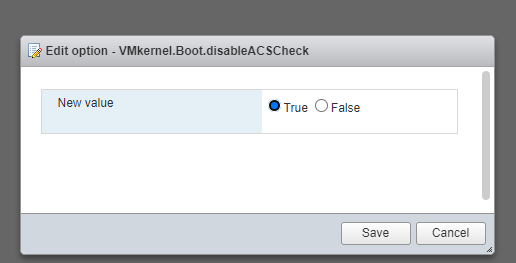

No PCI ACS support (device_id); the workaround was to disable ACS.

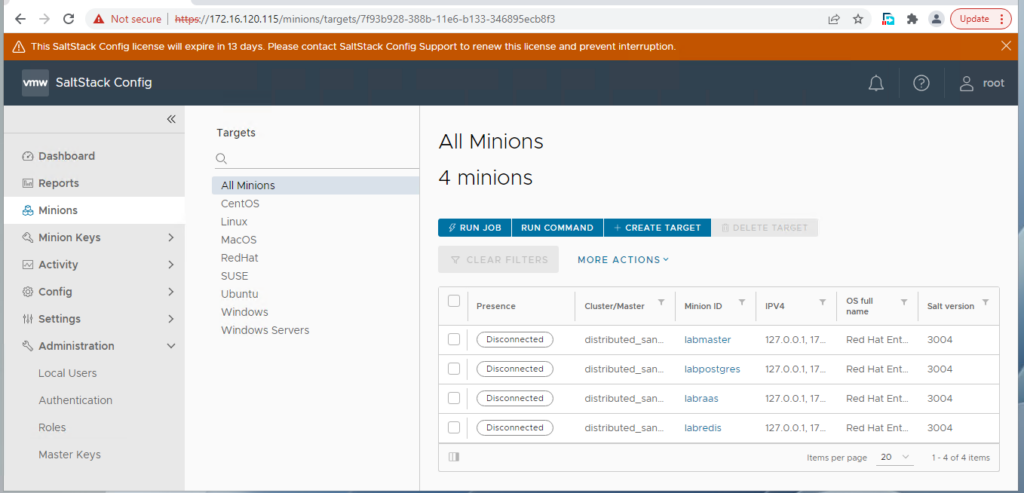

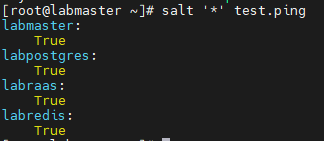

You must have a working salt-master, with minions installed on the Redis, Postgres, and RAAS instances. Refer to SaltConfig Multi-Node Scripted Deployment Part-1.

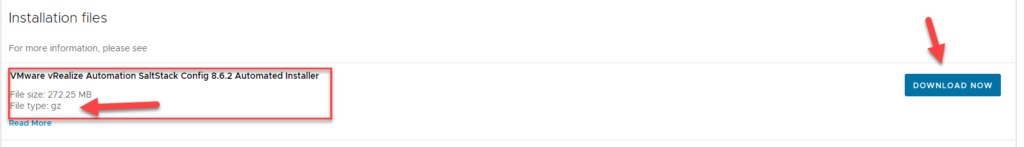

Download the SaltConfig automated installer (.gz) from https://customerconnect.vmware.com/downloads/details?downloadGroup=VRA-SSC-862&productId=1206&rPId=80829

Extract it and copy the files to the salt-master. In my case, I have placed them in the /root directory.

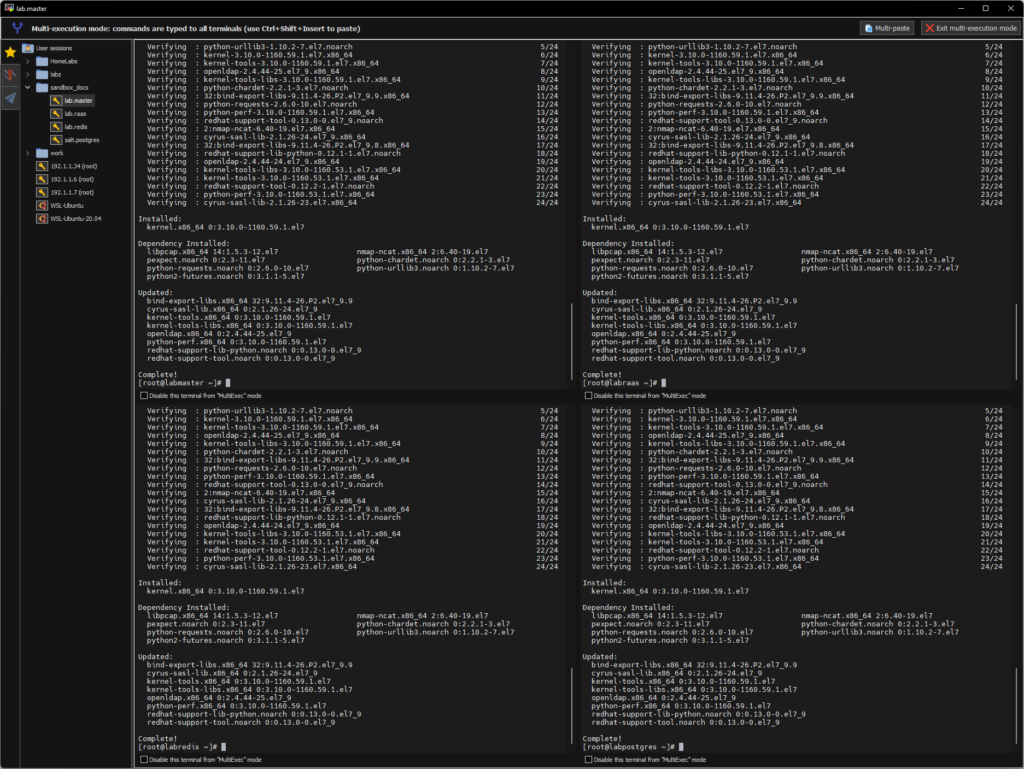

The automated/scripted installer needs additional packages. You will need to install the components below on all the machines.

Note: you can install most of these using yum install packagename on CentOS; on Red Hat, however, you will need to install the epel-release RPM manually:

sudo yum install https://repo.ius.io/ius-release-el7.rpm https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm -y

Since the package needs to be installed on all nodes, I will leverage salt to run the commands on all nodes.

salt '*' cmd.run "sudo yum install https://repo.ius.io/ius-release-el7.rpm https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm -y"

Sample output:

[root@labmaster ~]# salt '*' cmd.run "sudo yum install https://repo.ius.io/ius-release-el7.rpm https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm -y"

labpostgres:

Loaded plugins: product-id, search-disabled-repos, subscription-manager

Examining /var/tmp/yum-root-VBGG1c/ius-release-el7.rpm: ius-release-2-1.el7.ius.noarch

Marking /var/tmp/yum-root-VBGG1c/ius-release-el7.rpm to be installed

Examining /var/tmp/yum-root-VBGG1c/epel-release-latest-7.noarch.rpm: epel-release-7-14.noarch

Marking /var/tmp/yum-root-VBGG1c/epel-release-latest-7.noarch.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package epel-release.noarch 0:7-14 will be installed

---> Package ius-release.noarch 0:2-1.el7.ius will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

epel-release noarch 7-14 /epel-release-latest-7.noarch 25 k

ius-release noarch 2-1.el7.ius /ius-release-el7 4.5 k

Transaction Summary

================================================================================

Install 2 Packages

Total size: 30 k

Installed size: 30 k

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : epel-release-7-14.noarch 1/2

Installing : ius-release-2-1.el7.ius.noarch 2/2

Verifying : epel-release-7-14.noarch 1/2

Verifying : ius-release-2-1.el7.ius.noarch 2/2

Installed:

epel-release.noarch 0:7-14 ius-release.noarch 0:2-1.el7.ius

Complete!

labmaster:

Loaded plugins: product-id, search-disabled-repos, subscription-manager

Examining /var/tmp/yum-root-ALBF1m/ius-release-el7.rpm: ius-release-2-1.el7.ius.noarch

Marking /var/tmp/yum-root-ALBF1m/ius-release-el7.rpm to be installed

Examining /var/tmp/yum-root-ALBF1m/epel-release-latest-7.noarch.rpm: epel-release-7-14.noarch

Marking /var/tmp/yum-root-ALBF1m/epel-release-latest-7.noarch.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package epel-release.noarch 0:7-14 will be installed

---> Package ius-release.noarch 0:2-1.el7.ius will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

epel-release noarch 7-14 /epel-release-latest-7.noarch 25 k

ius-release noarch 2-1.el7.ius /ius-release-el7 4.5 k

Transaction Summary

================================================================================

Install 2 Packages

Total size: 30 k

Installed size: 30 k

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : epel-release-7-14.noarch 1/2

Installing : ius-release-2-1.el7.ius.noarch 2/2

Verifying : epel-release-7-14.noarch 1/2

Verifying : ius-release-2-1.el7.ius.noarch 2/2

Installed:

epel-release.noarch 0:7-14 ius-release.noarch 0:2-1.el7.ius

Complete!

labredis:

Loaded plugins: product-id, search-disabled-repos, subscription-manager

Examining /var/tmp/yum-root-QKzOF1/ius-release-el7.rpm: ius-release-2-1.el7.ius.noarch

Marking /var/tmp/yum-root-QKzOF1/ius-release-el7.rpm to be installed

Examining /var/tmp/yum-root-QKzOF1/epel-release-latest-7.noarch.rpm: epel-release-7-14.noarch

Marking /var/tmp/yum-root-QKzOF1/epel-release-latest-7.noarch.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package epel-release.noarch 0:7-14 will be installed

---> Package ius-release.noarch 0:2-1.el7.ius will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

epel-release noarch 7-14 /epel-release-latest-7.noarch 25 k

ius-release noarch 2-1.el7.ius /ius-release-el7 4.5 k

Transaction Summary

================================================================================

Install 2 Packages

Total size: 30 k

Installed size: 30 k

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : epel-release-7-14.noarch 1/2

Installing : ius-release-2-1.el7.ius.noarch 2/2

Verifying : epel-release-7-14.noarch 1/2

Verifying : ius-release-2-1.el7.ius.noarch 2/2

Installed:

epel-release.noarch 0:7-14 ius-release.noarch 0:2-1.el7.ius

Complete!

labraas:

Loaded plugins: product-id, search-disabled-repos, subscription-manager

Examining /var/tmp/yum-root-F4FNTG/ius-release-el7.rpm: ius-release-2-1.el7.ius.noarch

Marking /var/tmp/yum-root-F4FNTG/ius-release-el7.rpm to be installed

Examining /var/tmp/yum-root-F4FNTG/epel-release-latest-7.noarch.rpm: epel-release-7-14.noarch

Marking /var/tmp/yum-root-F4FNTG/epel-release-latest-7.noarch.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package epel-release.noarch 0:7-14 will be installed

---> Package ius-release.noarch 0:2-1.el7.ius will be installed

--> Finished Dependency Resolution

Dependencies Resolved

================================================================================

Package Arch Version Repository Size

================================================================================

Installing:

epel-release noarch 7-14 /epel-release-latest-7.noarch 25 k

ius-release noarch 2-1.el7.ius /ius-release-el7 4.5 k

Transaction Summary

================================================================================

Install 2 Packages

Total size: 30 k

Installed size: 30 k

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : epel-release-7-14.noarch 1/2

Installing : ius-release-2-1.el7.ius.noarch 2/2

Verifying : epel-release-7-14.noarch 1/2

Verifying : ius-release-2-1.el7.ius.noarch 2/2

Installed:

epel-release.noarch 0:7-14 ius-release.noarch 0:2-1.el7.ius

Complete!

[root@labmaster ~]#

Note: in the above, I am targeting '*', which means all accepted minions will be targeted when executing the job. In my case, I have just the 4 minions. You can replace the '*' with minion names should you have other minions that are not going to be used as part of the installation, e.g.:

salt 'labmaster' cmd.run "rpm -qa | grep epel-release"

salt 'labredis' cmd.run "rpm -qa | grep epel-release"

salt 'labpostgres' cmd.run "rpm -qa | grep epel-release"

salt 'labraas' cmd.run "rpm -qa | grep epel-release"

salt '*' pkg.install python36-cryptography

Output:

[root@labmaster ~]# salt '*' pkg.install python36-cryptography

labpostgres:

----------

gpg-pubkey.(none):

----------

new:

2fa658e0-45700c69,352c64e5-52ae6884,de57bfbe-53a9be98,fd431d51-4ae0493b

old:

2fa658e0-45700c69,de57bfbe-53a9be98,fd431d51-4ae0493b

python36-asn1crypto:

----------

new:

0.24.0-7.el7

old:

python36-cffi:

----------

new:

1.9.1-3.el7

old:

python36-cryptography:

----------

new:

2.3-2.el7

old:

python36-ply:

----------

new:

3.9-2.el7

old:

python36-pycparser:

----------

new:

2.14-2.el7

old:

labredis:

----------

gpg-pubkey.(none):

----------

new:

2fa658e0-45700c69,352c64e5-52ae6884,de57bfbe-53a9be98,fd431d51-4ae0493b

old:

2fa658e0-45700c69,de57bfbe-53a9be98,fd431d51-4ae0493b

python36-asn1crypto:

----------

new:

0.24.0-7.el7

old:

python36-cffi:

----------

new:

1.9.1-3.el7

old:

python36-cryptography:

----------

new:

2.3-2.el7

old:

python36-ply:

----------

new:

3.9-2.el7

old:

python36-pycparser:

----------

new:

2.14-2.el7

old:

labmaster:

----------

gpg-pubkey.(none):

----------

new:

2fa658e0-45700c69,352c64e5-52ae6884,de57bfbe-53a9be98,fd431d51-4ae0493b

old:

2fa658e0-45700c69,de57bfbe-53a9be98,fd431d51-4ae0493b

python36-asn1crypto:

----------

new:

0.24.0-7.el7

old:

python36-cffi:

----------

new:

1.9.1-3.el7

old:

python36-cryptography:

----------

new:

2.3-2.el7

old:

python36-ply:

----------

new:

3.9-2.el7

old:

python36-pycparser:

----------

new:

2.14-2.el7

old:

labraas:

----------

gpg-pubkey.(none):

----------

new:

2fa658e0-45700c69,352c64e5-52ae6884,de57bfbe-53a9be98,fd431d51-4ae0493b

old:

2fa658e0-45700c69,de57bfbe-53a9be98,fd431d51-4ae0493b

python36-asn1crypto:

----------

new:

0.24.0-7.el7

old:

python36-cffi:

----------

new:

1.9.1-3.el7

old:

python36-cryptography:

----------

new:

2.3-2.el7

old:

python36-ply:

----------

new:

3.9-2.el7

old:

python36-pycparser:

----------

new:

2.14-2.el7

old:

salt '*' pkg.install python36-pyOpenSSL

Sample output:

[root@labmaster ~]# salt '*' pkg.install python36-pyOpenSSL

labmaster:

----------

python36-pyOpenSSL:

----------

new:

17.3.0-2.el7

old:

labpostgres:

----------

python36-pyOpenSSL:

----------

new:

17.3.0-2.el7

old:

labraas:

----------

python36-pyOpenSSL:

----------

new:

17.3.0-2.el7

old:

labredis:

----------

python36-pyOpenSSL:

----------

new:

17.3.0-2.el7

old:

rsync is not a mandatory package; we will use it to copy files between the nodes, specifically the keys.

salt '*' pkg.install rsync

Sample output:

[root@labmaster ~]# salt '*' pkg.install rsync

labmaster:

----------

rsync:

----------

new:

3.1.2-10.el7

old:

labpostgres:

----------

rsync:

----------

new:

3.1.2-10.el7

old:

labraas:

----------

rsync:

----------

new:

3.1.2-10.el7

old:

labredis:

----------

rsync:

----------

new:

3.1.2-10.el7

old:
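
Before moving on, it may help to confirm that all three packages landed on every node. The sketch below assumes it is run on the salt-master and uses Salt's `pkg.version` execution function; the `packages` variable name is only illustrative.

```shell
#!/bin/sh
# Packages installed in the steps above (names taken from this walkthrough).
packages="python36-cryptography python36-pyOpenSSL rsync"

# Query the installed version of each package on every accepted minion.
# The guard keeps the script harmless on hosts without the salt CLI.
if command -v salt >/dev/null 2>&1; then
    # $packages is left unquoted on purpose so each name is a separate argument.
    salt '*' pkg.version $packages
else
    echo "salt CLI not found; run this on the salt-master" >&2
fi
```

A minion that reports an empty value for one of the names most likely did not get that package installed.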

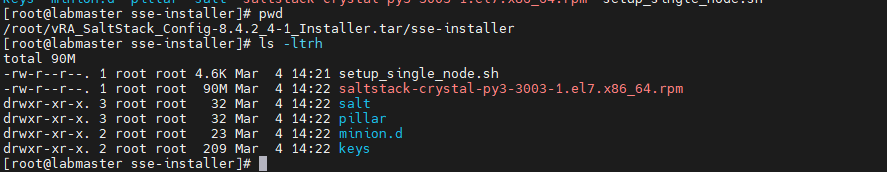

The automated/scripted installer was previously copied (scp) into the /root directory.

Navigate to the extracted tar and cd to the sse-install directory; it should look like the below:

Copy the pillar and state files from the SSE installer directory into the default pillar_roots directory and the default file_roots directory (these folders do not exist by default, so we create them):

sudo mkdir /srv/salt

sudo cp -r salt/sse /srv/salt/

sudo mkdir /srv/pillar

sudo cp -r pillar/sse /srv/pillar/

sudo cp -r pillar/top.sls /srv/pillar/

sudo cp -r salt/top.sls /srv/salt/
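
The copy steps above can also be wrapped in a small re-runnable helper. This is a minimal sketch, not part of the installer: the function name `stage_sse_files` and its optional second argument are hypothetical, and it assumes the source directory has the same `salt/` and `pillar/` layout as sse-install. Like the commands above, it overwrites any `top.sls` already present.

```shell
#!/bin/sh
# stage_sse_files SRC [ROOT]
#   SRC  - the extracted sse-install directory (contains salt/ and pillar/)
#   ROOT - where the file roots and pillar roots live; defaults to /srv
stage_sse_files() {
    src="$1"
    root="${2:-/srv}"

    # -p creates the directories only if they are missing, so re-runs do not fail.
    mkdir -p "$root/salt" "$root/pillar" || return 1

    # Copy the state tree and the pillar tree, then the two top files.
    cp -r "$src/salt/sse" "$root/salt/" &&
    cp -r "$src/pillar/sse" "$root/pillar/" &&
    cp "$src/salt/top.sls" "$root/salt/" &&
    cp "$src/pillar/top.sls" "$root/pillar/"
}

# Example (run from inside sse-install, as root or via sudo):
#   stage_sse_files . /srv
```

Passing a scratch directory as the second argument is a cheap way to check the layout before touching /srv.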

we will use rsync to copy the keys from the SSE installer directory to all the machines:

rsync -avzh keys/ [email protected]:~/keys

rsync -avzh keys/ [email protected]:~/keys

rsync -avzh keys/ [email protected]:~/keys

rsync -avzh keys/ [email protected]:~/keys

Install the keys:

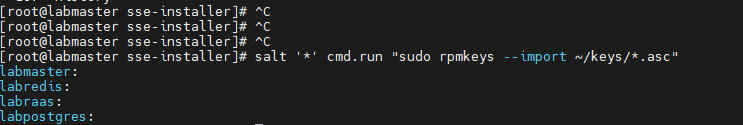

salt '*' cmd.run "sudo rpmkeys --import ~/keys/*.asc"

Output:

Edit the pillar top.sls:

vi /srv/pillar/top.sls

Replace the list highlighted below with the minion names of all the instances that will be used for the SSE deployment.

Edited:

Note: you can get the minion names using

salt-key -L

Now, my updated top file looks like the below:

    {# Pillar Top File #}

    {# Define SSE Servers #}
    {% load_yaml as sse_servers %}
      - labmaster
      - labpostgres
      - labraas
      - labredis
    {% endload %}

    base:

    {# Assign Pillar Data to SSE Servers #}
    {% for server in sse_servers %}
      '{{ server }}':
        - sse
    {% endfor %}

Now, edit sse_settings.yaml:

vi /srv/pillar/sse/sse_settings.yaml

I have highlighted the important fields that must be updated in the config. The other fields are optional and can be changed as you see fit.

This is what my updated sample config looks like:

    # Section 1: Define servers in the SSE deployment by minion id
    servers:
      # PostgreSQL Server (Single value)
      pg_server: labpostgres
      # Redis Server (Single value)
      redis_server: labredis
      # SaltStack Enterprise Servers (List one or more)
      eapi_servers:
        - labraas
      # Salt Masters (List one or more)
      salt_masters:
        - labmaster

    # Section 2: Define PostgreSQL settings
    pg:
      # Set the PostgreSQL endpoint and port
      # (defines how SaltStack Enterprise services will connect to PostgreSQL)
      pg_endpoint: 172.16.120.111
      pg_port: 5432
      # Set the PostgreSQL Username and Password for SSE
      pg_username: sseuser
      pg_password: secure123
      # Specify if PostgreSQL Host Based Authentication by IP and/or FQDN
      # (allows SaltStack Enterprise services to connect to PostgreSQL)
      pg_hba_by_ip: True
      pg_hba_by_fqdn: False
      pg_cert_cn: pgsql.lab.ntitta.in
      pg_cert_name: pgsql.lab.ntitta.in

    # Section 3: Define Redis settings
    redis:
      # Set the Redis endpoint and port
      # (defines how SaltStack Enterprise services will connect to Redis)
      redis_endpoint: 172.16.120.105
      redis_port: 6379
      # Set the Redis Username and Password for SSE
      redis_username: sseredis
      redis_password: secure1234

    # Section 4: eAPI Server settings
    eapi:
      # Set the credentials for the SaltStack Enterprise service
      # - The default for the username is "root"
      #   and the default for the password is "salt"
      # - You will want to change this after a successful deployment
      eapi_username: root
      eapi_password: salt
      # Set the endpoint for the SaltStack Enterprise service
      eapi_endpoint: 172.16.120.115
      # Set if SaltStack Enterprise will use SSL encrypted communication (HTTPS)
      eapi_ssl_enabled: True
      # Set if SaltStack Enterprise will use SSL validation (verified certificate)
      eapi_ssl_validation: False
      # Set if SaltStack Enterprise (PostgreSQL, eAPI Servers, and Salt Masters)
      # will all be deployed on a single "standalone" host
      eapi_standalone: False
      # Set if SaltStack Enterprise will regard multiple masters as "active" or "failover"
      # - No impact to a single master configuration
      # - "active" (set below as False) means that all minions connect to each master (recommended)
      # - "failover" (set below as True) means that each minion connects to one master at a time
      eapi_failover_master: False
      # Set the encryption key for SaltStack Enterprise
      # (this should be a unique value for each installation)
      # To generate one, run: "openssl rand -hex 32"
      #
      # Note: Specify "auto" to have the installer generate a random key at installation time
      # ("auto" is only suitable for installations with a single SaltStack Enterprise server)
      eapi_key: auto
      eapi_server_cert_cn: raas.lab.ntitta.in
      eapi_server_cert_name: raas.lab.ntitta.in

    # Section 5: Identifiers
    ids:
      # Appends a customer-specific UUID to the namespace of the raas database
      # (this should be a unique value for each installation)
      # To generate one, run: "cat /proc/sys/kernel/random/uuid"
      customer_id: 43cab1f4-de60-4ab1-85b5-1d883c5c5d09
      # Set the Cluster ID for the master (or set of masters) that will manage
      # the SaltStack Enterprise infrastructure
      # (additional sets of masters may be easily managed with a separate installer)
      cluster_id: distributed_sandbox_env
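
The comments in the config name two commands for generating `eapi_key` and `customer_id`. In this walkthrough `eapi_key` is left as `auto`, which the file itself says only suits a single SaltStack Enterprise server, so an explicit key matters mainly when there is more than one. The sketch below runs both commands and prints the values in config form; the `/dev/urandom` and `uuidgen` fallbacks are my own assumptions and not part of the installer.

```shell
#!/bin/sh
# Generate a 64-character hex key, as suggested by the comment in sse_settings.yaml.
if command -v openssl >/dev/null 2>&1; then
    eapi_key="$(openssl rand -hex 32)"
else
    # Fallback (assumption): read 32 random bytes and hex-encode them.
    eapi_key="$(head -c 32 /dev/urandom | od -An -v -tx1 | tr -d ' \n')"
fi

# Generate a random UUID from the kernel, as suggested by the same file.
if [ -r /proc/sys/kernel/random/uuid ]; then
    customer_id="$(cat /proc/sys/kernel/random/uuid)"
else
    # Fallback (assumption): uuidgen is installed on hosts without /proc.
    customer_id="$(uuidgen)"
fi

# Print the values in the form used by the eapi and ids sections.
echo "eapi_key: $eapi_key"
echo "customer_id: $customer_id"
```

Paste the two printed values over the existing `eapi_key` and `customer_id` entries before refreshing pillar data.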

Refresh grains and pillar data:

salt '*' saltutil.refresh_grains

salt '*' saltutil.refresh_pillar

Confirm that pillar returns the items:

salt '*' pillar.items

Sample output:

labraas:

----------

sse_cluster_id:

distributed_sandbox_env

sse_customer_id:

43cab1f4-de60-4ab1-85b5-1d883c5c5d09

sse_eapi_endpoint:

172.16.120.115

sse_eapi_failover_master:

False

sse_eapi_key:

auto

sse_eapi_num_processes:

12

sse_eapi_password:

salt

sse_eapi_server_cert_cn:

raas.lab.ntitta.in

sse_eapi_server_cert_name:

raas.lab.ntitta.in

sse_eapi_server_fqdn_list:

- labraas.ntitta.lab

sse_eapi_server_ipv4_list:

- 172.16.120.115

sse_eapi_servers:

- labraas

sse_eapi_ssl_enabled:

True

sse_eapi_ssl_validation:

False

sse_eapi_standalone:

False

sse_eapi_username:

root

sse_pg_cert_cn:

pgsql.lab.ntitta.in

sse_pg_cert_name:

pgsql.lab.ntitta.in

sse_pg_endpoint:

172.16.120.111

sse_pg_fqdn:

labpostgres.ntitta.lab

sse_pg_hba_by_fqdn:

False

sse_pg_hba_by_ip:

True

sse_pg_ip:

172.16.120.111

sse_pg_password:

secure123

sse_pg_port:

5432

sse_pg_server:

labpostgres

sse_pg_username:

sseuser

sse_redis_endpoint:

172.16.120.105

sse_redis_password:

secure1234

sse_redis_port:

6379

sse_redis_server:

labredis

sse_redis_username:

sseredis

sse_salt_master_fqdn_list:

- labmaster.ntitta.lab

sse_salt_master_ipv4_list:

- 172.16.120.113

sse_salt_masters:

- labmaster

labmaster:

----------

sse_cluster_id:

distributed_sandbox_env

sse_customer_id:

43cab1f4-de60-4ab1-85b5-1d883c5c5d09

sse_eapi_endpoint:

172.16.120.115

sse_eapi_failover_master:

False

sse_eapi_key:

auto

sse_eapi_num_processes:

12

sse_eapi_password:

salt

sse_eapi_server_cert_cn:

raas.lab.ntitta.in

sse_eapi_server_cert_name:

raas.lab.ntitta.in

sse_eapi_server_fqdn_list:

- labraas.ntitta.lab

sse_eapi_server_ipv4_list:

- 172.16.120.115

sse_eapi_servers:

- labraas

sse_eapi_ssl_enabled:

True

sse_eapi_ssl_validation:

False

sse_eapi_standalone:

False

sse_eapi_username:

root

sse_pg_cert_cn:

pgsql.lab.ntitta.in

sse_pg_cert_name:

pgsql.lab.ntitta.in

sse_pg_endpoint:

172.16.120.111

sse_pg_fqdn:

labpostgres.ntitta.lab

sse_pg_hba_by_fqdn:

False

sse_pg_hba_by_ip:

True

sse_pg_ip:

172.16.120.111

sse_pg_password:

secure123

sse_pg_port:

5432

sse_pg_server:

labpostgres

sse_pg_username:

sseuser

sse_redis_endpoint:

172.16.120.105

sse_redis_password:

secure1234

sse_redis_port:

6379

sse_redis_server:

labredis

sse_redis_username:

sseredis

sse_salt_master_fqdn_list:

- labmaster.ntitta.lab

sse_salt_master_ipv4_list:

- 172.16.120.113

sse_salt_masters:

- labmaster

labredis:

----------

sse_cluster_id:

distributed_sandbox_env

sse_customer_id:

43cab1f4-de60-4ab1-85b5-1d883c5c5d09

sse_eapi_endpoint:

172.16.120.115

sse_eapi_failover_master:

False

sse_eapi_key:

auto

sse_eapi_num_processes:

12

sse_eapi_password:

salt

sse_eapi_server_cert_cn:

raas.lab.ntitta.in

sse_eapi_server_cert_name:

raas.lab.ntitta.in

sse_eapi_server_fqdn_list:

- labraas.ntitta.lab

sse_eapi_server_ipv4_list:

- 172.16.120.115

sse_eapi_servers:

- labraas

sse_eapi_ssl_enabled:

True

sse_eapi_ssl_validation:

False

sse_eapi_standalone:

False

sse_eapi_username:

root

sse_pg_cert_cn:

pgsql.lab.ntitta.in

sse_pg_cert_name:

pgsql.lab.ntitta.in

sse_pg_endpoint:

172.16.120.111

sse_pg_fqdn:

labpostgres.ntitta.lab

sse_pg_hba_by_fqdn:

False

sse_pg_hba_by_ip:

True

sse_pg_ip:

172.16.120.111

sse_pg_password:

secure123

sse_pg_port:

5432

sse_pg_server:

labpostgres

sse_pg_username:

sseuser

sse_redis_endpoint:

172.16.120.105

sse_redis_password:

secure1234

sse_redis_port:

6379

sse_redis_server:

labredis

sse_redis_username:

sseredis

sse_salt_master_fqdn_list:

- labmaster.ntitta.lab

sse_salt_master_ipv4_list:

- 172.16.120.113

sse_salt_masters:

- labmaster

labpostgres:

----------

sse_cluster_id:

distributed_sandbox_env

sse_customer_id:

43cab1f4-de60-4ab1-85b5-1d883c5c5d09

sse_eapi_endpoint:

172.16.120.115

sse_eapi_failover_master:

False

sse_eapi_key:

auto

sse_eapi_num_processes:

12

sse_eapi_password:

salt

sse_eapi_server_cert_cn:

raas.lab.ntitta.in

sse_eapi_server_cert_name:

raas.lab.ntitta.in

sse_eapi_server_fqdn_list:

- labraas.ntitta.lab

sse_eapi_server_ipv4_list:

- 172.16.120.115

sse_eapi_servers:

- labraas

sse_eapi_ssl_enabled:

True

sse_eapi_ssl_validation:

False

sse_eapi_standalone:

False

sse_eapi_username:

root

sse_pg_cert_cn:

pgsql.lab.ntitta.in

sse_pg_cert_name:

pgsql.lab.ntitta.in

sse_pg_endpoint:

172.16.120.111

sse_pg_fqdn:

labpostgres.ntitta.lab

sse_pg_hba_by_fqdn:

False

sse_pg_hba_by_ip:

True

sse_pg_ip:

172.16.120.111

sse_pg_password:

secure123

sse_pg_port:

5432

sse_pg_server:

labpostgres

sse_pg_username:

sseuser

sse_redis_endpoint:

172.16.120.105

sse_redis_password:

secure1234

sse_redis_port:

6379

sse_redis_server:

labredis

sse_redis_username:

sseredis

sse_salt_master_fqdn_list:

- labmaster.ntitta.lab

sse_salt_master_ipv4_list:

- 172.16.120.113

sse_salt_masters:

- labmaster
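
The full `pillar.items` dump above includes the Postgres and Redis passwords. When only a quick sanity check is needed, it may be preferable to ask for a few non-secret keys with `pillar.item` instead. A minimal sketch, assuming it runs on the salt-master; the choice of keys is mine, picked from the output above.

```shell
#!/bin/sh
# Non-secret pillar keys worth spot-checking (key names taken from the output above).
keys="sse_pg_endpoint sse_redis_endpoint sse_eapi_endpoint sse_salt_masters"

if command -v salt >/dev/null 2>&1; then
    # pillar.item returns only the named keys; $keys is unquoted so each is its own argument.
    salt '*' pillar.item $keys
else
    echo "salt CLI not found; run this on the salt-master" >&2
fi
```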

Now, install PostgreSQL by running a highstate on the labpostgres node:

salt labpostgres state.highstate

Output:

[root@labmaster sse]# sudo salt labpostgres state.highstate

labpostgres:

----------

ID: install_postgresql-server

Function: pkg.installed

Result: True

Comment: 4 targeted packages were installed/updated.

Started: 19:57:29.956557

Duration: 27769.35 ms

Changes:

----------

postgresql12:

----------

new:

12.7-1PGDG.rhel7

old:

postgresql12-contrib:

----------

new:

12.7-1PGDG.rhel7

old:

postgresql12-libs:

----------

new:

12.7-1PGDG.rhel7

old:

postgresql12-server:

----------

new:

12.7-1PGDG.rhel7

old:

----------

ID: initialize_postgres-database

Function: cmd.run

Name: /usr/pgsql-12/bin/postgresql-12-setup initdb

Result: True

Comment: Command "/usr/pgsql-12/bin/postgresql-12-setup initdb" run

Started: 19:57:57.729506

Duration: 2057.166 ms

Changes:

----------

pid:

33869

retcode:

0

stderr:

stdout:

Initializing database ... OK

----------

ID: create_pki_postgres_path

Function: file.directory

Name: /etc/pki/postgres/certs

Result: True

Comment:

Started: 19:57:59.792636

Duration: 7.834 ms

Changes:

----------

/etc/pki/postgres/certs:

----------

directory:

new

----------

ID: create_ssl_certificate

Function: module.run

Name: tls.create_self_signed_cert

Result: True

Comment: Module function tls.create_self_signed_cert executed

Started: 19:57:59.802082

Duration: 163.484 ms

Changes:

----------

ret:

Created Private Key: "/etc/pki/postgres/certs/pgsq.key." Created Certificate: "/etc/pki/postgres/certs/pgsq.crt."

----------

ID: set_certificate_permissions

Function: file.managed

Name: /etc/pki/postgres/certs/pgsq.crt

Result: True

Comment:

Started: 19:57:59.965923

Duration: 4.142 ms

Changes:

----------

group:

postgres

mode:

0400

user:

postgres

----------

ID: set_key_permissions

Function: file.managed

Name: /etc/pki/postgres/certs/pgsq.key

Result: True

Comment:

Started: 19:57:59.970470

Duration: 3.563 ms

Changes:

----------

group:

postgres

mode:

0400

user:

postgres

----------

ID: configure_postgres

Function: file.managed

Name: /var/lib/pgsql/12/data/postgresql.conf

Result: True

Comment: File /var/lib/pgsql/12/data/postgresql.conf updated

Started: 19:57:59.974388

Duration: 142.264 ms

Changes:

----------

diff:

---

+++

@@ -16,9 +16,9 @@

#

....

....

...

#------------------------------------------------------------------------------

----------

ID: configure_pg_hba

Function: file.managed

Name: /var/lib/pgsql/12/data/pg_hba.conf

Result: True

Comment: File /var/lib/pgsql/12/data/pg_hba.conf updated

...

...

...

+

----------

ID: start_postgres

Function: service.running

Name: postgresql-12

Result: True

Comment: Service postgresql-12 has been enabled, and is running

Started: 19:58:00.225639

Duration: 380.763 ms

Changes:

----------

postgresql-12:

True

----------

ID: create_db_user

Function: postgres_user.present

Name: sseuser

Result: True

Comment: The user sseuser has been created

Started: 19:58:00.620381

Duration: 746.545 ms

Changes:

----------

sseuser:

Present

Summary for labpostgres

-------------

Succeeded: 10 (changed=10)

Failed: 0

-------------

Total states run: 10

Total run time: 31.360 s
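
Highstate output is long, and the part that matters most is the `Failed:` line in the summary. The helper below is a small sketch (the function name `failed_states` is hypothetical) that reads highstate output on stdin and prints the total failed count, assuming the summary format shown above.

```shell
#!/bin/sh
# failed_states: read highstate output on stdin and print the sum of all "Failed:" counts.
failed_states() {
    awk '/^[[:space:]]*Failed:/ { total += $2 } END { print total + 0 }'
}

# Example (on the salt-master):
#   salt labpostgres state.highstate | failed_states
```

A result of 0 means every state in the run reported success; anything else is a cue to scroll back through the full output.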

If this fails for some reason, you can revert/remove Postgres using the command below, then fix the underlying errors before retrying:

salt labpostgres state.apply sse.eapi_database.revert

Example:

[root@labmaster sse]# salt labpostgres state.apply sse.eapi_database.revert

labpostgres:

----------

ID: revert_all

Function: pkg.removed

Result: True

Comment: All targeted packages were removed.

Started: 16:30:26.736578

Duration: 10127.277 ms

Changes:

----------

postgresql12:

----------

new:

old:

12.7-1PGDG.rhel7

postgresql12-contrib:

----------

new:

old:

12.7-1PGDG.rhel7

postgresql12-libs:

----------

new:

old:

12.7-1PGDG.rhel7

postgresql12-server:

----------

new:

old:

12.7-1PGDG.rhel7

----------

ID: revert_all

Function: file.absent

Name: /var/lib/pgsql/

Result: True

Comment: Removed directory /var/lib/pgsql/

Started: 16:30:36.870967

Duration: 79.941 ms

Changes:

----------

removed:

/var/lib/pgsql/

----------

ID: revert_all

Function: file.absent

Name: /etc/pki/postgres/

Result: True

Comment: Removed directory /etc/pki/postgres/

Started: 16:30:36.951337

Duration: 3.34 ms

Changes:

----------

removed:

/etc/pki/postgres/

----------

ID: revert_all

Function: user.absent

Name: postgres

Result: True

Comment: Removed user postgres

Started: 16:30:36.956696

Duration: 172.372 ms

Changes:

----------

postgres:

removed

postgres group:

removed

Summary for labpostgres

------------

Succeeded: 4 (changed=4)

Failed: 0

------------

Total states run: 4

Total run time: 10.383 s

Next, install Redis by running a highstate on the labredis node:

salt labredis state.highstate

Sample output:

[root@labmaster sse]# salt labredis state.highstate

labredis:

----------

ID: install_redis

Function: pkg.installed

Result: True

Comment: The following packages were installed/updated: jemalloc, redis5

Started: 20:07:12.059084

Duration: 25450.196 ms

Changes:

----------

jemalloc:

----------

new:

3.6.0-1.el7

old:

redis5:

----------

new:

5.0.9-1.el7.ius

old:

----------

ID: configure_redis

Function: file.managed

Name: /etc/redis.conf

Result: True

Comment: File /etc/redis.conf updated

Started: 20:07:37.516851

Duration: 164.011 ms

Changes:

----------

diff:

---

+++

@@ -1,5 +1,5 @@

...

...

-bind 127.0.0.1

+bind 0.0.0.0

.....

.....

@@ -1361,12 +1311,8 @@

# active-defrag-threshold-upper 100

# Minimal effort for defrag in CPU percentage

-# active-defrag-cycle-min 5

+# active-defrag-cycle-min 25

# Maximal effort for defrag in CPU percentage

# active-defrag-cycle-max 75

-# Maximum number of set/hash/zset/list fields that will be processed from

-# the main dictionary scan

-# active-defrag-max-scan-fields 1000

-

mode:

0664

user:

root

----------

ID: start_redis

Function: service.running

Name: redis

Result: True

Comment: Service redis has been enabled, and is running

Started: 20:07:37.703605

Duration: 251.205 ms

Changes:

----------

redis:

True

Summary for labredis

------------

Succeeded: 3 (changed=3)

Failed: 0

------------

Total states run: 3

Total run time: 25.865 s

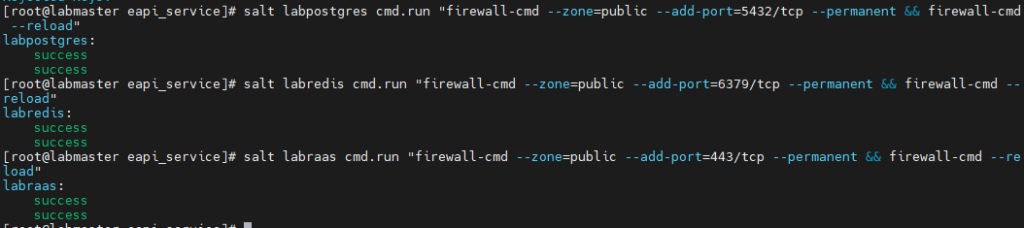

Before proceeding with the RAAS setup, ensure that Postgres and Redis are accessible. In my case, I still have the Linux firewall enabled on these machines; use the commands below to add firewall rule exceptions on the respective nodes. Again, I am leveraging salt to run the commands on the remote nodes:

salt labpostgres cmd.run "firewall-cmd --zone=public --add-port=5432/tcp --permanent && firewall-cmd --reload"

salt labredis cmd.run "firewall-cmd --zone=public --add-port=6379/tcp --permanent && firewall-cmd --reload"

salt labraas cmd.run "firewall-cmd --zone=public --add-port=443/tcp --permanent && firewall-cmd --reload"
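
Once the rules are in place, reachability can be checked from the RAAS node before installing RAAS. This is a minimal sketch, assuming bash and the coreutils `timeout` command are available there; `port_open` is a hypothetical helper, and the addresses and ports are the ones set in sse_settings.yaml in this walkthrough.

```shell
#!/bin/sh
# port_open HOST PORT: succeed if a TCP connection can be opened within 2 seconds.
# Relies on bash's /dev/tcp pseudo-device and the coreutils timeout command.
port_open() {
    timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Postgres and Redis endpoints as configured in sse_settings.yaml.
for target in 172.16.120.111:5432 172.16.120.105:6379; do
    host="${target%:*}"
    port="${target#*:}"
    if port_open "$host" "$port"; then
        echo "$host:$port reachable"
    else
        echo "$host:$port NOT reachable"
    fi
done
```

This only confirms that the TCP port accepts connections; it does not verify credentials or TLS.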

Now, proceed with the RAAS install:

salt labraas state.highstate

Sample output:

[root@labmaster sse]# salt labraas state.highstate

labraas:

----------

ID: install_xmlsec

Function: pkg.installed

Result: True

Comment: 2 targeted packages were installed/updated.

The following packages were already installed: openssl, openssl-libs, xmlsec1, xmlsec1-openssl, libxslt, libtool-ltdl

Started: 20:36:16.715011

Duration: 39176.806 ms

Changes:

----------

singleton-manager-i18n:

----------

new:

0.6.0-5.el7.x86_64_1

old:

ssc-translation-bundle:

----------

new:

8.6.2-2.ph3.noarch_1

old:

----------

ID: install_raas

Function: pkg.installed

Result: True

Comment: The following packages were installed/updated: raas

Started: 20:36:55.942737

Duration: 35689.868 ms

Changes:

----------

raas:

----------

new:

8.6.2.11-1.el7

old:

----------

ID: install_raas

Function: cmd.run

Name: systemctl daemon-reload

Result: True

Comment: Command "systemctl daemon-reload" run

Started: 20:37:31.638377

Duration: 138.354 ms

Changes:

----------

pid:

31230

retcode:

0

stderr:

stdout:

----------

ID: create_pki_raas_path_eapi

Function: file.directory

Name: /etc/pki/raas/certs

Result: True

Comment: The directory /etc/pki/raas/certs is in the correct state

Started: 20:37:31.785757

Duration: 11.788 ms

Changes:

----------

ID: create_ssl_certificate_eapi

Function: module.run

Name: tls.create_self_signed_cert

Result: True

Comment: Module function tls.create_self_signed_cert executed

Started: 20:37:31.800719

Duration: 208.431 ms

Changes:

----------

ret:

Created Private Key: "/etc/pki/raas/certs/raas.lab.ntitta.in.key." Created Certificate: "/etc/pki/raas/certs/raas.lab.ntitta.in.crt."

----------

ID: set_certificate_permissions_eapi

Function: file.managed

Name: /etc/pki/raas/certs/raas.lab.ntitta.in.crt

Result: True

Comment:

Started: 20:37:32.009536

Duration: 5.967 ms

Changes:

----------

group:

raas

mode:

0400

user:

raas

----------

ID: set_key_permissions_eapi

Function: file.managed

Name: /etc/pki/raas/certs/raas.lab.ntitta.in.key

Result: True

Comment:

Started: 20:37:32.015921

Duration: 6.888 ms

Changes:

----------

group:

raas

mode:

0400

user:

raas

----------

ID: raas_owns_raas

Function: file.directory

Name: /etc/raas/

Result: True

Comment: The directory /etc/raas is in the correct state

Started: 20:37:32.023200

Duration: 4.485 ms

Changes:

----------

ID: configure_raas

Function: file.managed

Name: /etc/raas/raas

Result: True

Comment: File /etc/raas/raas updated

Started: 20:37:32.028374

Duration: 132.226 ms

Changes:

----------

diff:

---

+++

@@ -1,49 +1,47 @@

...

...

+

----------

ID: save_credentials

Function: cmd.run

Name: /usr/bin/raas save_creds 'postgres={"username":"sseuser","password":"secure123"}' 'redis={"password":"secure1234"}'

Result: True

Comment: All files in creates exist

Started: 20:37:32.163432

Duration: 2737.346 ms

Changes:

----------

ID: set_secconf_permissions

Function: file.managed

Name: /etc/raas/raas.secconf

Result: True

Comment: File /etc/raas/raas.secconf exists with proper permissions. No changes made.

Started: 20:37:34.902143

Duration: 5.949 ms

Changes:

----------

ID: ensure_raas_pki_directory

Function: file.directory

Name: /etc/raas/pki

Result: True

Comment: The directory /etc/raas/pki is in the correct state

Started: 20:37:34.908558

Duration: 4.571 ms

Changes:

----------

ID: change_owner_to_raas

Function: file.directory

Name: /etc/raas/pki

Result: True

Comment: The directory /etc/raas/pki is in the correct state

Started: 20:37:34.913566

Duration: 5.179 ms

Changes:

----------

ID: /usr/sbin/ldconfig

Function: cmd.run

Result: True

Comment: Command "/usr/sbin/ldconfig" run

Started: 20:37:34.919069

Duration: 32.018 ms

Changes:

----------

pid:

31331

retcode:

0

stderr:

stdout:

----------

ID: start_raas

Function: service.running

Name: raas

Result: True

Comment: check_cmd determined the state succeeded

Started: 20:37:34.952926

Duration: 16712.726 ms

Changes:

----------

raas:

True

----------

ID: restart_raas_and_confirm_connectivity

Function: cmd.run

Name: salt-call service.restart raas

Result: True

Comment: check_cmd determined the state succeeded

Started: 20:37:51.666446

Duration: 472.205 ms

Changes:

----------

ID: get_initial_objects_file

Function: file.managed

Name: /tmp/sample-resource-types.raas

Result: True

Comment: File /tmp/sample-resource-types.raas updated

Started: 20:37:52.139370

Duration: 180.432 ms

Changes:

----------

group:

raas

mode:

0640

user:

raas

----------

ID: import_initial_objects

Function: cmd.run

Name: /usr/bin/raas dump --insecure --server https://localhost --auth root:salt --mode import < /tmp/sample-resource-types.raas

Result: True

Comment: Command "/usr/bin/raas dump --insecure --server https://localhost --auth root:salt --mode import < /tmp/sample-resource-types.raas" run

Started: 20:37:52.320146

Duration: 24566.332 ms

Changes:

----------

pid:

31465

retcode:

0

stderr:

stdout:

----------

ID: raas_service_restart

Function: cmd.run

Name: systemctl restart raas

Result: True

Comment: Command "systemctl restart raas" run

Started: 20:38:16.887666

Duration: 2257.183 ms

Changes:

----------

pid:

31514

retcode:

0

stderr:

stdout:

Summary for labraas

-------------

Succeeded: 19 (changed=12)

Failed: 0

-------------

Total states run: 19

Total run time: 122.349 s
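When a highstate like the one above is run from a script rather than read by eye, the Summary block is the quickest thing to check. The sketch below is not part of the installer: `highstate_failed_count` is a hypothetical helper name, and it assumes the summary lines look like the `Failed: 0` line in the output above. It adds up the Failed counts from every minion summary it reads on standard input.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with Salt or the SaltConfig installer):
# sum the "Failed: N" counts found in highstate output read from stdin.
highstate_failed_count() {
    awk '/^[ \t]*Failed:/ { total += $2 } END { print total + 0 }'
}
```

For example, `salt labraas state.highstate | highstate_failed_count` prints a single number; anything other than 0 means the full output needs a closer look.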

salt labmaster state.highstate

output:

[root@labmaster sse]# salt labmaster state.highstate

Authentication error occurred.

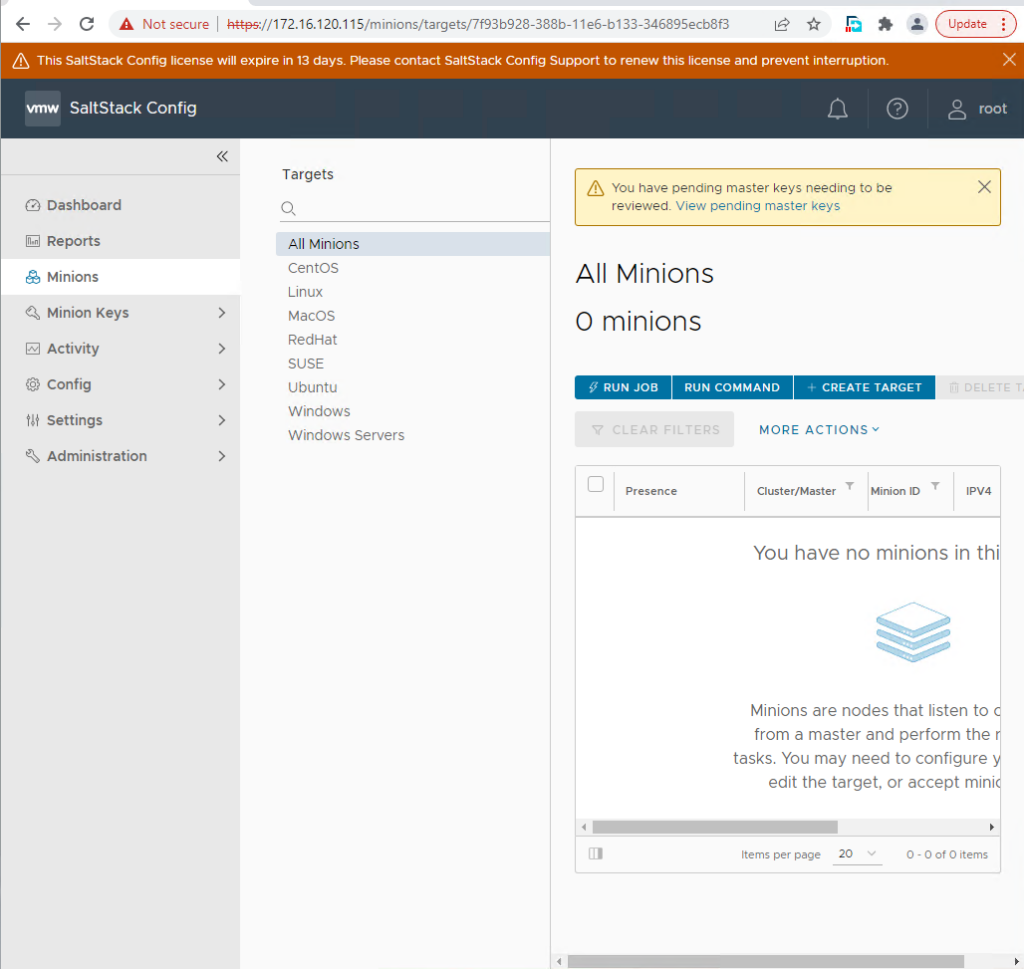

The authentication error above is expected. Now we log in to RAAS via a web browser:

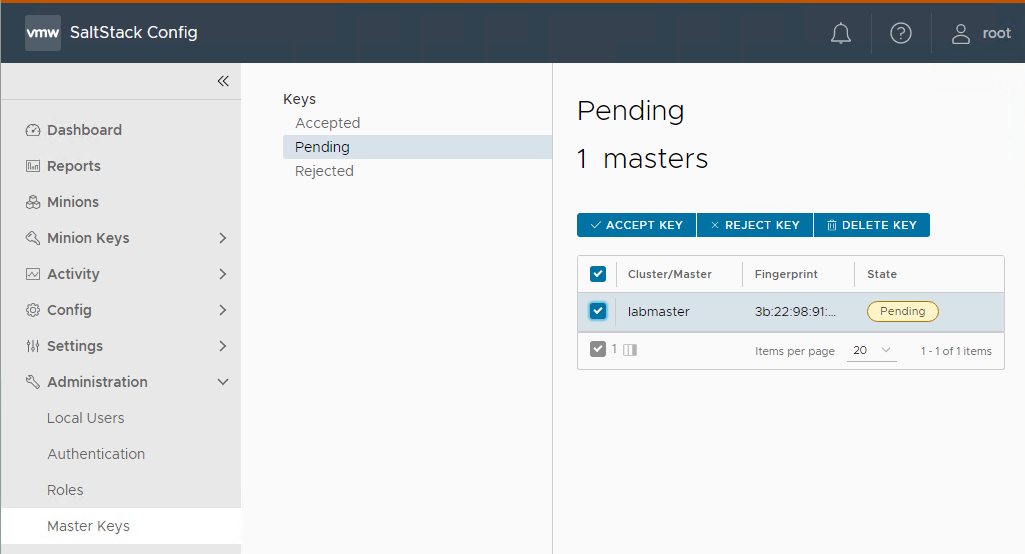

Accept the minion and master keys, and all the minions now show up:

You now have salt-config/salt enterprise installed successfully.

If the Postgres or RAAS highstate fails with the errors below, download a newer version of the salt-config tar files from VMware (there are issues with the init.sls state files in 8.5 and older versions).

----------

ID: create_ssl_certificate

Function: module.run

Name: tls.create_self_signed_cert

Result: False

Comment: Module function tls.create_self_signed_cert threw an exception. Exception: [Errno 2] No such file or directory: '/etc/pki/postgres/certs/sdb://osenv/PG_CERT_CN.key'

Started: 17:11:56.347565

Duration: 297.925 ms

Changes:

----------

ID: create_ssl_certificate_eapi

Function: module.run

Name: tls.create_self_signed_cert

Result: False

Comment: Module function tls.create_self_signed_cert threw an exception. Exception: [Errno 2] No such file or directory: '/etc/pki/raas/certs/sdb://osenv/SSE_CERT_CN.key'

Started: 20:26:32.061862

Duration: 42.028 ms

Changes:

----------

You can work around the issue by hardcoding the full paths for the pg cert and raas cert in the init.sls files.

ID: create_ssl_certificate

Function: module.run

Name: tls.create_self_signed_cert

Result: False

Comment: Module function tls.create_self_signed_cert is not available

Started: 16:11:55.436579

Duration: 932.506 ms

Changes:

Cause: prerequisites are not installed. python36-pyOpenSSL and python36-cryptography must be installed on all nodes that tls.create_self_signed_cert is targeted against.
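Because the two packages are needed on every node where tls.create_self_signed_cert runs, they can be pushed out from the master in one go with Salt's pkg.install function. A minimal sketch, assuming the package names above are available from the repositories already enabled on the minions; `install_tls_prereqs` and the `SALT_CMD` variable are hypothetical names introduced here so the command can be previewed with `SALT_CMD=echo` before running it for real.

```shell
#!/bin/sh
# Hypothetical helper: install the TLS prerequisites on the targeted minions.
# SALT_CMD defaults to "salt"; set SALT_CMD=echo to only print the arguments.
install_tls_prereqs() {
    target="${1:-*}"
    ${SALT_CMD:-salt} "$target" pkg.install \
        pkgs='["python36-pyOpenSSL", "python36-cryptography"]'
}
```

Running `install_tls_prereqs '*'` on the master targets all minions; pass a narrower target such as `labraas` to limit it to one node.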

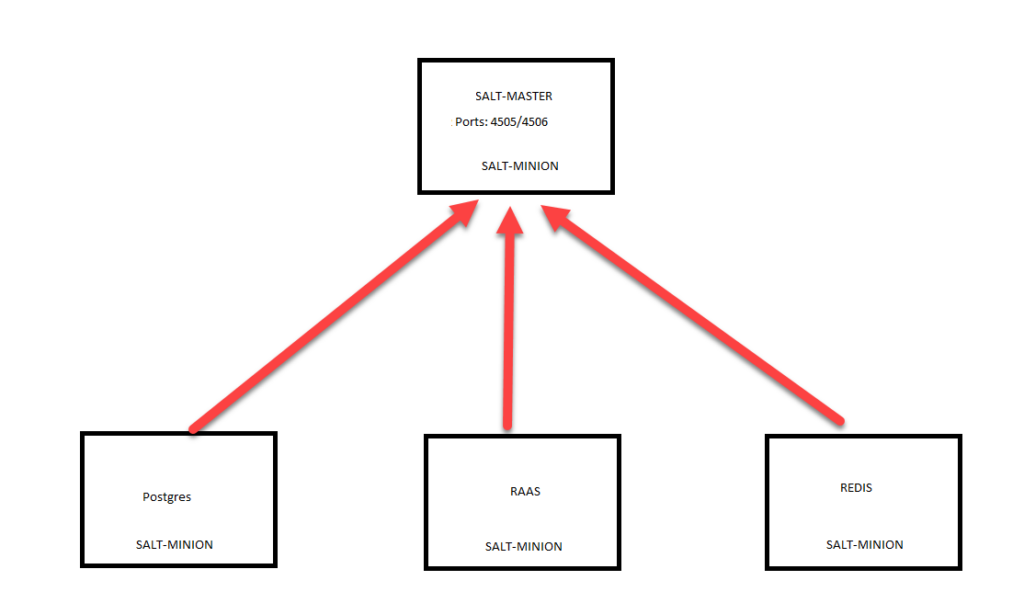

Topology: (master-minion communication)

OS: RHEL7.9/Centos7

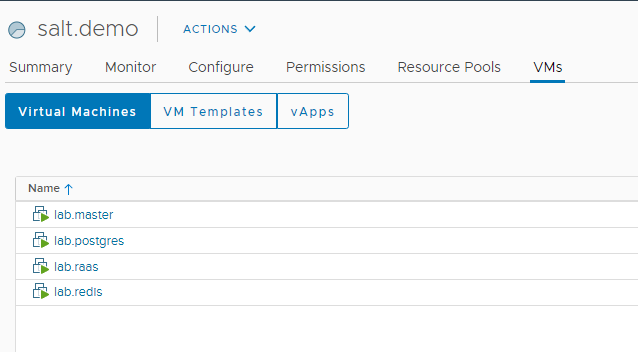

For the above topology you will need 4 machines. We will use the scripted installer to install RAAS, Redis and Postgres for us.

The VMs I am using:

Note: my RHEL machines are already registered with RHEL subscription manager.

We start by updating all the machines:

yum update -y

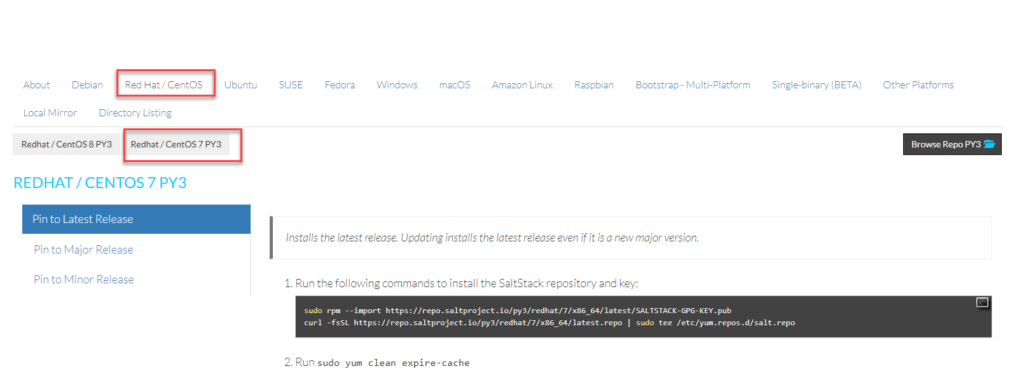

URL: https://repo.saltproject.io/

Navigate to the above URL and select the correct repository for your OS:

Install the repository on all four machines:

sudo rpm --import https://repo.saltproject.io/py3/redhat/7/x86_64/latest/SALTSTACK-GPG-KEY.pub

curl -fsSL https://repo.saltproject.io/py3/redhat/7/x86_64/latest.repo | sudo tee /etc/yum.repos.d/salt.repo

Example output:

Clear the expired cache (run on all 4 machines):

sudo yum clean expire-cache

We now install salt-master on the master VM:

sudo yum install salt-master

Press y to continue.

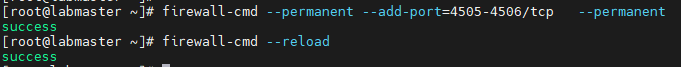

The Salt master uses ports 4505-4506, so we add a firewall rule to allow the traffic (run the below only on the master):

firewall-cmd --permanent --add-port=4505-4506/tcp

firewall-cmd --reload

Enable and start services:

sudo systemctl enable salt-master && sudo systemctl start salt-master

On all 4 machines, we install salt-minion:

yum install salt-minion -y

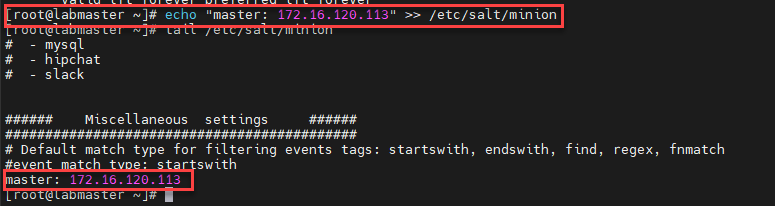

we will now need to edit the minion configuration file and point it to the salt-master IP. (this needs to be done on all nodes)

I use the below command to add the master IP the config file:

echo "master: 172.16.120.113" >> /etc/salt/minionEG output:

Enable and start the minion: (run on all nodes)

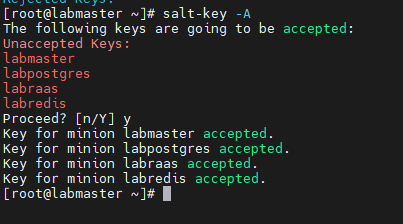

sudo systemctl enable salt-minion && sudo systemctl start salt-minionOn a successful connection, when you run salt-key -L on the master, you should see all the minions listed:

salt-key -L

Accept minion keys:

salt-key -A

Test minions:

salt '*' test.ping

Master: /etc/salt/master

/etc/salt/master.d/*

minion: /etc/salt/minion

/etc/salt/minion.d/*

Master: /var/log/salt/master

Minion: /var/log/salt/minion
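The `echo ... >>` command used earlier appends a new `master:` line every time it is run. Since the minion also reads drop-in files from /etc/salt/minion.d/, an alternative is to keep the master address in a file of its own and overwrite it on each run. This is only a sketch: `set_minion_master` and the file name `master.conf` are hypothetical choices, and it assumes the minion keeps Salt's default include of `minion.d/*.conf` and has no conflicting `master:` entry in /etc/salt/minion.

```shell
#!/bin/sh
# Hypothetical helper: write the master address to a minion drop-in file.
# The file is overwritten, so running this twice leaves a single entry.
set_minion_master() {
    master_addr="$1"
    conf_file="${2:-/etc/salt/minion.d/master.conf}"
    mkdir -p "$(dirname "$conf_file")"
    printf 'master: %s\n' "$master_addr" > "$conf_file"
}
```

Usage on each node would be `set_minion_master 172.16.120.113`, followed by a restart of the salt-minion service so the change is picked up.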

minion logs:

Mar 04 12:05:26 xyzzzzy salt-minion[16137]: [ERROR ] Error while bringing up minion for multi-master. Is master at 172.16.120.113 responding?

Cause: the minion is not able to communicate with the master. Either the master ports are not open, there is no master service running on that IP, or the network is unreachable.

The Salt Master has cached the public key for this node, this salt minion will wait for 10 seconds before attempting to re-authenticate

Cause: the minion keys are not accepted by the master.
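For the second message, the pending keys can be confirmed on the master before accepting them. A small sketch, assuming salt-key -L prints its usual section headers (Accepted Keys:, Denied Keys:, Unaccepted Keys:, Rejected Keys:) with one minion ID per line; `list_unaccepted_keys` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print only the minion IDs listed under
# "Unaccepted Keys:" in `salt-key -L` output read from stdin.
list_unaccepted_keys() {
    awk '
        /^Unaccepted Keys:/ { in_section = 1; next }  # start of the section
        /Keys:$/            { in_section = 0 }        # any other header ends it
        in_section && NF    { print $1 }              # non-empty lines are IDs
    '
}
```

On the master, `salt-key -L | list_unaccepted_keys` shows what is waiting; each ID can then be accepted with `salt-key -a <id>`, or all of them at once with `salt-key -A` as shown earlier.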

Greetings!!,

I am writing this based on a popular request by partners.

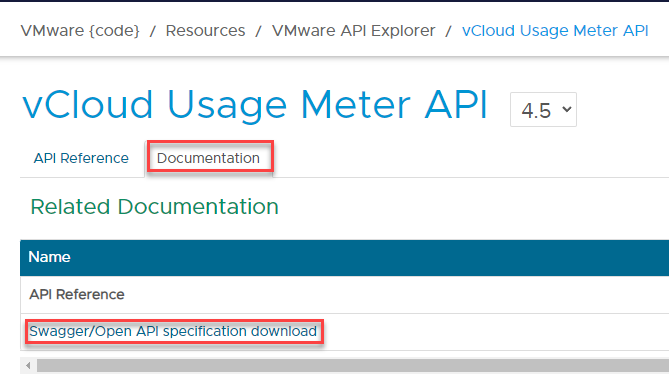

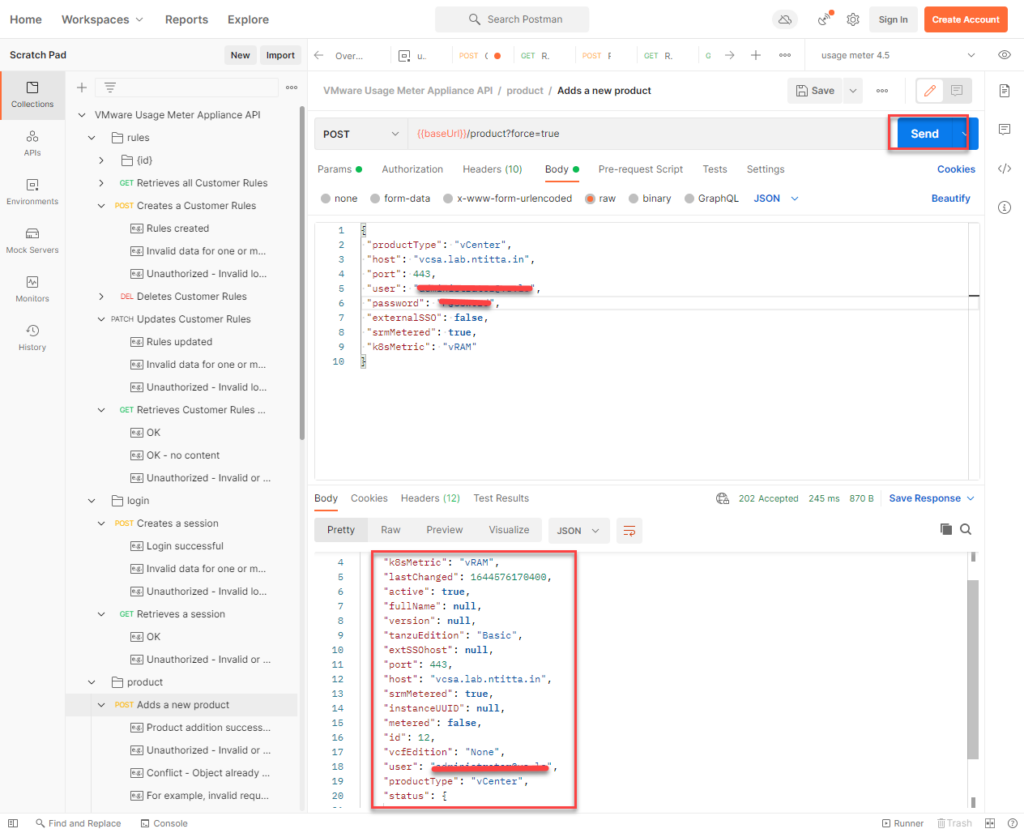

Usage meter API guide: https://developer.vmware.com/apis/1206/vcloud-usage-meter

usage meter OpenAPI specification: Click here to download JSON or use the below:

https://vdc-download.vmware.com/vmwb-repository/dcr-public/d61291b6-4397-44be-9b34-f046ada52f46/55e49229-0015-43d7-8655-b164861f7e0d/api_spec_4.5.json

You can find the OpenAPI specification on the API guide link > Documentation:

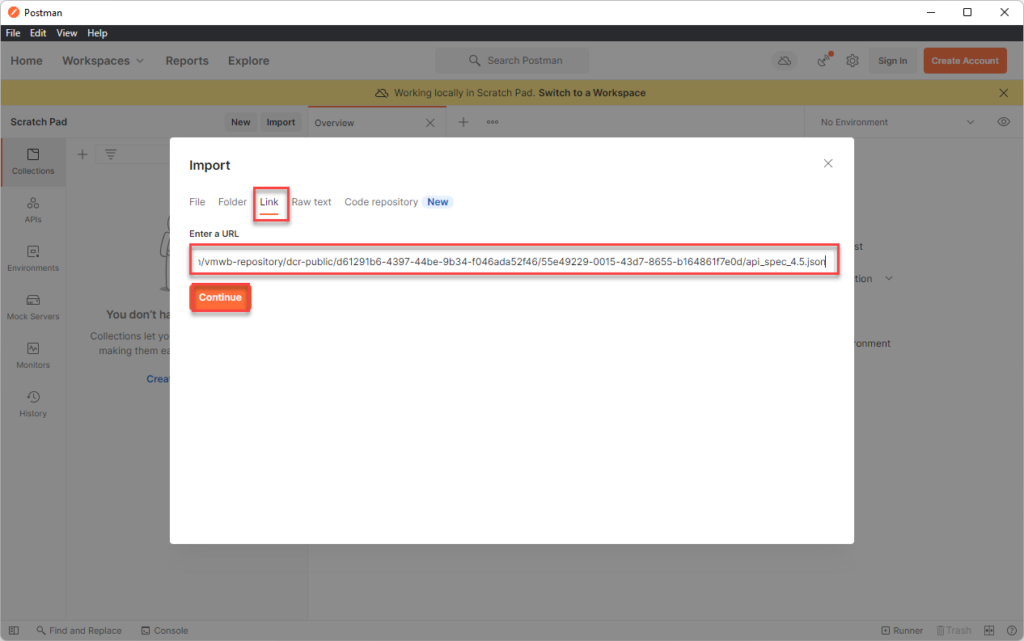

We will be using this JSON with postman.

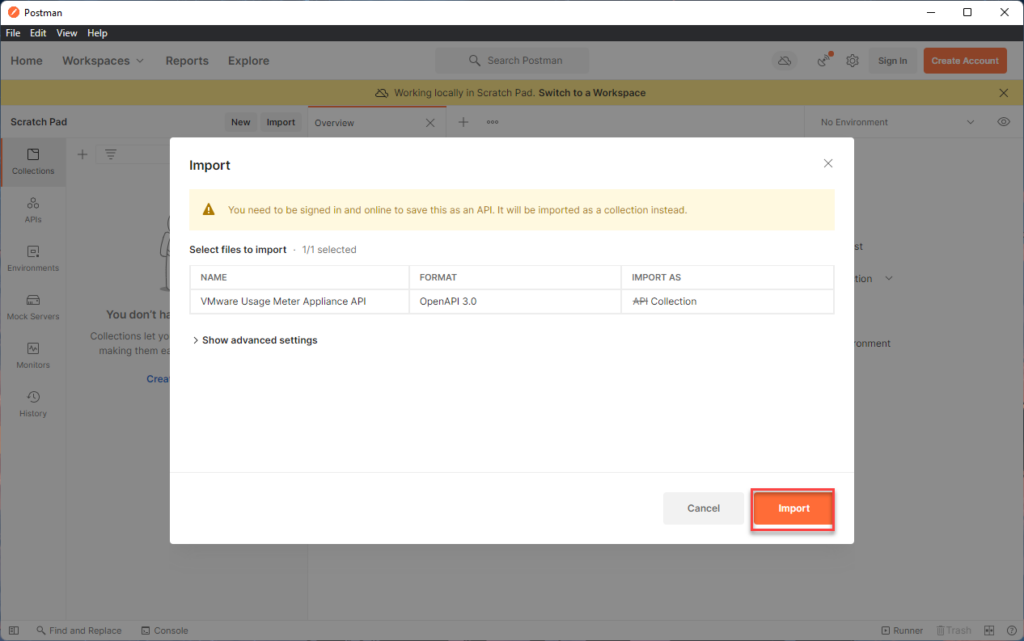

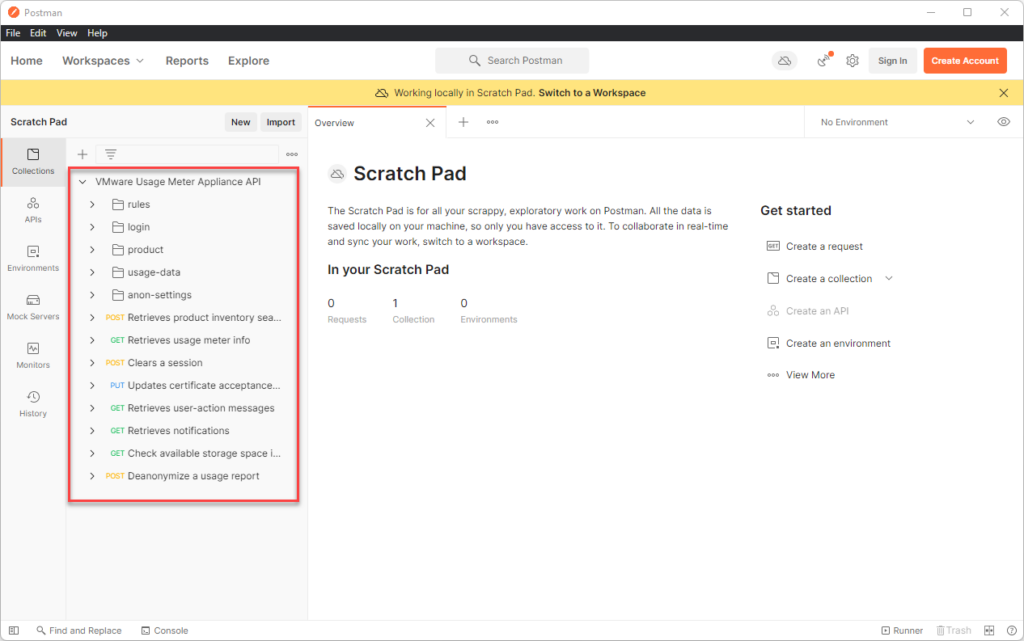

In Postman, navigate to File > Import.

Either import the JSON file downloaded in the previous step or use the URL. In my case I have used the URL.

Then click on Import.

You should now see the API collections listed like so:

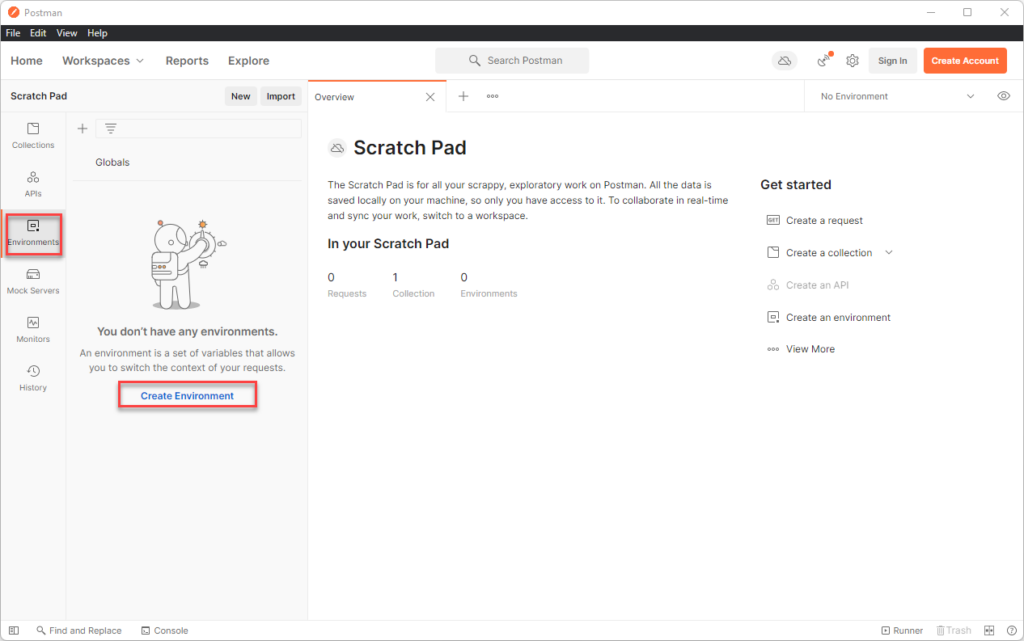

we will be using 2 variables

Navigate to Environment and create a new Env.

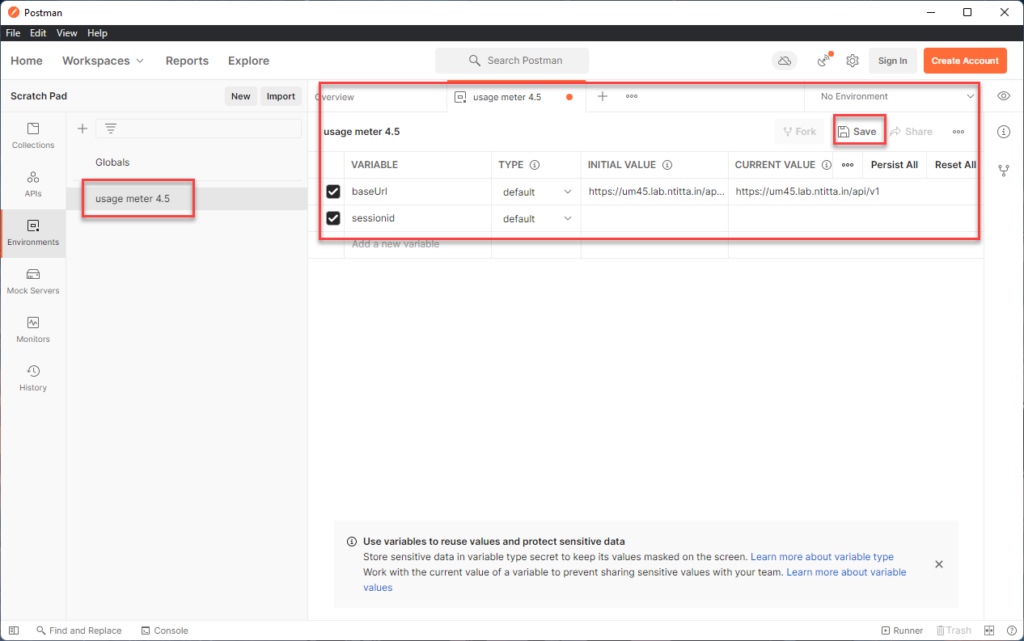

Enter a name of the enveronment and then in the variables, add baseUrl with value https://um_ip/api/v1

leave the sessionid blank for now. Ensure that you hit the save button here!!!

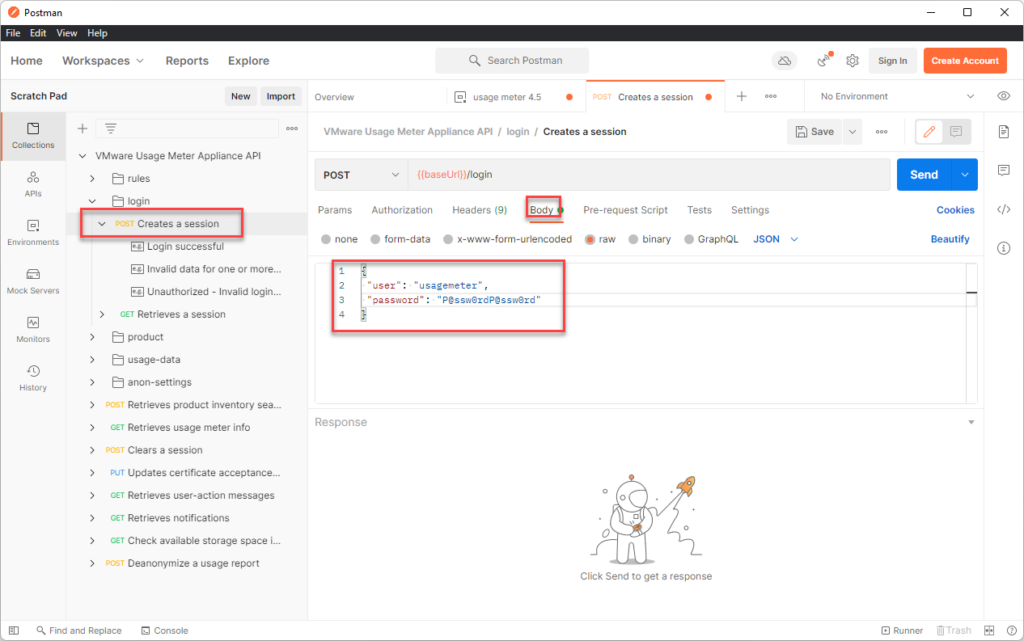

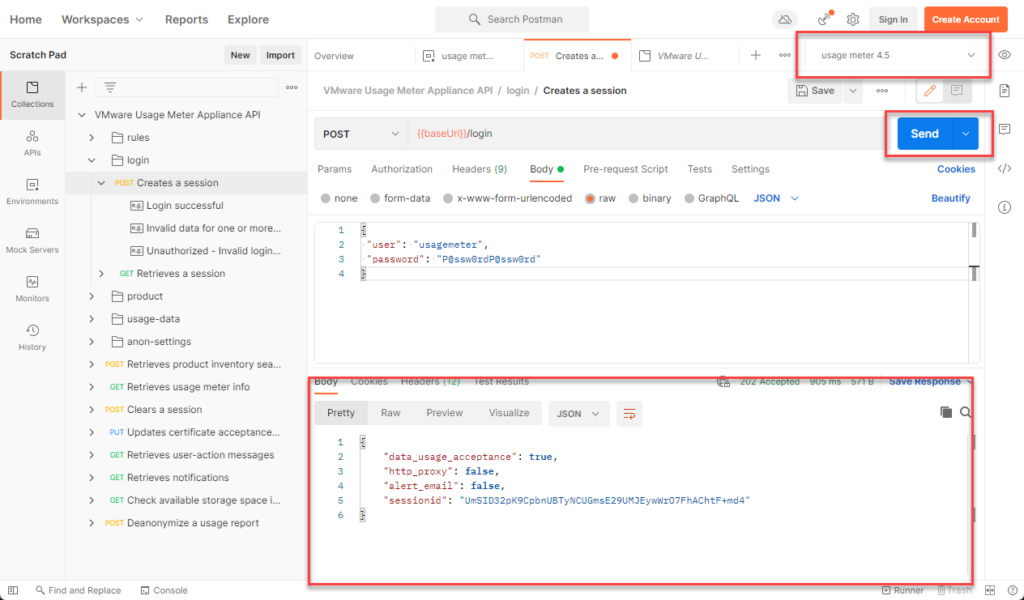

navigate back to collections > usage meter collection>login > create a session.

Click on body and enter the credentials

Switch the environment to usage meter 4.5 that we created in the previous step and hit send. we now have a sessionid

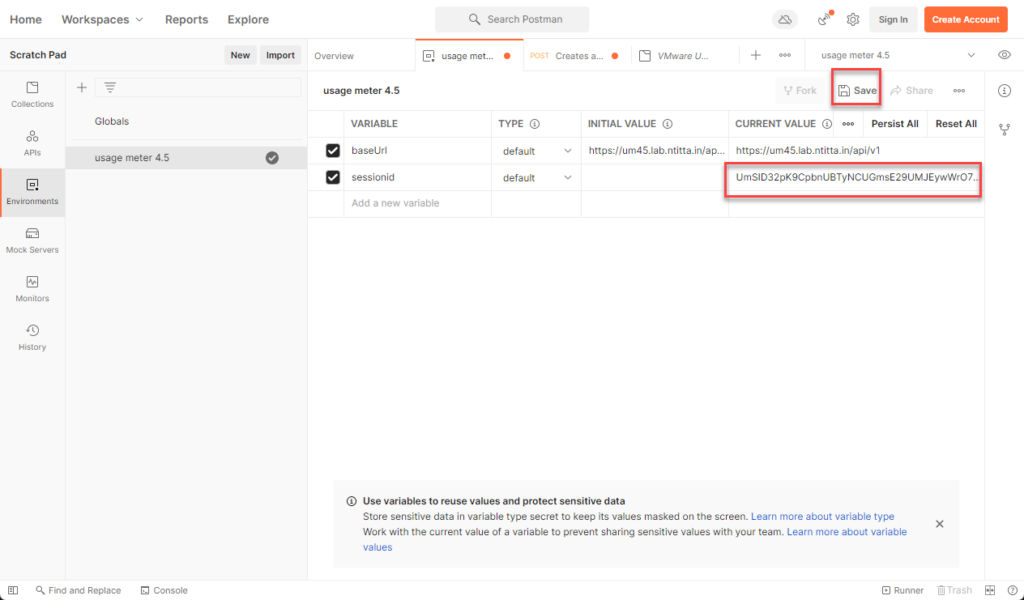

Go back in to environments > usage meter env > edit the sessionid field there and paste the sessionid you generated in the previous step: and ensure that you click on save!!

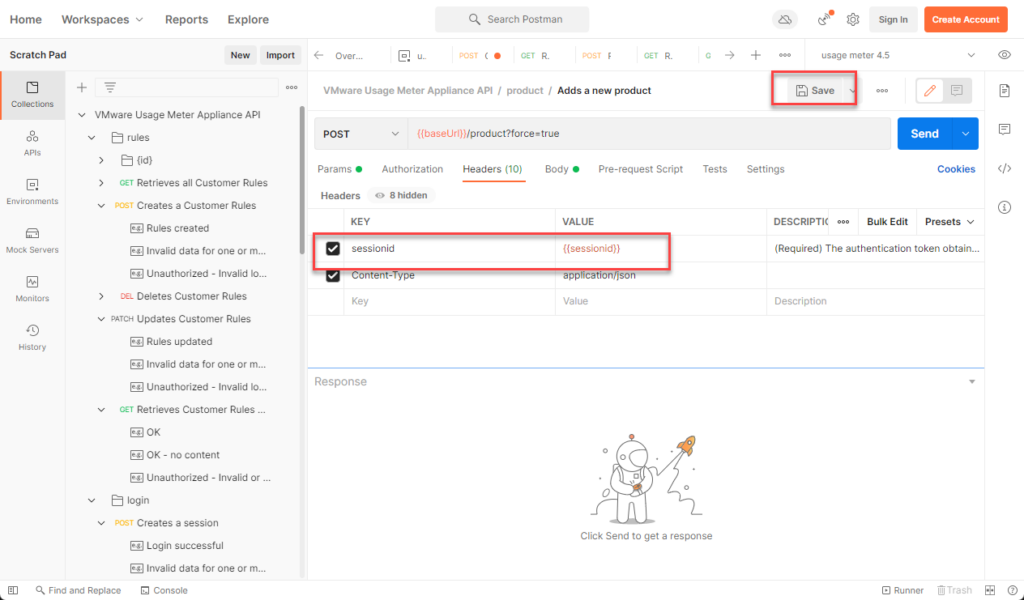

Navigate back to collection > usage meter collection > product> Adds a new product> header> repalce the value of sessionid with {{sessionid}} and hit save (you will need to add the sessionid variable for all api’s going forward with similar steps)

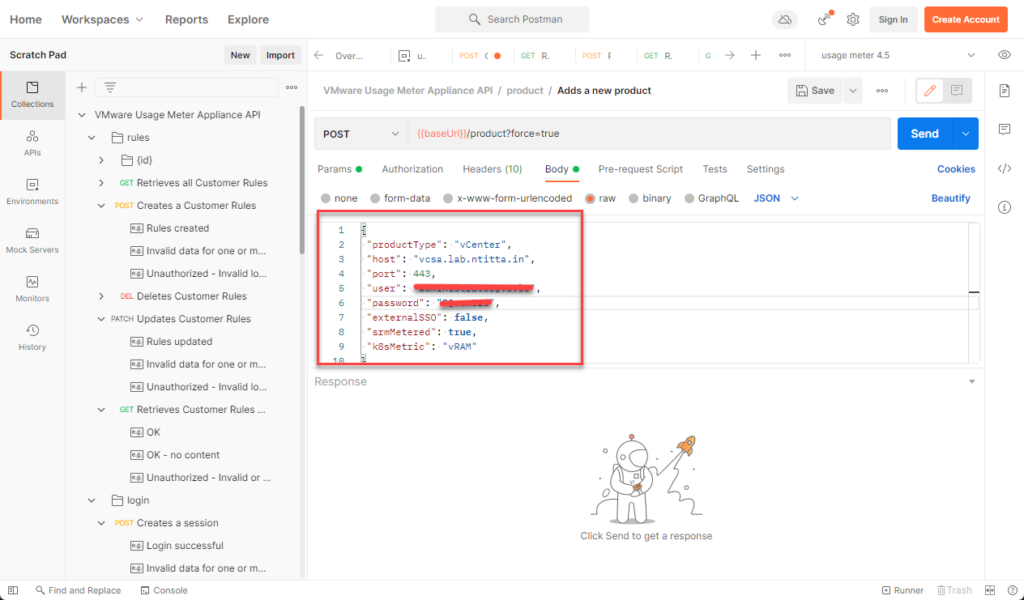

Switch to body and replace the raw data with the vCenter details:

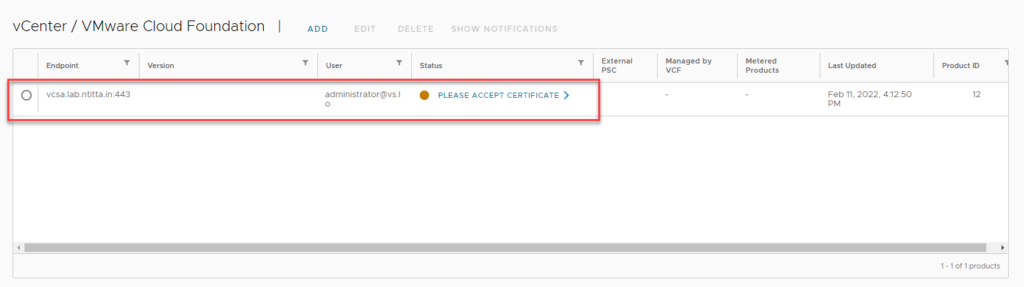

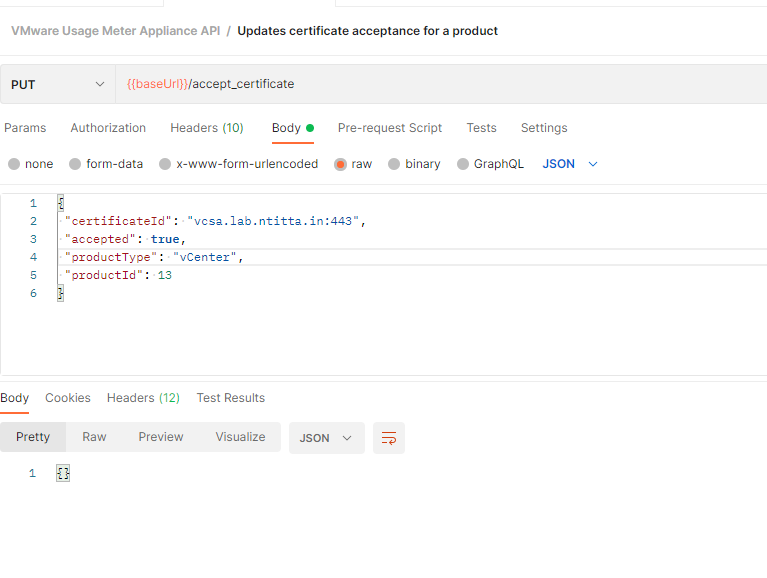

Hit send and the product should be added to usage meter:

Usage meter:

SRM collector logs are written in vccol_main.log

/opt/vmware/cloudusagemetering/var/logs/vccol_main.log

2021-12-22 17:30:24.159 ERROR --- [vCenter collector thread] c.v.um.vccollector.srm.SRMCollector : Unknown Failure HTTP transport error: org.bouncycastle.tls.TlsFatalAlert: certificate_unknown(46) :: https://srm02.ntitta.lab:443/drserver/vcdr/extapi/sdk

com.sun.xml.ws.client.ClientTransportException: HTTP transport error: org.bouncycastle.tls.TlsFatalAlert: certificate_unknown(46)

at com.sun.xml.ws.transport.http.client.HttpClientTransport.getOutput(HttpClientTransport.java:102)

at com.sun.xml.ws.transport.http.client.HttpTransportPipe.process(HttpTransportPipe.java:193)

at com.sun.xml.ws.transport.http.client.HttpTransportPipe.processRequest(HttpTransportPipe.java:115)

at com.sun.xml.ws.transport.DeferredTransportPipe.processRequest(DeferredTransportPipe.java:109)

at com.sun.xml.ws.api.pipe.Fiber.__doRun(Fiber.java:1106)

at com.sun.xml.ws.api.pipe.Fiber._doRun(Fiber.java:1020)

at com.sun.xml.ws.api.pipe.Fiber.doRun(Fiber.java:989)

at com.sun.xml.ws.api.pipe.Fiber.runSync(Fiber.java:847)

at com.sun.xml.ws.client.Stub.process(Stub.java:433)

at com.sun.xml.ws.client.sei.SEIStub.doProcess(SEIStub.java:161)

at com.sun.xml.ws.client.sei.SyncMethodHandler.invoke(SyncMethodHandler.java:78)

at com.sun.xml.ws.client.sei.SyncMethodHandler.invoke(SyncMethodHandler.java:62)

at com.sun.xml.ws.client.sei.SEIStub.invoke(SEIStub.java:131)

at com.sun.proxy.$Proxy41.srmLoginLocale(Unknown Source)

at com.vmware.um.vccollector.srm.api.SrmApiClient.openSrmPort(SrmApiClient.java:116)

at com.vmware.um.vccollector.srm.SRMCollector.getSrmLicenses(SRMCollector.java:312)

at com.vmware.um.vccollector.srm.SRMCollector.collect(SRMCollector.java:119)

at com.vmware.um.vccollector.srm.SRMCollectionStage.collectUsage(SRMCollectionStage.java:45)

at com.vmware.um.vccollector.VCCollector.collectStages(VCCollector.java:276)

at com.vmware.um.vccollector.VCCollector.collect(VCCollector.java:202)

at com.vmware.um.vccollector.VCCollector.collect(VCCollector.java:32)

at com.vmware.um.collector.CollectionHelper.collectFromServer(CollectionHelper.java:1014)

at com.vmware.um.collector.CollectionHelper.collectFromServersWithReporting(CollectionHelper.java:1170)

at com.vmware.um.collector.CollectionHelper.collectWithReporting(CollectionHelper.java:990)

at com.vmware.um.collector.CollectionHelper.lambda$start$9(CollectionHelper.java:1518)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Unknown Source)

at java.base/java.util.concurrent.FutureTask.runAndReset(Unknown Source)

at java.base/java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)

at java.base/java.lang.Thread.run(Unknown Source)

Caused by: org.bouncycastle.tls.TlsFatalAlert: certificate_unknown(46)

at org.bouncycastle.jsse.provider.ProvSSLSocketDirect.checkServerTrusted(ProvSSLSocketDirect.java:135)

at org.bouncycastle.jsse.provider.ProvTlsClient$1.notifyServerCertificate(ProvTlsClient.java:360)

at org.bouncycastle.tls.TlsUtils.processServerCertificate(TlsUtils.java:4813)

at org.bouncycastle.tls.TlsClientProtocol.handleServerCertificate(TlsClientProtocol.java:784)

at org.bouncycastle.tls.TlsClientProtocol.handleHandshakeMessage(TlsClientProtocol.java:670)

at org.bouncycastle.tls.TlsProtocol.processHandshakeQueue(TlsProtocol.java:695)

at org.bouncycastle.tls.TlsProtocol.processRecord(TlsProtocol.java:584)

at org.bouncycastle.tls.RecordStream.readRecord(RecordStream.java:245)

at org.bouncycastle.tls.TlsProtocol.safeReadRecord(TlsProtocol.java:843)

at org.bouncycastle.tls.TlsProtocol.blockForHandshake(TlsProtocol.java:417)

at org.bouncycastle.tls.TlsClientProtocol.connect(TlsClientProtocol.java:88)

at org.bouncycastle.jsse.provider.ProvSSLSocketDirect.startHandshake(ProvSSLSocketDirect.java:445)

at org.bouncycastle.jsse.provider.ProvSSLSocketDirect.startHandshake(ProvSSLSocketDirect.java:426)

at java.base/sun.net.www.protocol.https.HttpsClient.afterConnect(Unknown Source)

at java.base/sun.net.www.protocol.https.AbstractDelegateHttpsURLConnection.connect(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.getOutputStream0(Unknown Source)

at java.base/sun.net.www.protocol.http.HttpURLConnection.getOutputStream(Unknown Source)

at java.base/sun.net.www.protocol.https.HttpsURLConnectionImpl.getOutputStream(Unknown Source)

at com.sun.xml.ws.transport.http.client.HttpClientTransport.getOutput(HttpClientTransport.java:89)

... 30 common frames omitted

Caused by: java.security.cert.CertificateException: There is no pinned certificate for this server and port

at com.vmware.um.common.certificates.PinnedCertificateTrustManager.checkServerTrusted(PinnedCertificateTrustManager.java:81)

at org.bouncycastle.jsse.provider.ImportX509TrustManager_7.checkServerTrusted(ImportX509TrustManager_7.java:56)

at org.bouncycastle.jsse.provider.ProvSSLSocketDirect.checkServerTrusted(ProvSSLSocketDirect.java:131)

... 48 common frames omitted

Workaround:

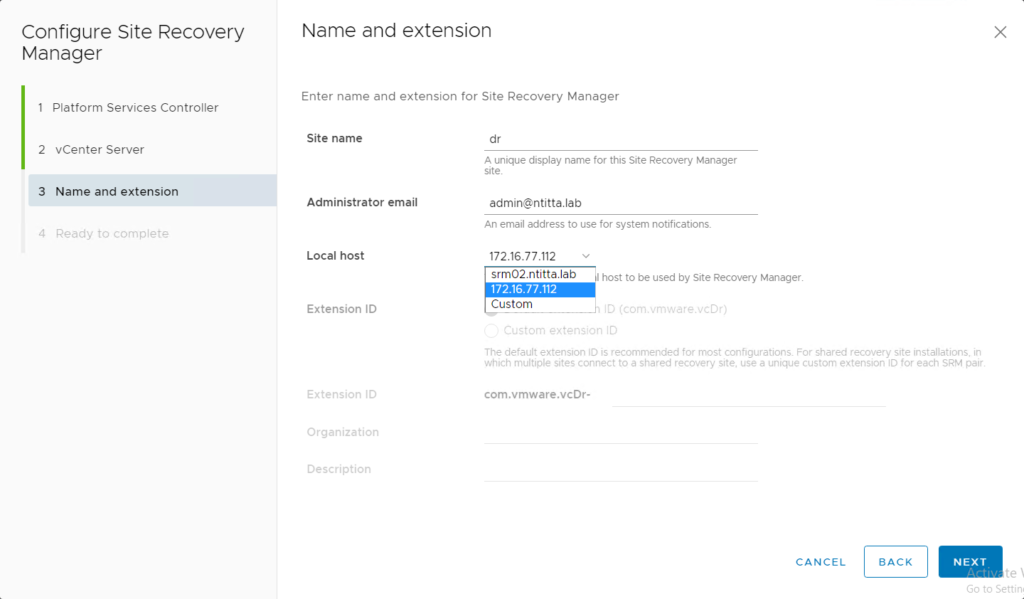

Re-register SRM to vCenter using an IP instead of the FQDN

Navigate to https://SRM:5480, log in as admin and re-register. Change the local host field to the IP address from the dropdown

error:

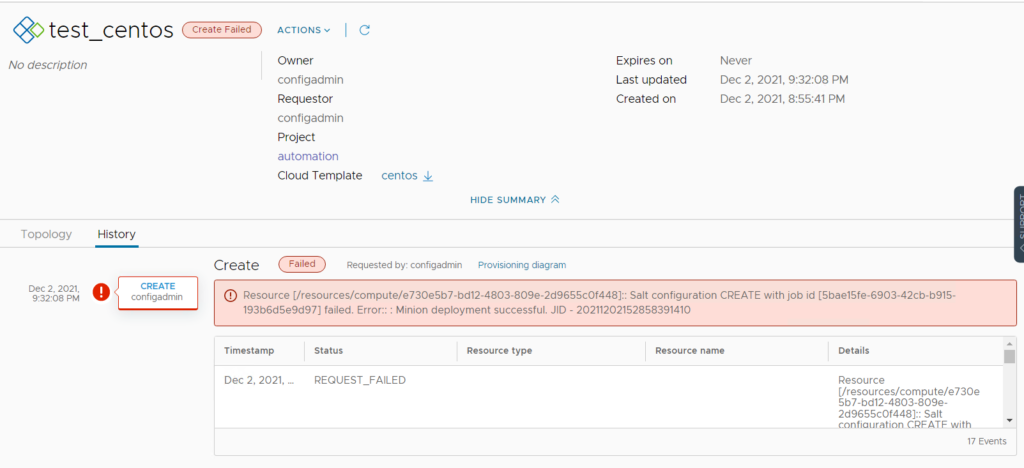

Resource [/resources/compute/e730e5b7-bd12-4803-809e-2d9655c0f448]:: Salt configuration CREATE with job id [5bae15fe-6903-42cb-b915-193b6d5e9d97] failed. Error:: : Minion deployment successful. JID - 20211202152858391410

investigation:

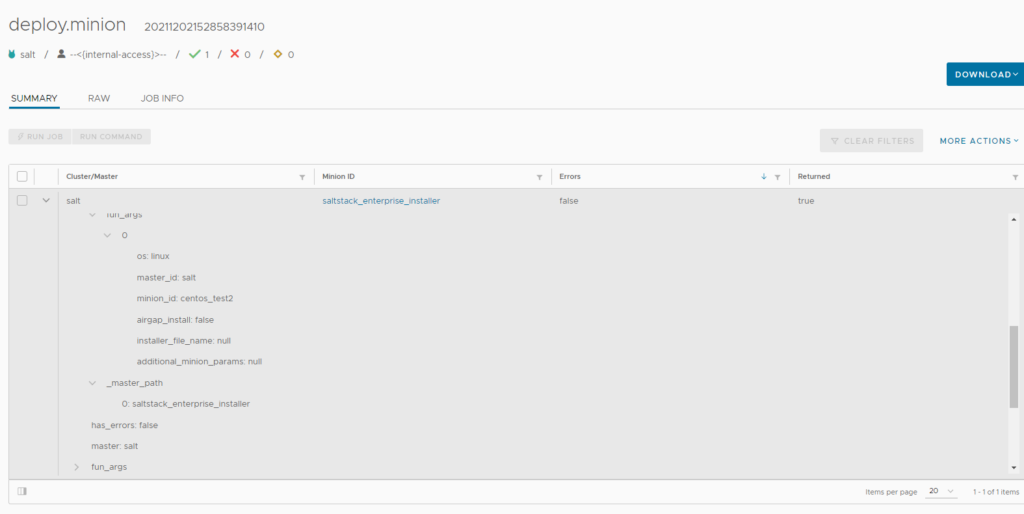

On salt-config > jobs , look for the deploy.minion task. This was a success in my case.

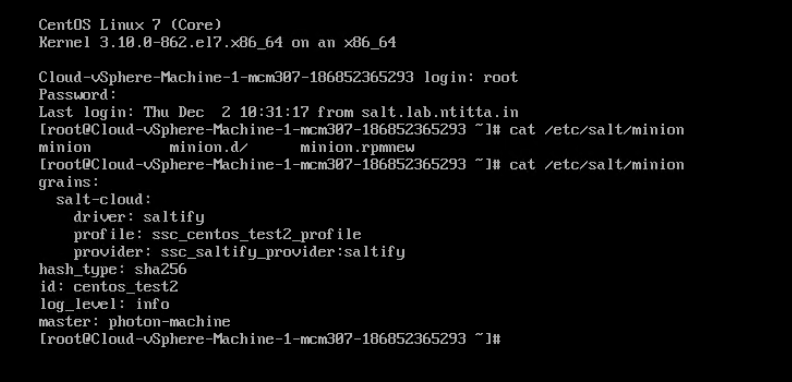

Re-ran the deployment, once the machine is provisioned and customization is successful (has IP when viewing VM from the vCenter view), open the console and I took a look at /etc/salt/minion

Here, the line: master: photon-machine is clearly wrong.

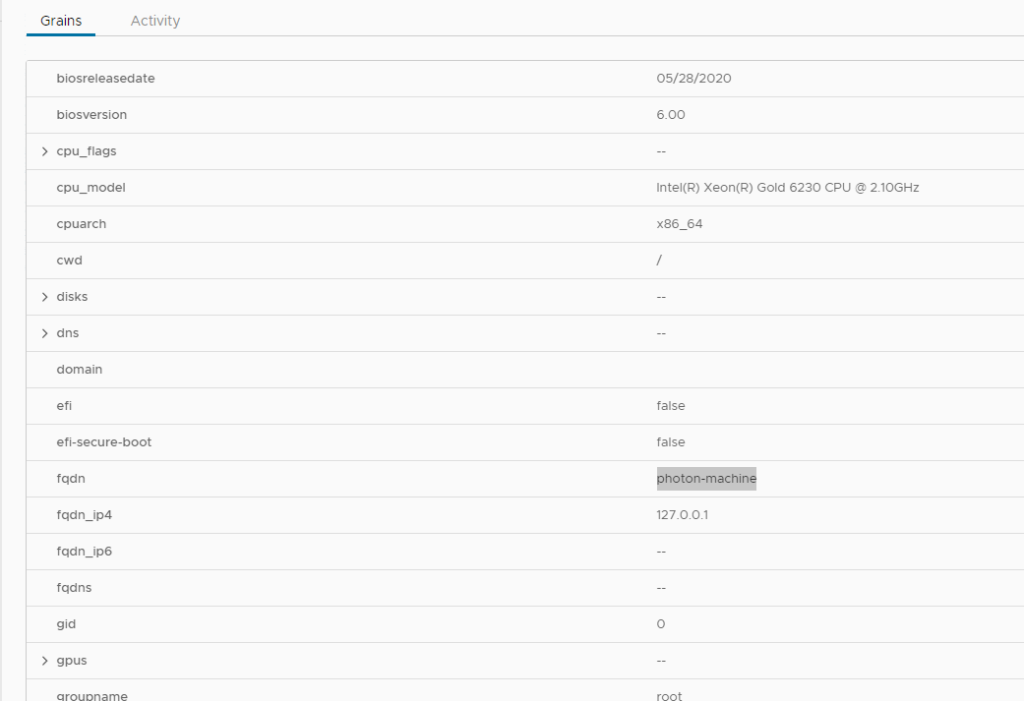

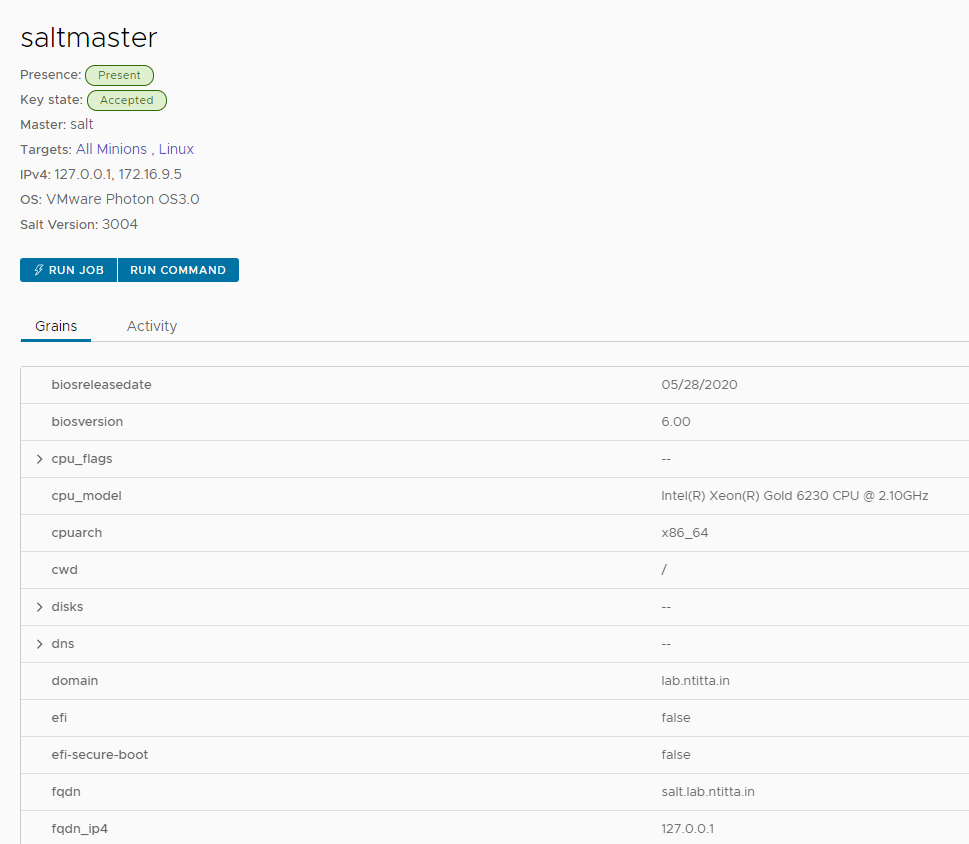

looking at the grains on the salt-config UI, we see the same grains:

Resolution:

restart salt-master and salt-minion service.

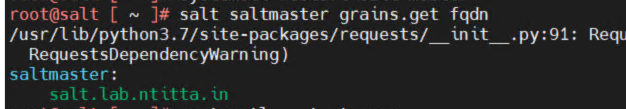

and then take a look at the grains on the CLI

salt saltmaster grains.get fqdn

Now, refresh grains

salt saltmaster saltutil.refresh_grainsand then take a look at the UI (in about a min time)

what that sorted, deploying new VM’s via VRA with the saltify driver now works!!

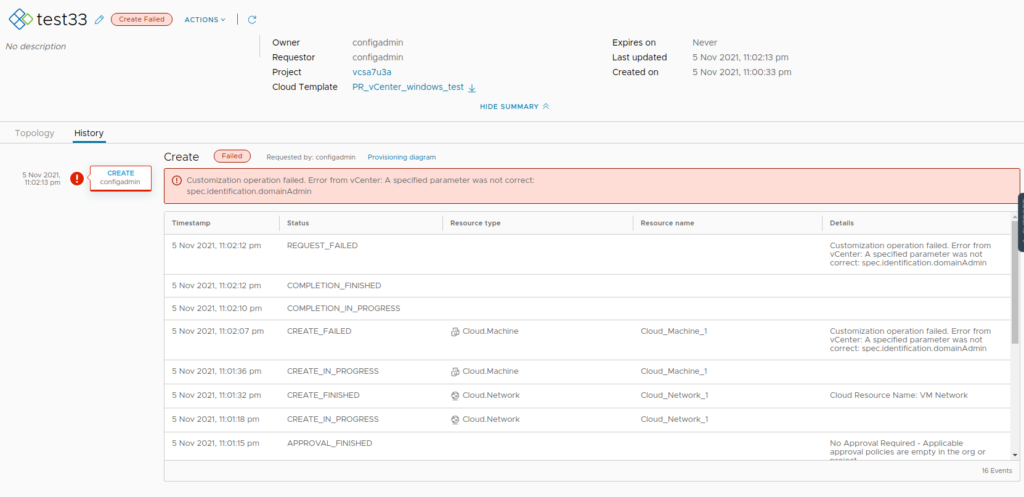

Windows-based deployment fails with error: “A specified parameter was not correct: spec.identification.domainAdmin”

Logs: vpxd.log on the vCenter

info vpxd[10775] [Originator@6876 sub=Default opID=68b9a06d] [VpxLRO] -- ERROR task-121185 -- vm-2123 -- vim.VirtualMachine.customize: vmodl.fault.InvalidArgument:

--> Result:

--> (vmodl.fault.InvalidArgument) {

--> faultCause = (vmodl.MethodFault) null,

--> faultMessage = <unset>,

--> invalidProperty = "spec.identification.domainAdmin"

--> msg = ""

--> }

...

...

...

--> identification = (vim.vm.customization.Identification) {

--> joinWorkgroup = <unset>,

--> joinDomain = "ntitta.lab",

--> domainAdmin = "",

--> domainAdminPassword = (vim.vm.customization.Password) {

--> value = (not shown),

--> plainText = true

Cause: There were changes made to the guest customization spec in 7.0u3a

Workaround:

For a blueprint that does not leverage domain join, navigate to Cloud Assembly > Network Profiles > open (the-network-profile-used-in-bp) > Networks > edit (vCenter_network_mapped)

Leave the domain field here blank.

Re-run the deployment; it now works:

vCenter collection fails with the error: “Failed customer rule collection - Could not find instanceUuid of the VM with moref: vm-xxxx from VC server with id: x.Could not find instanceUuid”

Cause: Usage Meter receives an error when attempting to query the UUID of the VM moref seen in the error.

In the vCenter inventory, you will also see stale/orphaned/inaccessible machines.

Resolution: Unregister/remove the invalid VMs from the vCenter inventory and wait for the next collection cycle (collection occurs every hour).

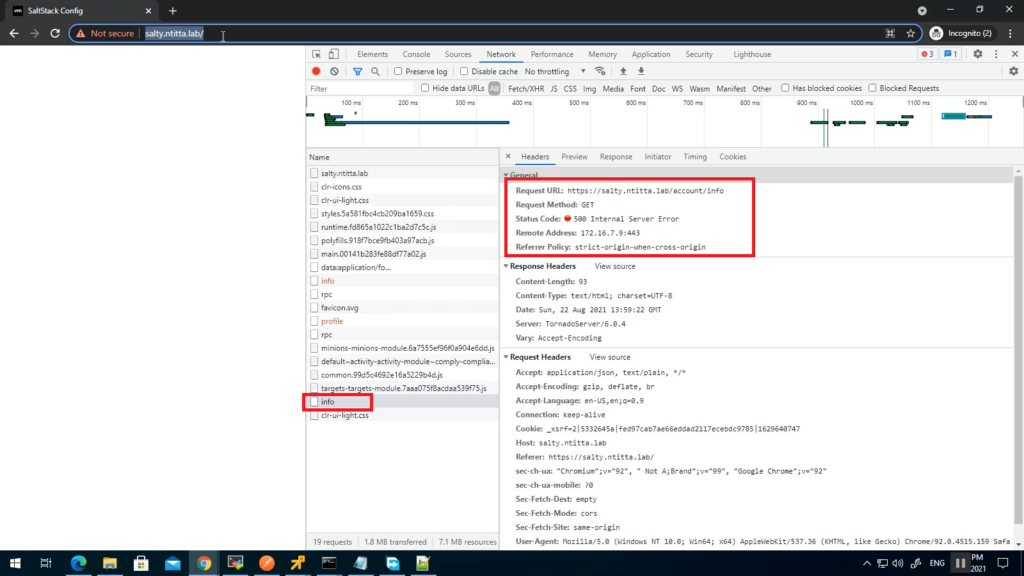

SaltConfig must be running version 8.5 and must be deployed via LCM.

If vRA is running on self-signed/local-CA/LCM-CA certificates, the saltstack UI will not load and you will see symptoms similar to these:

Specifically, a blank page when logging on to the salt UI, with the account/info API returning 500.

Logs:

less /var/log/raas/raas

Traceback (most recent call last):

File "requests/adapters.py", line 449, in send

File "urllib3/connectionpool.py", line 756, in urlopen

File "urllib3/util/retry.py", line 574, in increment

urllib3.exceptions.MaxRetryError: HTTPSConnectionPool(host='automation.ntitta.lab', port=443): Max retries exceeded with url: /csp/gateway/am/api/auth/discovery?username=service_type&state=aHR0cHM6Ly9zYWx0eS5udGl0dGEubGFiL2lkZW50aXR5L2FwaS9jb3JlL2F1dGhuL2NzcA%3D%3D&redirect_uri=https%3A%2F%2Fsalty.ntitta.lab%2Fidentity%2Fapi%2Fcore%2Fauthn%2Fcsp&client_id=ssc-HLwywt0h3Y (Caused by SSLError(SSLCertVerificationError(1, '[SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1076)')))

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "tornado/web.py", line 1680, in _execute

File "raas/utils/rest.py", line 153, in prepare

File "raas/utils/rest.py", line 481, in prepare

File "pop/contract.py", line 170, in __call__

File "/var/lib/raas/unpack/_MEIb1NPIC/raas/mods/vra/params.py", line 250, in get_login_url

verify=validate_ssl)

File "requests/api.py", line 76, in get

File "requests/api.py", line 61, in request

File "requests/sessions.py", line 542, in request

File "raven/breadcrumbs.py", line 341, in send

File "requests/sessions.py", line 655, in send

File "requests/adapters.py", line 514, in send

requests.exceptions.SSLError: HTTPSConnectionPool(host='automation.ntitta.lab', port=443): Max retries exceeded with url: /csp/gateway/am/api/auth/discovery?username=service_type&state=aHR0cHM6Ly9zYWx0eS5udGl0dGEubGFiL2lkZW50aXR5L2FwaS9jb3JlL2F1dGhuL2NzcA%3D%3D&redirect_uri=https%3A%2F%2Fsalty.ntitta.lab%2Fidentity%2Fapi%2Fcore%2Fauthn%2Fcsp&client_id=ssc-HLwywt0h3Y (Caused by SSLError(SSLCertVerificationError(1, '[SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1076)')))

2021-08-23 04:29:16,906 [tornado.access ][ERROR :2250][Webserver:59844] 500 POST /rpc (127.0.0.1) 1697.46ms

To resolve this, grab the root certificate of vRA and import it into the root store of the saltstack appliance:

Grab root certificate:

Cli method:

root@salty [ ~ ]# openssl s_client -showcerts -connect automation.ntitta.lab:443

CONNECTED(00000003)

depth=1 CN = vRealize Suite Lifecycle Manager Locker CA, O = VMware, C = IN

verify error:num=19:self signed certificate in certificate chain

---

Certificate chain

0 s:/CN=automation.ntitta.lab/OU=labs/O=GSS/L=BLR/ST=KA/C=IN

i:/CN=vRealize Suite Lifecycle Manager Locker CA/O=VMware/C=IN

-----BEGIN CERTIFICATE-----

MIID7jCCAtagAwIBAgIGAXmkBtDxMA0GCSqGSIb3DQEBCwUAMFMxMzAxBgNVBAMM

KnZSZWFsaXplIFN1aXRlIExpZmVjeWNsZSBNYW5hZ2VyIExvY2tlciBDQTEPMA0G

A1UECgwGVk13YXJlMQswCQYDVQQGEwJJTjAeFw0yMTA1MjUxNDU2MjBaFw0yMzA1

MjUxNDU2MjBaMGUxHjAcBgNVBAMMFWF1dG9tYXRpb24ubnRpdHRhLmxhYjENMAsG

A1UECwwEbGFiczEMMAoGA1UECgwDR1NTMQwwCgYDVQQHDANCTFIxCzAJBgNVBAgM

AktBMQswCQYDVQQGEwJJTjCCASIwDQYJKoZIhvcNAQEBBQADggEPADCCAQoCggEB

AJ+p/UsPFJp3WESJfUNlPWAUtYOUQ9cK5lZXBrEK79dtOwzJ8noUyKndO8i5wumC

tNJP8U3RjKbqu75UZH3LiwoHTOEkqhWufrn8gL7tQjtiQ0iAp2pP6ikxH2bXNAwF

Dh9/2CMjLhSN5mb7V5ehu4rP3/Niu19nT5iA1XMER3qR2tsRweV++78vrYFsKDS9

ePa+eGvMNrVaXvbYN75KnLEKbpkHGPg9P10zLbP/lPIskEGfgBMjS7JKOPxZZKX1

GczW/2sFq9OOr4bW6teWG3gt319N+ReNlUxnrxMDkKcWrml8EbeQMp4RmmtXX5Z4

JeVEATMS7O2CeoEN5E/rFFUCAwEAAaOBtTCBsjAdBgNVHQ4EFgQUz/pxN1bN/GxO

cQ/hcQCgBSdRqaUwHwYDVR0jBBgwFoAUYOI4DbX97wdcZa/pWivAMvnnDekwMAYD

VR0RBCkwJ4IXKi5hdXRvbWF0aW9uLm50aXR0YS5sYWKCDCoubnRpdHRhLmxhYjAO

BgNVHQ8BAf8EBAMCBaAwIAYDVR0lAQH/BBYwFAYIKwYBBQUHAwIGCCsGAQUFBwMB

MAwGA1UdEwEB/wQCMAAwDQYJKoZIhvcNAQELBQADggEBAA2KntXAyrY6DHho8FQc

R2GrHVCCWG3ugyPEq7S7tAabMIeSVhbPWsDaVLro5PlldK9FAUhinbxEwShIJfVP

+X1WOBUxwTQ7anfiagonMNotGtow/7f+fnHGO4Mfyk+ICo+jOp5DTDHGRmF8aYsP

5YGkOdpAb8SuT/pNerZie5WKx/3ZuUwsEDTqF3CYdqWQZSuDIlWRetECZAaq50hJ

c6kD/D1+cq2pmN/DI/U9RAfsvexkhdZaMbHdrlGzNb4biSvJ8HjJMH4uNLUN+Nyf

2MON41QKRRuzQn+ahq7X/K2BbxJTQUZGwbC+0CA6M79dQ1eVQui4d5GXmjutqFIo

Xwo=

-----END CERTIFICATE-----

1 s:/CN=vRealize Suite Lifecycle Manager Locker CA/O=VMware/C=IN

i:/CN=vRealize Suite Lifecycle Manager Locker CA/O=VMware/C=IN

-----BEGIN CERTIFICATE-----

MIIDiTCCAnGgAwIBAgIGAXmEbtiqMA0GCSqGSIb3DQEBCwUAMFMxMzAxBgNVBAMM

KnZSZWFsaXplIFN1aXRlIExpZmVjeWNsZSBNYW5hZ2VyIExvY2tlciBDQTEPMA0G

A1UECgwGVk13YXJlMQswCQYDVQQGEwJJTjAeFw0yMTA1MTkxMTQyMDdaFw0zMTA1

MTcxMTQyMDdaMFMxMzAxBgNVBAMMKnZSZWFsaXplIFN1aXRlIExpZmVjeWNsZSBN

YW5hZ2VyIExvY2tlciBDQTEPMA0GA1UECgwGVk13YXJlMQswCQYDVQQGEwJJTjCC

ASIwDQYJKoZIhvcNAQEBBQADggEPADCCAQoCggEBAK6S4ESddCC7BAl4MACpAeAm

1JBaw72NgeSOruS/ljpd1MyDd/AJjpIpdie2M0cweyGDaJ4+/C549lxQe0NAFsgh

62BG87klbhzvYja6aNKvE+b1EKNMPllFoWiCKJIxZOvTS2FnXjXZFZKMw5e+hf2R

JgPEww+KsHBqcWL3YODmD6NvBRCpY2rVrxUjqh00ouo7EC6EHzZoJSMoSwcEgIGz

pclYSPuEzdbNFKVtEQGrdt94xlAk04mrqP2O6E7Fd5EwrOw/+dsFt70qS0aEj9bQ

nk7GeRXhJynXxlEpgChCDEXQ3MWvLIRwOuMBxQq/W4B/ZzvQVzFwmh3S8UkPTosC

AwEAAaNjMGEwHQYDVR0OBBYEFGDiOA21/e8HXGWv6VorwDL55w3pMB8GA1UdIwQY

MBaAFGDiOA21/e8HXGWv6VorwDL55w3pMA8GA1UdEwEB/wQFMAMBAf8wDgYDVR0P

AQH/BAQDAgGGMA0GCSqGSIb3DQEBCwUAA4IBAQBqAjCBd+EL6koGogxd72Dickdm

ecK60ghLTNJ2wEKvDICqss/FopeuEVhc8q/vyjJuirbVwJ1iqKuyvANm1niym85i

fjyP6XaJ0brikMPyx+TSNma/WiDoMXdDviUuYZo4tBJC2DUPJ/0KDI7ysAsMTB0R

8Q7Lc3GlJS65AFRNIxkpHI7tBPp2W8tZQlVBe7PEcWMzWRjWZAvwDGfnNvUtX4iY

bHEVWSzpoVQUk1hcylecYeMSCzBGw/efuWayIFoSf7ZXFe0TAEOJySwkzGJB9n78

4Rq0ydikMT4EFHP5G/iFI2zsx2vZGNsAHCw7XSVFydqb/ekm/9T7waqt3fW4

-----END CERTIFICATE-----

---

Server certificate

subject=/CN=automation.ntitta.lab/OU=labs/O=GSS/L=BLR/ST=KA/C=IN

issuer=/CN=vRealize Suite Lifecycle Manager Locker CA/O=VMware/C=IN

---

No client certificate CA names sent

Peer signing digest: SHA512

Server Temp Key: ECDH, P-256, 256 bits

---

SSL handshake has read 2528 bytes and written 393 bytes

---

New, TLSv1/SSLv3, Cipher is ECDHE-RSA-AES256-GCM-SHA384

Server public key is 2048 bit

Secure Renegotiation IS supported

Compression: NONE

Expansion: NONE

No ALPN negotiated

SSL-Session:

Protocol : TLSv1.2

Cipher : ECDHE-RSA-AES256-GCM-SHA384

Session-ID: B06BE4668E5CCE713F1C1547F0917CC901F143CB13D06ED7A111784AAD10B2F6

Session-ID-ctx:

Master-Key: 75E8109DD84E2DD064088B44779C4E7FEDA8BE91693C5FC2A51D3F90B177F5C92B7AB638148ADF612EBEFDA30930DED4

Key-Arg : None

PSK identity: None

PSK identity hint: None

SRP username: None

TLS session ticket:

0000 - b9 54 91 b7 60 d4 18 d2-4b 72 55 db 78 e4 91 10 .T..`...KrU.x...

0010 - 1f 97 a0 35 31 16 21 db-8c 49 bf 4a a1 b4 59 ff ...51.!..I.J..Y.

0020 - 07 22 1b cc 20 d5 52 7a-52 84 17 86 b3 2a 7a ee .".. .RzR....*z.

0030 - 14 c3 9b 9f 8f 24 a7 a1-76 4d a2 4f bb d7 5a 21 .....$..vM.O..Z!

0040 - c9 a6 d0 be 3b 57 4a 4e-cd cc 9f a6 12 45 09 b5 ....;WJN.....E..

0050 - ca c4 c9 57 f5 ac 17 04-94 cb d0 0a 77 17 ac b8 ...W........w...

0060 - 8a b2 39 f1 78 70 37 6d-d0 bf f1 73 14 63 e8 86 ..9.xp7m...s.c..

0070 - 17 27 80 c1 3e fe 54 cf- .'..>.T.

Start Time: 1629788388

Timeout : 300 (sec)

Verify return code: 19 (self signed certificate in certificate chain)

From the above example,

Certificate chain 0 s:/CN=automation.ntitta.lab/OU=labs/O=GSS/L=BLR/ST=KA/C=IN <—-this is my vRA cert

i:/CN=vRealize Suite Lifecycle Manager Locker CA/O=VMware/C=IN <—-This is the root cert (Generated via LCM)

Create a new cert file with the contents of the root certificate.

cat root.crt

-----BEGIN CERTIFICATE-----

MIIDiTCCAnGgAwIBAgIGAXmEbtiqMA0GCSqGSIb3DQEBCwUAMFMxMzAxBgNVBAMM

KnZSZWFsaXplIFN1aXRlIExpZmVjeWNsZSBNYW5hZ2VyIExvY2tlciBDQTEPMA0G

A1UECgwGVk13YXJlMQswCQYDVQQGEwJJTjAeFw0yMTA1MTkxMTQyMDdaFw0zMTA1

MTcxMTQyMDdaMFMxMzAxBgNVBAMMKnZSZWFsaXplIFN1aXRlIExpZmVjeWNsZSBN

YW5hZ2VyIExvY2tlciBDQTEPMA0GA1UECgwGVk13YXJlMQswCQYDVQQGEwJJTjCC

ASIwDQYJKoZIhvcNAQEBBQADggEPADCCAQoCggEBAK6S4ESddCC7BAl4MACpAeAm

1JBaw72NgeSOruS/ljpd1MyDd/AJjpIpdie2M0cweyGDaJ4+/C549lxQe0NAFsgh

62BG87klbhzvYja6aNKvE+b1EKNMPllFoWiCKJIxZOvTS2FnXjXZFZKMw5e+hf2R

JgPEww+KsHBqcWL3YODmD6NvBRCpY2rVrxUjqh00ouo7EC6EHzZoJSMoSwcEgIGz

pclYSPuEzdbNFKVtEQGrdt94xlAk04mrqP2O6E7Fd5EwrOw/+dsFt70qS0aEj9bQ

nk7GeRXhJynXxlEpgChCDEXQ3MWvLIRwOuMBxQq/W4B/ZzvQVzFwmh3S8UkPTosC

AwEAAaNjMGEwHQYDVR0OBBYEFGDiOA21/e8HXGWv6VorwDL55w3pMB8GA1UdIwQY

MBaAFGDiOA21/e8HXGWv6VorwDL55w3pMA8GA1UdEwEB/wQFMAMBAf8wDgYDVR0P

AQH/BAQDAgGGMA0GCSqGSIb3DQEBCwUAA4IBAQBqAjCBd+EL6koGogxd72Dickdm

ecK60ghLTNJ2wEKvDICqss/FopeuEVhc8q/vyjJuirbVwJ1iqKuyvANm1niym85i

fjyP6XaJ0brikMPyx+TSNma/WiDoMXdDviUuYZo4tBJC2DUPJ/0KDI7ysAsMTB0R

8Q7Lc3GlJS65AFRNIxkpHI7tBPp2W8tZQlVBe7PEcWMzWRjWZAvwDGfnNvUtX4iY

bHEVWSzpoVQUk1hcylecYeMSCzBGw/efuWayIFoSf7ZXFe0TAEOJySwkzGJB9n78

4Rq0ydikMT4EFHP5G/iFI2zsx2vZGNsAHCw7XSVFydqb/ekm/9T7waqt3fW4

-----END CERTIFICATE-----
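Copying the block by hand is easy to get wrong, so here is a sketch that pulls the last certificate out of the -showcerts output instead. It assumes, as in the example above, that the server sends its full chain and that the root CA is the last certificate printed; if your server omits the root, this picks up an intermediate instead. `extract_last_cert` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `openssl s_client -showcerts` output on stdin
# and print only the last PEM certificate block it contains.
extract_last_cert() {
    awk '
        /-----BEGIN CERTIFICATE-----/ { buf = ""; inside = 1 }   # new block
        inside                        { buf = buf $0 "\n" }      # collect it
        /-----END CERTIFICATE-----/   { inside = 0; last = buf } # keep latest
        END                           { printf "%s", last }
    '
}
```

For example: `openssl s_client -showcerts -connect automation.ntitta.lab:443 </dev/null 2>/dev/null | extract_last_cert > root.crt`. Checking the result with `openssl x509 -in root.crt -noout -subject -issuer` before importing it confirms that subject and issuer are both the LCM CA.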

Back up the existing certificate store:

cp /etc/pki/tls/certs/ca-bundle.crt ~/

Copy the LCM certificate to the certificate store:

cat root.crt >> /etc/pki/tls/certs/ca-bundle.crt

Add the below to raas.service, /usr/lib/systemd/system/raas.service:

Environment=REQUESTS_CA_BUNDLE=/etc/pki/tls/certs/ca-bundle.crt

Example:

root@salty [ ~ ]# cat /usr/lib/systemd/system/raas.service

[Unit]

Description=The SaltStack Enterprise API Server

After=network.target

[Service]

Type=simple

User=raas

Group=raas

# to be able to bind port < 1024

AmbientCapabilities=CAP_NET_BIND_SERVICE

NoNewPrivileges=yes

RestrictAddressFamilies=AF_INET AF_INET6 AF_UNIX AF_NETLINK

PermissionsStartOnly=true

ExecStartPre=/bin/sh -c 'systemctl set-environment FIPS_MODE=$(/opt/vmware/bin/ovfenv -q --key fips-mode)'

ExecStartPre=/bin/sh -c 'systemctl set-environment NODE_TYPE=$(/opt/vmware/bin/ovfenv -q --key node-type)'

Environment=REQUESTS_CA_BUNDLE=/etc/pki/tls/certs/ca-bundle.crt

ExecStart=/usr/bin/raas

TimeoutStopSec=90

[Install]

WantedBy=multi-user.target

Restart the raas service:

systemctl daemon-reload

systemctl restart raas && tail -f /var/log/raas/raas

Upon restart, the above command starts to tail the raas logs. Ensure that the certificate-related messages no longer appear.
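Files under /usr/lib/systemd/system are normally owned by the package, so a direct edit can be lost when the unit file is replaced by an update. A systemd drop-in is one way to keep the same setting separate from the packaged unit. This is a sketch of an alternative to the edit shown above, not what was done in this lab; `write_raas_ca_dropin` and the file name `ca-bundle.conf` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: write a systemd drop-in that sets REQUESTS_CA_BUNDLE
# for raas.service instead of editing the packaged unit file.
write_raas_ca_dropin() {
    dropin_dir="${1:-/etc/systemd/system/raas.service.d}"
    ca_bundle="${2:-/etc/pki/tls/certs/ca-bundle.crt}"
    mkdir -p "$dropin_dir"
    printf '[Service]\nEnvironment=REQUESTS_CA_BUNDLE=%s\n' "$ca_bundle" \
        > "$dropin_dir/ca-bundle.conf"
}
```

After `write_raas_ca_dropin`, the same `systemctl daemon-reload` and `systemctl restart raas` steps apply.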

curl --header "Content-Type: application/json" \
  --request POST \
  --data '{"username":"xyz","password":"xyz"}' \
  https://IP/suite-api/api/auth/token/acquire